Looking a gift llama in the mouth

How Llama 3.1 uniquely leverages Meta's business model (and why we should be a little bit cynical about it)

With each new Llama release, the internet tends to have two reactions:

"open source is SO BACK, closed models NGMI"

"Why is Mark Zuckerberg spending billions to give away free models?"

That second question is one that I've been thinking about for quite some time. I previously wrote:

> In a way, the biggest obstacle to Microsoft, Google, and [other foundation model companies] controlling the next generation of AI technology is Meta.
>
> ...
>
> By making it so (almost) anyone can run Llama 2, or any other foundation model, Meta is attempting to build the AI industry standards of the future. And by doing so, commoditize the proprietary models that its competitors currently offer.

So far this year, I've seen bits and pieces of this strategy confirmed in Meta's earnings calls. The Q4 2023 call mentions that:

- Open source is safer and more secure
- Open source helps with hiring the best talent
- Becoming an industry standard is good for future innovations

And while all those reasons are true - they're among the reasons React was so successful as a framework - they weren't particularly satisfying. It's one thing to want to support open-source software; it's another to spend billions of dollars' worth of GPU time to give away free model weights.

Yesterday, Meta released Llama 3.1, and with it, a letter in which Zuckerberg lays out why he believes spending so much on AI is good not only for the company, but also for the world.

Let's take that one step at a time, starting with Llama 3.1.

Llama 3.1, by the numbers

Llama 3.1 is a family of models that includes a new, ginormous 405-billion-parameter model and slightly upgraded versions of the 8B and 70B models that were originally released as part of the Llama 3 launch. Those upgrades include a significantly larger context window (from 8K to 128K tokens) as well as better multilingual and tool-use capabilities.

The release also comes with new pieces of the "Llama system," a set of tools that make it easier for developers to build applications with Llama models. It's a long way from "I have an open-source LLM" to "I have a working LLM-enabled product." The Llama ecosystem has now grown to include an agentic framework, safeguards against harmful content and jailbreaks, and a proposal for new developer interfaces.

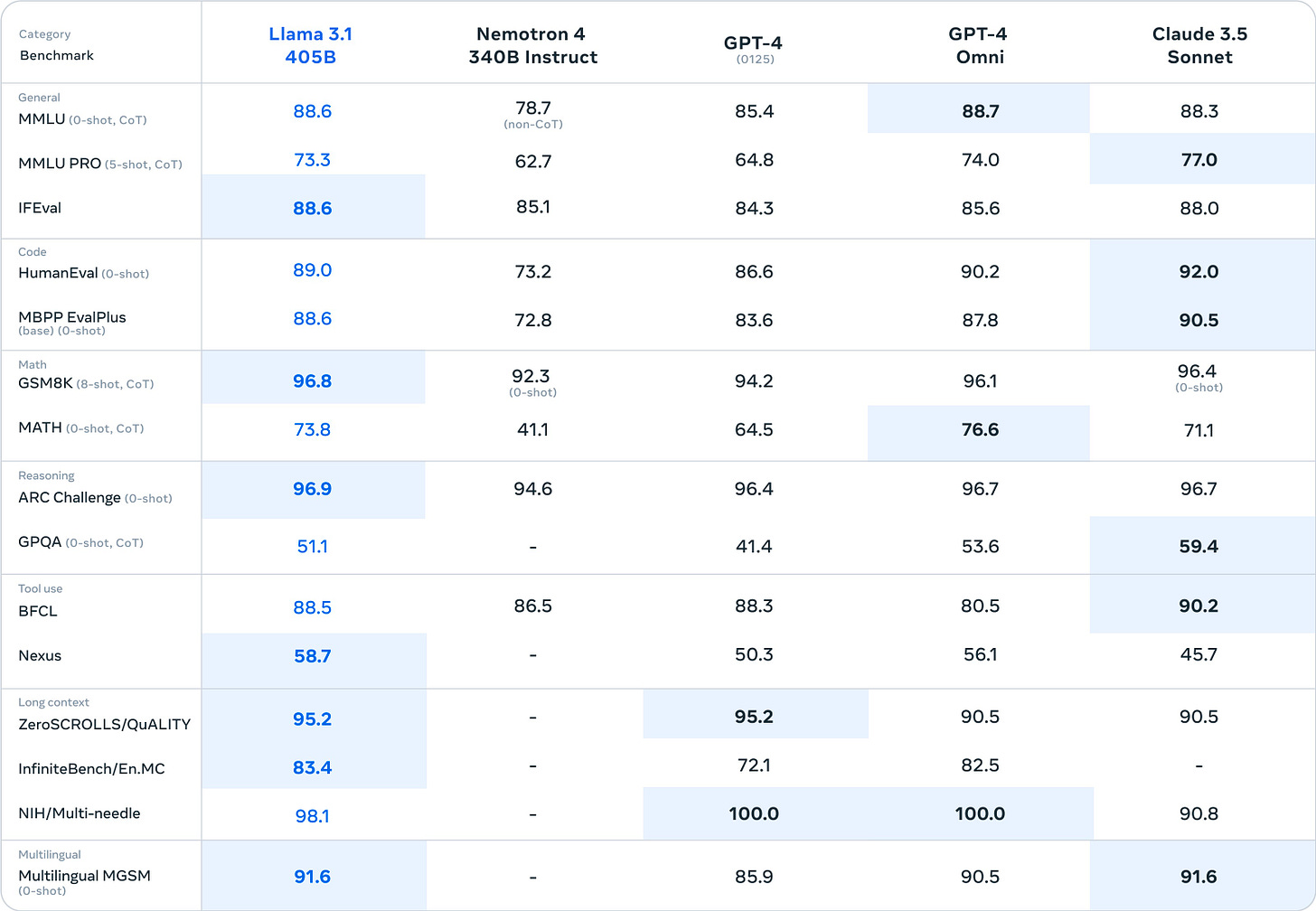

But perhaps the most impressive aspect of Llama 3.1 is that the 405B model is seemingly competitive with GPT-4o and Claude 3.5 Sonnet.

By now, we should all be taking benchmarks with a very big grain of salt. But even if they're only roughly accurate, they'd mean we're getting an open model in the same ballpark as the latest proprietary models within about two months of those models' release.[1]

The architecture for Llama 3.1 is straightforward: a standard dense Transformer, rather than the more complex strategies (like a mixture of experts) that we've seen from other companies. The simplicity is noteworthy because it requires more resources: a dense model uses every parameter for every token, while a mixture of experts activates only a subset of its parameters per token. Meta used 16,000 H100 GPUs to train the 405B model. In the words of Ben Thompson, "Meta trained Llama like a big company with lots of resources, while OpenAI trained GPT-4 like a startup."

But that brings us back to why: why did Meta repurpose $400M worth of hardware only to give away the result for free?[2]
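For a rough sense of scale, the $400M figure lines up with simple GPU-count-times-price arithmetic. This is a back-of-the-envelope sketch, not Meta's own accounting: it assumes the figure covers only the GPUs themselves (no networking, power, or datacenter costs) and spreads it evenly across the 16,000 H100s:

```latex
\frac{\$400{,}000{,}000}{16{,}000 \text{ H100 GPUs}} = \$25{,}000 \text{ per GPU}
```

That implied price sits at the low end of widely reported H100 prices (roughly $25,000 to $40,000 apiece), so $400M is best read as a conservative, hardware-only estimate.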

Giving away the goods

Mark Zuckerberg's letter has shed much more light on how he views (or at least positions) Meta's strategy in the broader AI competitive landscape.

He says that developers, CEOs, and governments need to have models that they control. That means protecting data, fine-tuning for specific use cases, and choosing the right long-term technology bet. That's true - while startups are fine using ChatGPT or Claude, larger organizations are less nimble and more prescriptive about the technology they can use. And there are many champions of open-source AI who are benefiting from Llama.

Meta itself fits the bill for the kind of company that benefits from Llama: it doesn't want to bet its future on a proprietary system, nor does it want to gamble that OpenAI will win out over Anthropic, DeepMind, Cohere, and the rest. Zuckerberg predicts that frontier model development will remain extremely competitive, which means 1) the "best" models will likely keep changing, and 2) open-sourcing any given model doesn't give away a big advantage for very long.

But there's one last reason that open-sourcing is good for Meta: it doesn't contradict its business model. As Zuckerberg writes:

> A key difference between Meta and closed model providers is that selling access to AI models isn't our business model. That means openly releasing Llama doesn't undercut our revenue, sustainability, or ability to invest in research like it does for closed providers.

A longstanding idea in tech is to "commoditize your complement": turn the adjacent layers of your stack, supply chain, or ecosystem into cheap, interchangeable commodities, so that your product benefits from more (and cheaper) options while your competitors fight each other. That frees you to double down on your strengths rather than trying to shore up your weaknesses.

OpenAI, Anthropic, DeepMind, and other foundation model developers make money by selling access to their models, either per token or via product subscriptions. Meta already has an enormous ad business (well over $100 billion in revenue in 2023), which means it can afford to give away the AI stuff for free.

And it's not just the model. With Llama 3.1, a significant ecosystem of partners is lined up from day one: Llama 405B is available on a dozen different cloud platforms, about half of which also offer fine-tuning and RAG.

That seems like a much, much bigger moat than having a model that performs 3% better on the latest benchmarks, especially as the models themselves continue to be commoditized: most developers are happy to switch from ChatGPT to Claude if it means better results.

And as an added benefit, Meta can also frame all of this as an argument for AI safety.

Making the world a better place

So far, all of the Llama talking points are well-worn strategies from the open-source playbook. But AI now has a geopolitical angle: we never saw companies arguing that JavaScript frameworks were for the good of all mankind.

Meta is happy to be the company championing global benefits. First, there's the safety argument, which Zuckerberg makes: the world is better off with democratized access to AI, and leveling the playing field gives larger actors (governments) the ability to check the actions of smaller actors (individuals).

There's also the liberal democratic values argument, i.e., the argument against China. From the letter:

> The United States' advantage is decentralized and open innovation. Some people argue that we must close our models to prevent China from gaining access to them, but my view is that this will not work and will only disadvantage the US and its allies. Our adversaries are great at espionage, stealing models that fit on a thumb drive is relatively easy, and most tech companies are far from operating in a way that would make this more difficult.

And look: while I'm sympathetic to Zuckerberg's arguments and in favor of open-source AI, I do think it's worth issuing a word of caution here.

Call me a cynic, but I don't believe there's such a thing as a free lunch. Interconnects pointed out that while the new Llama 3.1 license allows developers to use Llama to train other models, it also requires any such downstream model to include "Llama" at the beginning of its name. So while the open-source community benefits from Meta's training runs, it's also boosting the Llama brand and ecosystem over the long term.

Meta could also end up dictating the standard interfaces and APIs that we use to build with open-source LLMs. Privacy and open-internet advocates have spent years pushing back against analogous efforts by Big Tech to define browser standards.

I'm old enough to remember when Facebook made a play to create its own stablecoin, Libra. People (read: governments) panicked, fearing that Facebook would create a "sovereign currency" capable of operating outside the global banking system. The backlash from EU and US regulators was strong enough to kill the project's momentum before it ever got off the ground.

So yes, we should give kudos to Zuckerberg and Meta for championing open-source AI. But we should also be wary of depending on a tech giant to "save us" - Meta's incentives are aligned with the developer community for the moment, but that might not always be true.

[1] Granted, the latest proprietary models also have advanced multimodal features, but it's still a strong showing.

[2] There's also the question of "why did Meta have so many GPUs to begin with?" It turns out that when the company was launching Reels to compete with TikTok, it needed a ton of computing power for the recommendation and ranking systems behind it. After finding itself constrained by the number of GPUs available, the company started amassing enough GPUs to never be in that position again.
