The Chatbot Wars Are Over. What Comes Next?

Spoiler alert: ChatGPT won.

Something that I've been meaning to write about for a while now (months, really) is my increasingly confident observation that, for all intents and purposes, the "chatbot wars" - kicked off by the launch of ChatGPT - are over. ChatGPT won, and there doesn't seem to be much competition anymore.

What Does "Won" Mean?

It's perhaps a bold claim, but like I said, I'm increasingly confident. Let's start with some usage numbers, though admittedly, it's very hard to do a straight apples-to-apples comparison here. The leading companies all share different metrics, at various dates, if they share them at all - Google, for example, has never commented on Gemini's revenue, as far as I know.

But let's look at the numbers. ChatGPT reportedly had 700 million weekly active users in August, meaning their monthly active users are likely to be meaningfully higher. A rough rule of thumb for MAUs is 2x the WAUs, but it can range from 1.5x to 4x depending on "stickiness." So it wouldn't be unreasonable to say that ChatGPT has in the range of 800M to 1.6B monthly active users, which is also roughly in line with their July statistic of 2.5B prompts per day.
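To make that back-of-the-envelope math concrete, here is a minimal sketch that applies the multipliers quoted above to the reported 700M weekly figure. The function and variable names are purely illustrative, and the multipliers are the rule-of-thumb values from this post, not measured data.

```python
# Reported figure from the text: ~700M weekly active users (August).
WAU = 700_000_000

# MAU/WAU multipliers quoted in the text: 2x as the rule of thumb,
# ranging from 1.5x (very sticky product) to 4x (less sticky).
MULTIPLIERS = {"low": 1.5, "typical": 2.0, "high": 4.0}


def estimate_mau(wau: int, multiplier: float) -> int:
    """Estimate monthly active users from weekly active users."""
    return round(wau * multiplier)


# Hypothetical helper output: one MAU estimate per multiplier.
estimates = {label: estimate_mau(WAU, m) for label, m in MULTIPLIERS.items()}

for label, mau in estimates.items():
    print(f"{label:>7}: {mau / 1e9:.2f}B MAU")
```

Taken literally, the multipliers give roughly 1.05B to 2.8B, so the 800M to 1.6B range above sits at the conservative end of the rule of thumb.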

The next biggest alternative, Gemini, had about 450M monthly active users as of July. Of course, a lot can happen in a few months in this space, but I'm pretty skeptical that Gemini has grown its usage by 3x in that time[1]. And the third option, Anthropic's Claude, only had 2M monthly active users as of January of this year. That's not all of Claude's usage, mind you - just the chatbot - but more on that later.

Revenue-wise, we see a similar story with our two data points, OpenAI and Anthropic. OpenAI does north of $12B in ARR, with one estimate suggesting ~75% of that comes from chatbot usage (i.e. paid ChatGPT subscriptions). Anthropic, meanwhile, "only" has $4B in ARR, with an estimated 25% coming from chatbots (Claude Code alone is about one-eighth of Anthropic's total ARR).
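As a rough sanity check on those revenue splits, the sketch below multiplies the ARR figures by the estimated chatbot shares. All inputs are the estimates quoted in this post (OpenAI's $12B is a floor, since the figure is "north of" that), and reading Claude Code's one-eighth as a share of Anthropic's total ARR is an assumption, though it matches the ~$500M run rate mentioned later on.

```python
# ARR and estimated chatbot share, as quoted in the text (USD).
LABS = {
    "OpenAI": {"arr": 12e9, "chatbot_share": 0.75},     # "north of" $12B, so a floor
    "Anthropic": {"arr": 4e9, "chatbot_share": 0.25},
}


def chatbot_arr(arr: float, share: float) -> float:
    """Portion of annual recurring revenue attributed to chatbot usage."""
    return arr * share


chatbot_revenue = {
    name: chatbot_arr(d["arr"], d["chatbot_share"]) for name, d in LABS.items()
}

# How many times larger OpenAI's chatbot revenue is than Anthropic's.
ratio = chatbot_revenue["OpenAI"] / chatbot_revenue["Anthropic"]

# Assumption: "one-eighth" refers to Anthropic's total ARR.
claude_code_arr = LABS["Anthropic"]["arr"] / 8

for name, revenue in chatbot_revenue.items():
    print(f"{name}: ${revenue / 1e9:.1f}B chatbot ARR")
print(f"OpenAI / Anthropic chatbot ARR ratio: {ratio:.0f}x")
print(f"Claude Code (assumed 1/8 of total): ${claude_code_arr / 1e9:.1f}B")
```

On these estimates, the gap in chatbot revenue (about 9x) is much wider than the 3x gap in overall ARR.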

Anthropic has even acknowledged that they're not focused on chatbots anymore - a seeming admission that we've reached the endgame. According to Jared Kaplan, Anthropic's chief science officer, "we stopped investing in chatbots at the end of last year and have instead focused on improving Claude's ability to do complex tasks like research and coding."

And there are also subjective metrics. Nobody says "Claude it" or "ask Gemini." The New York Times isn't writing articles about the dangers of Llama 3.1. And ultimately, as competitors cede ground in the chatbot fight, consumers may simply come to associate "ChatGPT" with "chatbots."[2]

As things stand, ChatGPT is quickly becoming the "Kleenex" of AI - a phenomenon known as "trademark genericization" or "genericide."

Why the Competition Stumbled

When I say "competition" here, I'm talking about the race between OpenAI, Anthropic, and Google DeepMind. Two years ago, there were several more names I could put up here (see: OpenAI's top competitors), but they've mostly become irrelevant in the chatbot space or have pivoted to other use cases.

Why, though? Looking back, I think three key advantages created something of a flywheel that made it tough for others to keep up with:

First-mover advantage. ChatGPT wasn't the first large language model - it wasn't even the first widely remarkable release from OpenAI (I'd argue GPT-3 takes that crown). But it was the first "modern" LLM that didn't require special prompting or documentation.

And although the first version was rough (i.e. hallucinated a ton), the base GPT-3.5 model was still strikingly intelligent, and the bare-bones chat UI was very low friction (I'm old enough to remember the first version of ChatGPT didn't require a login to use). So by the time competitors launched their own "ChatGPT alternative," millions of users had incorporated the original into their daily workflows.

Access to capital. While OpenAI had done a great job raising capital around the time ChatGPT launched, in the form of their (still unconfirmed) $10B Microsoft partnership, they've taken it to another level since. In the past two years, the company raised first a $6.6B round and then a $40B one - the largest private technology deal on record.

And that war chest has enabled them to do things like subsidize the free tier of ChatGPT[3], or even offer ChatGPT as a service at all - most other companies would find it almost impossible to secure enough compute if faced with the same level of demand as OpenAI.

The capital has also allowed OpenAI to cut loose on marketing - so far, they're the only AI lab besides Google that's run a Super Bowl commercial, despite making decidedly less than Google's ad revenues.

In the long run, this might not hold up, given Sundar Pichai and Mark Zuckerberg's near-bottomless pockets, but it's likely made a big difference compared to smaller labs like Anthropic and Stability.

Relentless shipping. The last thing that has helped keep OpenAI in the lead is its relentless shipping pace, both because it's allowed them to compound their advantages and build a better product faster, and because it's garnered them an enormous amount of free PR. In the two years since ChatGPT, they have launched so many different models, products, programs, and initiatives that the company now attracts millions (if not billions) of eyeballs.

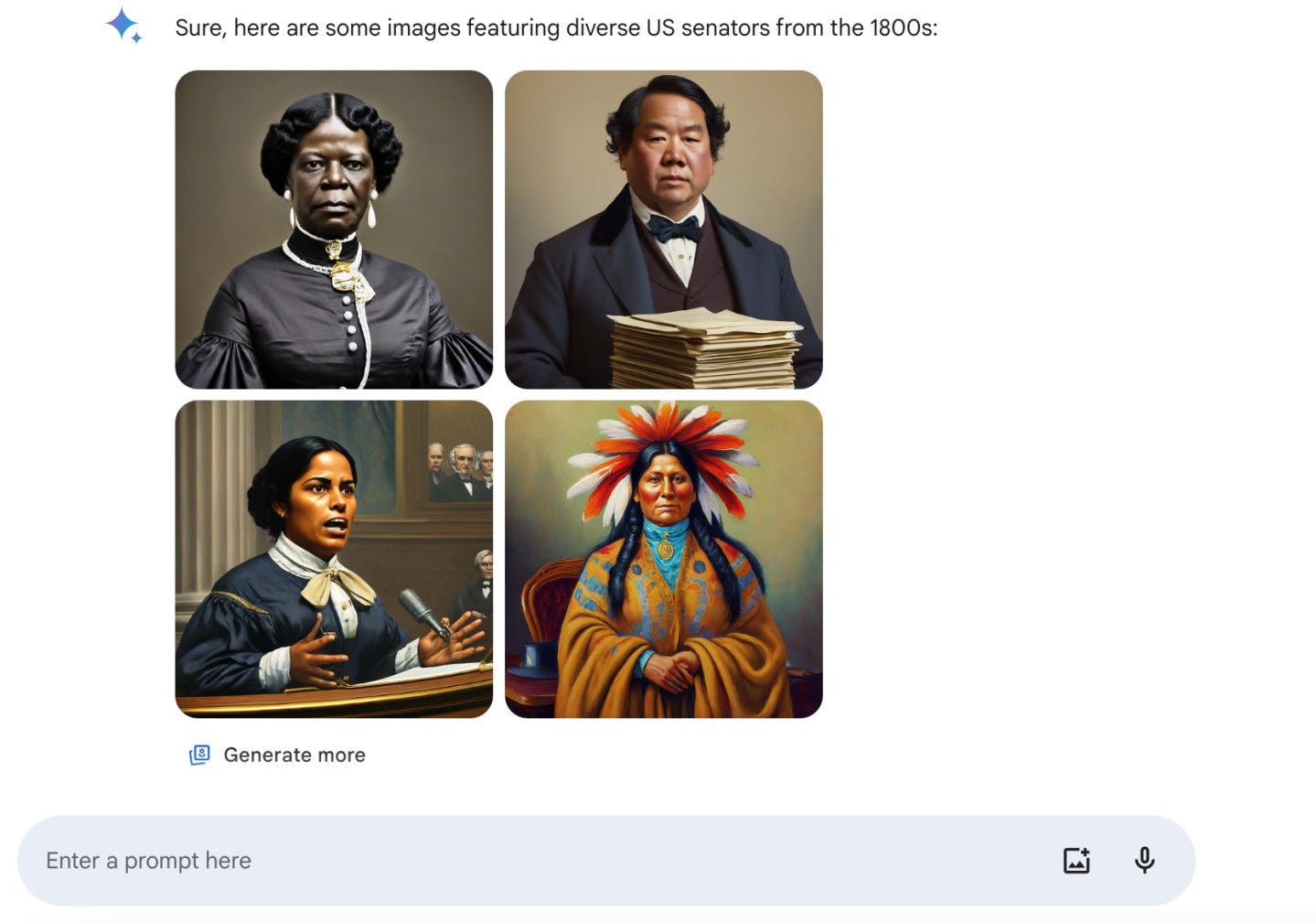

They also stood in stark contrast to Google for much of that time. The narrative for over a year was that Google couldn't get its act together - it failed to ship a GPT-4 class model for months, there was an awkward rebrand from Bard to Gemini as it figured out its product marketing strategy, and there were plenty of mishaps with the rollout of Google's various AI features - remember the "diverse US senators" incident?

For what it's worth, Google has seemingly turned the ship around - its models are now roughly on par with OpenAI's as a whole, and better in some aspects. They've released compelling product experiences like NotebookLM. And they still have the massive incumbent advantage of, well, being Google.

But OpenAI's shipping culture has allowed the company to not only have dramatically large ambitions, but also take a real shot at fighting on multiple fronts at the same time. And as a result, it's an easy decision for consumers to stick with ChatGPT - it offers far more bells and whistles as part of its subscription than other chatbots, and even if Gemini or Claude pioneers a hot new feature, odds are it will come to ChatGPT within weeks.

Dethroning ChatGPT

ChatGPT's lead in general-purpose chat seems insurmountable, but technology history is littered with "insurmountable" leads. To my mind, there are three-ish ways that it could still lose.

Gemini by default. Ultimately, if Google decides they don't need to monetize Gemini via subscriptions, it could be offered for free across a wide range of Google products. And even if it's worse than ChatGPT, it'll be hard to beat the price point or ubiquity of a bundled-by-default Gemini.

I think of this as the "Microsoft Teams" of AI - for years, Slack offered a better product experience as a B2B chat app, but Microsoft was able to fight a war of attrition, and simply offered Teams as a free product within their Office 365 bundle. And in the end, Teams ended up with hundreds of millions of MAUs, several times that of Slack.

Regulatory restrictions. Should the regulatory environment get a lot harder for AI companies, it might turn out that chatbots are no longer worth pursuing as a business model. The strongest case here would be something like making AI companies liable for things like violence or suicide. But in truth, I find it hard to believe we'll see strong AI regulation at all under this administration, let alone regulation that somehow disadvantages incumbents and elevates smaller competitors.

AI hardware. If I had to guess, the software that most people interact with more than any other is their phone's operating system: iOS or Android. We reach for our phones an average of 144 times per day - they're incredibly addictive. Inevitably, someone will put good AI companions on our phones, far better than the AI-enabled wearables we've seen so far. And if the phone makers themselves can own that experience, it could ultimately limit the growth of "third-party" chatbots like ChatGPT[4].

What About Open Source?

The wild card in all of this is open source. How can I declare ChatGPT the winner when free alternatives exist? I'd argue Meta, Mistral, DeepSeek, and others aren't losing this race because they're not even running it.

These players positioned themselves as arms dealers in the chatbot wars - selling picks and shovels (or giving them away to undermine competitors' moats). Meta, for example, hasn't been particularly concerned with making money on a Llama-based chatbot[5] - it's happy to give that away for free, whether as a standalone model or as part of its Meta AI features, as long as it helps retain users and drive ad revenue.

Mistral's business model revolves around selling API access, not chatbot subscriptions. And DeepSeek is a research spinoff from a hedge fund, meaning it doesn't exactly need to pay back billions to investors[6].

In these cases, the open-source companies don't care about chatbot subscription revenue; they care about developer adoption and downstream application usage. And they're certainly doing a good job with those metrics - but they're not competing for the consumer chatbot crown.

The Balkanization of AI

Of course, even though chatbots may not be a particularly competitive space anymore, AI labs are now competing on several new frontiers.

AI IDEs. The most prominent - and arguably, also close to consolidation - is AI-powered IDEs. It's Cursor's race to lose at the moment, especially in the wake of Windsurf being gutted as a product (after an OpenAI acquisition fell through, company leadership went to Google and the core product seems to have been mostly stripped for parts) and most upstarts having a fraction of the user base. There are both existing and new entrants from big tech - GitHub Copilot, Amazon Kiro, maybe even an updated Xcode - but I'd mostly see them winning if and when this becomes a war of attrition rather than innovation, where products can simply offer free inference to attract new users.

Coding agents. However, things are quickly evolving beyond code editors and into coding agents, which changes the interaction model entirely (and opens up more competition). Instead of AI that helps you write code, we're seeing AI that can write, run, debug, and deploy code with varying degrees of autonomy. Claude Code is the frontrunner, with over $500M in run-rate revenue as a standalone product. Then there's Google Jules, OpenAI Codex, and myriad other "coding agent" startups trying to get a piece of the action.

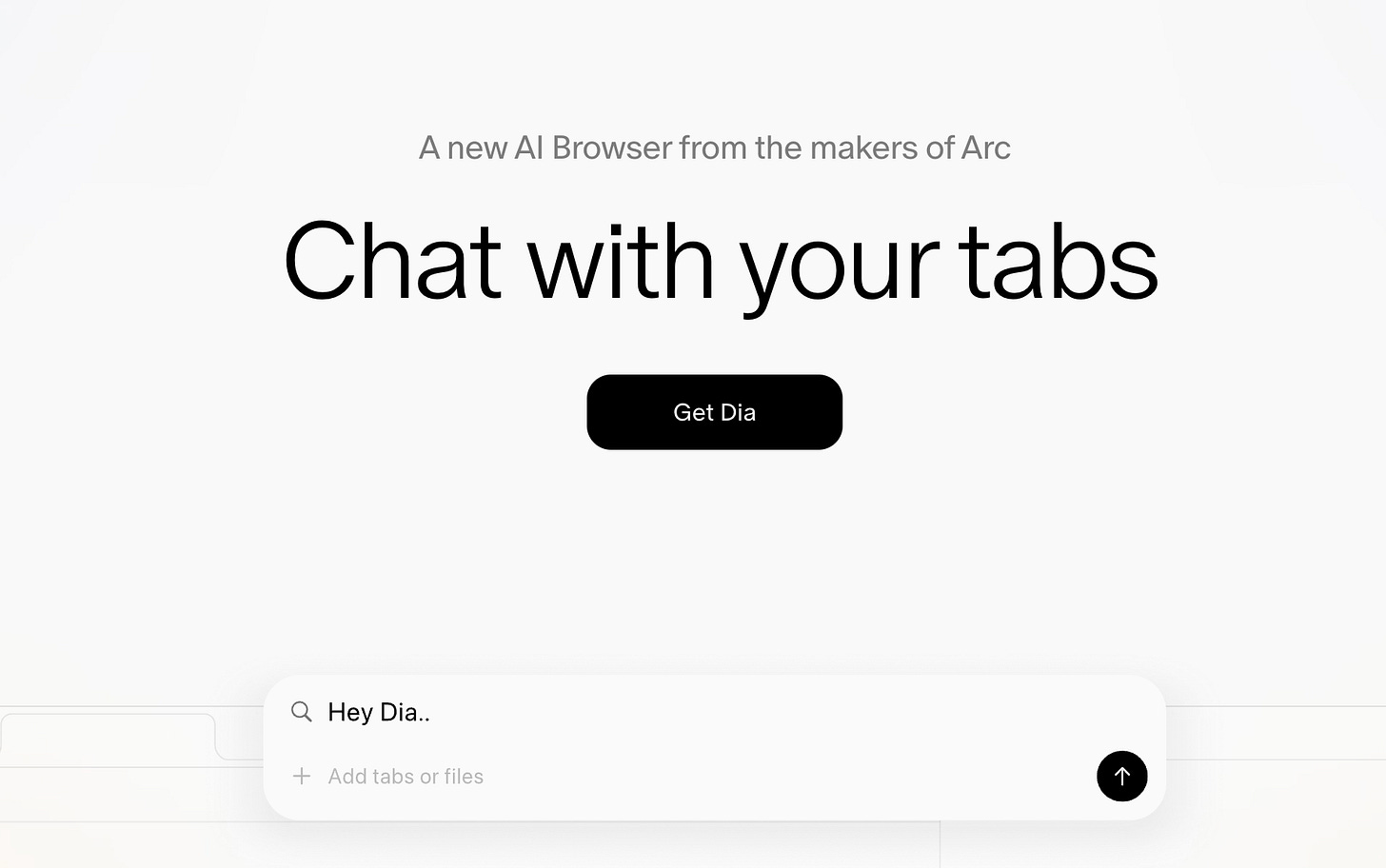

Browser agents. Much less mature than coding agents, but still highly competitive, are browser agents - AI that can navigate and take action across the web on your behalf. The big competitors are already emerging. OpenAI's ChatGPT can now browse and interact with websites. Google is building "Project Mariner," which promises to integrate AI agents directly into Chrome itself - leveraging all their internal browser expertise. And then there are more experimental approaches like Dia, which is building a browser from the ground up with LLMs in mind, or extensions like "Claude for Chrome" that give existing AI models control over your browsing experience.

What's particularly interesting here is how the competitive dynamics differ from chatbots. Google has a massive structural advantage given that Chrome has 65% market share - they can bake AI agents directly into the browser that billions of people already use daily. But that also creates an opening for someone like Dia to rethink the entire browser experience around AI from first principles, rather than retrofitting existing interfaces.

Big Tech 2.0?

And there are undoubtedly more categories coming. I'm guessing that in the next few months, we'll see the frontier labs launch even more features that attempt to turn their models into moats across various digital workflows. Shopping seems like an obvious extension - while general-purpose agents are neat, there's real money to be made in having an AI that acts as the middleman between you and Amazon or Shopify.

The pattern is becoming clear: if there's a digital task that can be broken down into concrete, reasonably manageable workflows - and if there's a way for AI companies to position themselves between you and that task while charging for the privilege - they'll likely take a swing at it[7].

But unlike the chatbot wars, these new battle lines will likely be more fragmented and specialized. Coding agents, browser automation, and task-specific workflows may become winner-take-all markets within their respective niches. And at the moment, it seems only a handful of companies are competing across multiple fronts.

In some ways, I see this as potentially a repeat of "Software 2.0" and Big Tech's oligopoly. Across most major verticals that matter (search engines, mobile phones, e-commerce, social media, cloud computing, etc.), the pie is divvied up between one clear leader and a second and/or third runner-up, but all of the faces are pretty familiar.

[1] Additionally, it's going to get increasingly harder to know what counts as an "activation" when it comes to big tech's chatbots. I'm assuming the usage presented here means something along the lines of "users having a chat with the Gemini chatbot" and not "any user triggering a Gemini-powered feature across Gmail, Docs, etc."

[2] According to YouGov, 84% of people surveyed have heard of ChatGPT, compared with 73% for Gemini and 38% for Claude.

[3] Long term, I fully expect the free version of ChatGPT to disappear or get meaningfully worse, whether that means only offering (relatively) dumb models, injecting ads, or otherwise turning the user into the product.

[4] Which is why OpenAI is hell-bent on launching a consumer hardware device.

[5] Though this may be changing, as Mark Zuckerberg is now attempting to chart a path to "superintelligence," and may retreat from championing open source models as much as before.

[6] And in both cases, there's a strong national interest to have a leading, home-grown AI lab, meaning less pressure to monetize the results.

[7] The ultimate question here though is whether we see any major AI lab go for full-on AI companions. OpenAI seems to be the closest, but it's clear that there's a lot of risk involved with actively marketing something meant to form parasocial relationships with users.

I sort of agree with your premise here in that ChatGPT has become pretty much synonymous with "standalone AI chatbots."

But I'm quite bullish on Google when it comes to the future paradigm, which I think will be much less "logging onto a dedicated chat website" and more "using AI naturally within my day-to-day ecosystem or suite of products." Here, Google has massive advantages since so much of the business world is powered by stuff like Gmail, Google Slides, Sheets, Docs, etc. - and all of them are gradually getting increasingly meaningful integrations with Gemini models behind the scenes, which also serve as the "glue" that pulls the entire Google ecosystem together.

Google's slow to get going and cautious about releasing models prematurely, but judging by the pace of releases and SOTA models it's pushing out, I don't see why these can't be incorporated into its products in a meaningful way going forward.

Having said that, ChatGPT Plus is currently the only subscription I actually pay for...but that might change.
