The Chatbot Trap

Why AI products really need some better UX.

I've often said that I believe generative AI is a technological shift at least as big as the smartphone. One reason for that analogy is how ubiquitous smartphones have become - for many of us, they're the first thing we see when waking up and the last thing we see before falling asleep.

But there are other reasons why I make the comparison. For example: when the iPhone first launched, mobile browser traffic exploded, and companies scrambled to do something about it. For most, that meant building "m.google.com" and "m.facebook.com" - pages that took the existing desktop format and crammed it into a vertical aspect ratio.

While it took the better part of a decade, we eventually figured out what "native" mobile experiences were. To get there, we had to invent entirely new interaction patterns: pinch to zoom, pull to refresh, swipe to advance. Eventually, we built apps like Instagram, Uber, and Strava - products that simply couldn't have existed in a desktop-first world.

We're at a similar inflection point with AI interfaces today. But instead of cramming desktop websites into phone screens, we're forcing AI capabilities into chat windows. Just as "m.facebook.com" was a compromise that failed to capture mobile's true potential, the ubiquitous chatbot interface is holding back AI's transformative power.

Right now, it feels like 95% of "AI-enabled" products I encounter have welded a chatbot to an existing SaaS app - and in reality, that number should be closer to 5% [1].

The cognitive cost of conversation

To understand why chatbot interfaces are often the wrong choice, we need to look at their fundamental limitations. The most obvious: the cognitive load they impose on users.

Imagine a chart of different AI features with two axes: "How much impact/value can this have?" and "How much brainpower does it take for me to use this effectively?" Ideally, you want your tools to have a significant impact while requiring very little active thinking. With LLMs, the impact is high - but so is the cognitive load when using them [2].

Imagine using ChatGPT to generate some code (without a fancy AI-enabled code editor). There’s a bug in the output that needs to be fixed. With a chatbot interface, you have to:

Copy (or generate) the entire document into the chat

Explain the bug you want to fix (or the line you want to change)

Wait for the whole document to be regenerated

Scan through to find what changed

Verify nothing else was inadvertently modified/hallucinated

Copy the result back to your original code editor

That's... a lot of work for what should be a simple edit. And if you're repeatedly tweaking the code or trying to get ChatGPT to help you debug, it can mean having to check for hallucinations or work around "... the rest of the code here ..." over and over again.
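To make the "scan through to find what changed" step concrete, here's a minimal Python sketch using the standard library's difflib. The `changed_lines` helper and the sample snippets are hypothetical, made up for illustration. The point is only that a tool can surface the one line that changed instead of asking you to reread the whole regenerated file.

```python
import difflib


def changed_lines(before: str, after: str) -> list[str]:
    """Return only the removed ("- ") and added ("+ ") lines between two versions."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff also emits unchanged lines ("  ") and hint lines ("? "); drop both.
    return [line for line in diff if line.startswith(("- ", "+ "))]


# Hypothetical example: the "regenerated" file differs by a single operator.
original = "def area(w, h):\n    return w + h\n\nprint(area(2, 3))\n"
regenerated = "def area(w, h):\n    return w * h\n\nprint(area(2, 3))\n"

result = changed_lines(original, regenerated)
for line in result:
    print(line)
```

Even this crude version turns "reread everything and hope nothing else moved" into a two-line review.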

There's also the issue of affordances (or lack thereof) for new users. At this point, I mostly know what to expect from a new LLM, but that's not true for everyone. The challenge isn't just that chatbots are general purpose - it's that they give users almost no clues about their capabilities or limitations. Without clear UI indicators, users are left guessing what's possible and what isn't.

I especially like the way Amelia Wattenberger puts it:

Good tools make it clear how they should be used. And more importantly, how they should not be used. If we think about a good pair of gloves, it's immediately obvious how we should use them. They're hand-shaped! We put them on our hands. And the specific material tells us more: metal mesh gloves are for preventing physical harm, rubber gloves are for preventing chemical harm, and leather gloves are for looking cool on a motorcycle.

Compare that to looking at a typical chat interface. The only clue we receive is that we should type characters into the textbox. The interface looks the same as a Google search box, a login form, and a credit card field.

Luckily, computer interfaces have been around for 50 years - and we've had the solution to this problem for 40 of them.

Direct Manipulation: A 40-year-old solution

To understand why chatbot UX is problematic, I want to discuss a concept from the 1980s: direct manipulation interfaces. Introduced by Ben Shneiderman over forty years ago, these interfaces have a few key characteristics:

Continuous representation of objects: Users can see visual representations of the objects they can interact with on the screen.

Physical actions: Instead of complex syntax or commands, users interact with objects via physical actions like clicking, dragging, or pinching.

Rapid, incremental, and reversible actions: Users can quickly perform actions, see immediate results, and easily undo or modify their actions.

Immediate feedback: The effects of user actions are immediately visible on the screen, instantly confirming the result.

In today's world, that means interactions like drag-and-drop, resizing windows, volume sliders, pinch-to-zoom, and more. Those might sound like obvious, everyday interfaces, but they only exist because people explicitly invented them [3].

And we're still building software that encourages direct manipulation in new ways. Figma, for example, makes every object on the canvas selectable, with properties updating as you drag handles and sliders. You're elbow-deep in the actual media that you're designing.

Similarly, Notion takes the core concept of "blocks" and uses it to create tangible, malleable documents. You can drag blocks to reorganize sections, or transform them into different output types.

The challenge with many current AI interfaces is that they ignore the principles above. It's not apparent what commands will work. Changes are often all-or-nothing rather than incremental. Feedback is unpredictable and frequently delayed. And actions aren't easily reversible.
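For contrast, here's a rough Python sketch of what "rapid, incremental, and reversible" can look like in code. The `EditHistory` class is hypothetical (my own illustration, not taken from any product): each edit touches one small span, and every edit can be undone or redone.

```python
from dataclasses import dataclass, field


@dataclass
class EditHistory:
    """Hypothetical document model where every edit is small and undoable."""

    text: str
    _undo: list[str] = field(default_factory=list)
    _redo: list[str] = field(default_factory=list)

    def apply(self, old: str, new: str) -> bool:
        """Replace the first occurrence of `old`; return False if it isn't found."""
        if old not in self.text:
            return False
        self._undo.append(self.text)  # remember the state before this edit
        self._redo.clear()            # a fresh edit invalidates the redo stack
        self.text = self.text.replace(old, new, 1)
        return True

    def undo(self) -> None:
        if self._undo:
            self._redo.append(self.text)
            self.text = self._undo.pop()

    def redo(self) -> None:
        if self._redo:
            self._undo.append(self.text)
            self.text = self._redo.pop()


doc = EditHistory("The quick brown fox")
doc.apply("quick", "slow")
doc.apply("brown", "red")
doc.undo()  # reverts only the most recent edit
print(doc.text)
```

A chat interface that regenerates the whole document has no natural equivalent of that `undo()` call: the unit of change is the entire response.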

To be clear, I'm not suggesting we abandon natural language interaction entirely. Instead, it's about finding the right balance between conversation and direct manipulation. Sometimes, you want to have a discussion about your document's structure; other times, you just want to reword a single sentence.

The key insight here is that different tasks require different interaction models. Just as Figma uses direct manipulation for visual design but still includes a command palette for power users, AI interfaces need to thoughtfully blend multiple interaction patterns and ways of abstracting content.

Turning the dial of abstraction

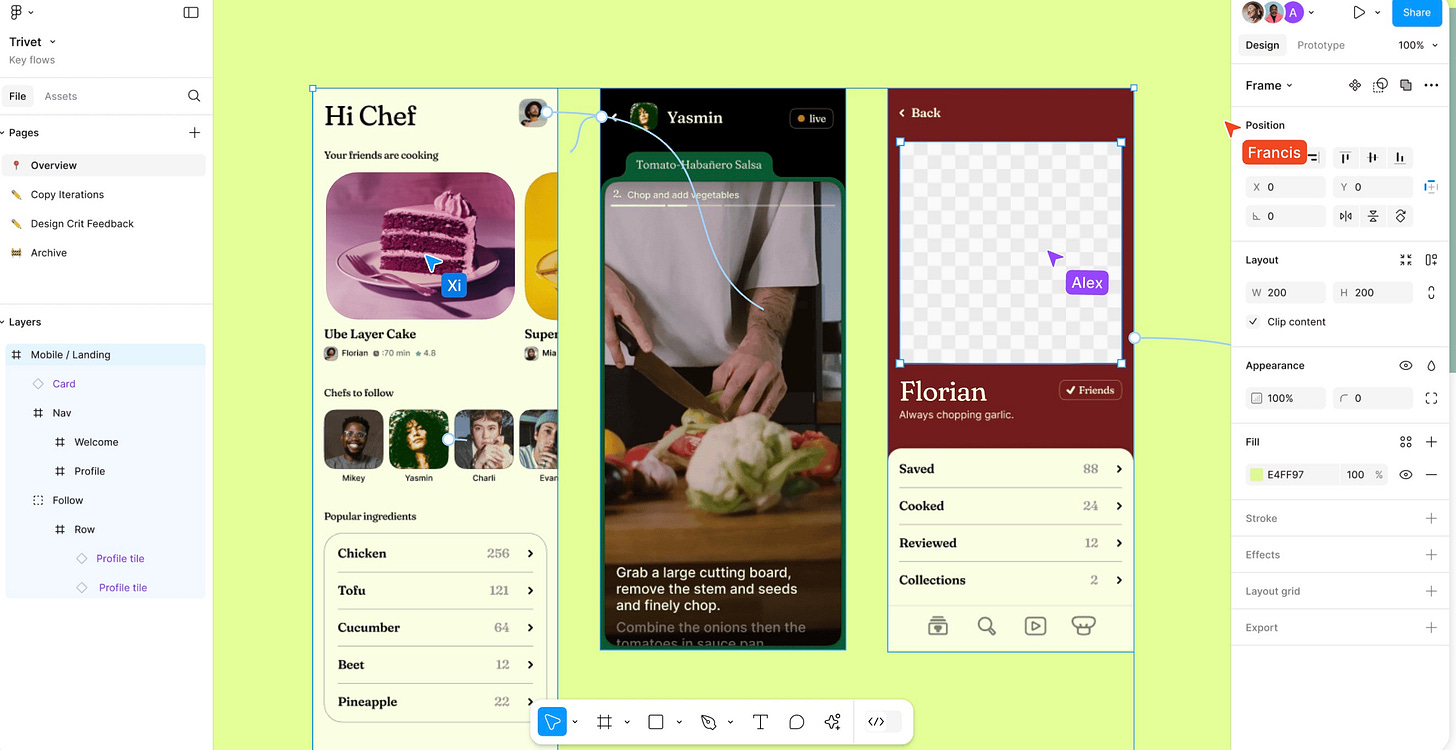

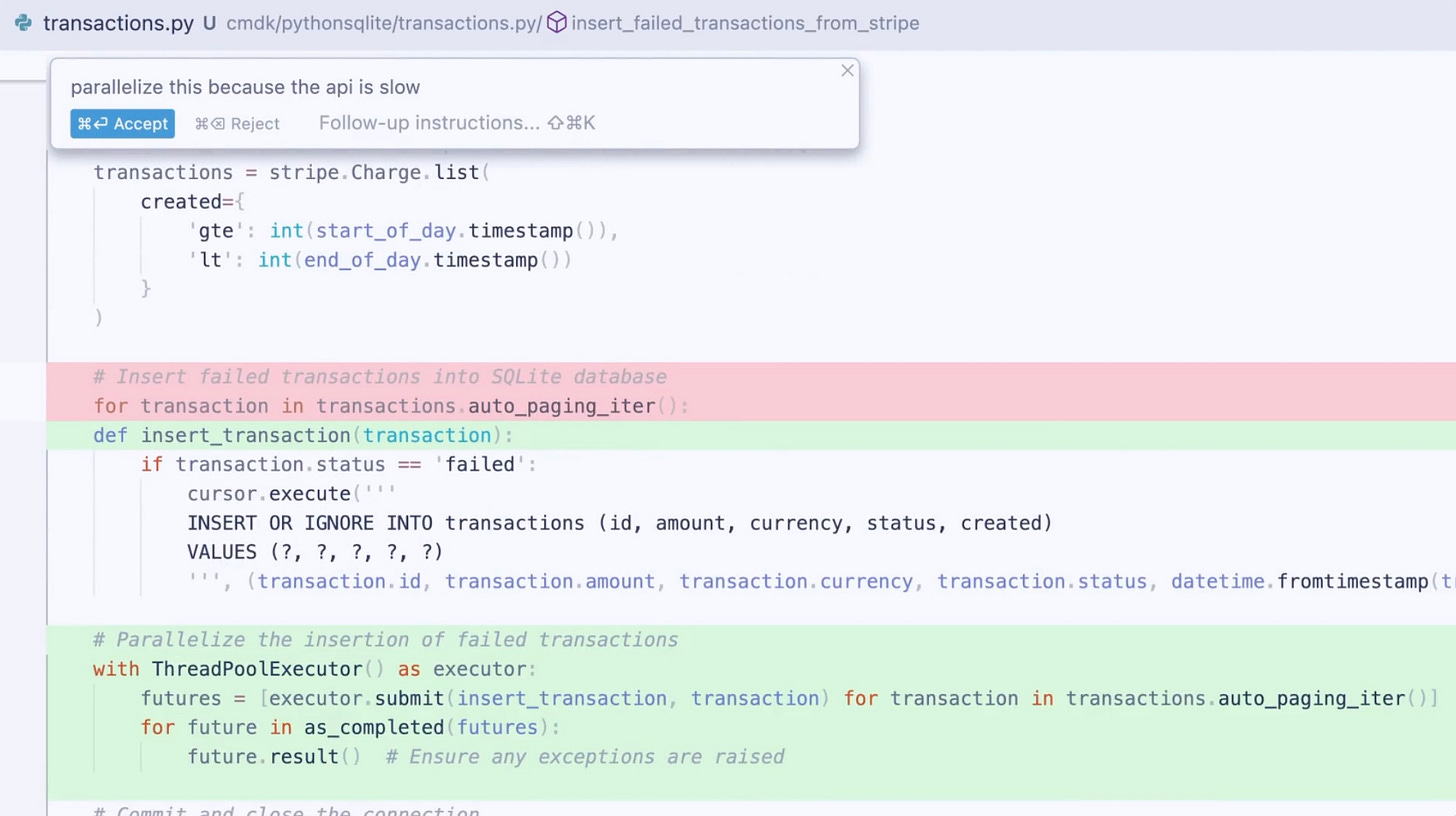

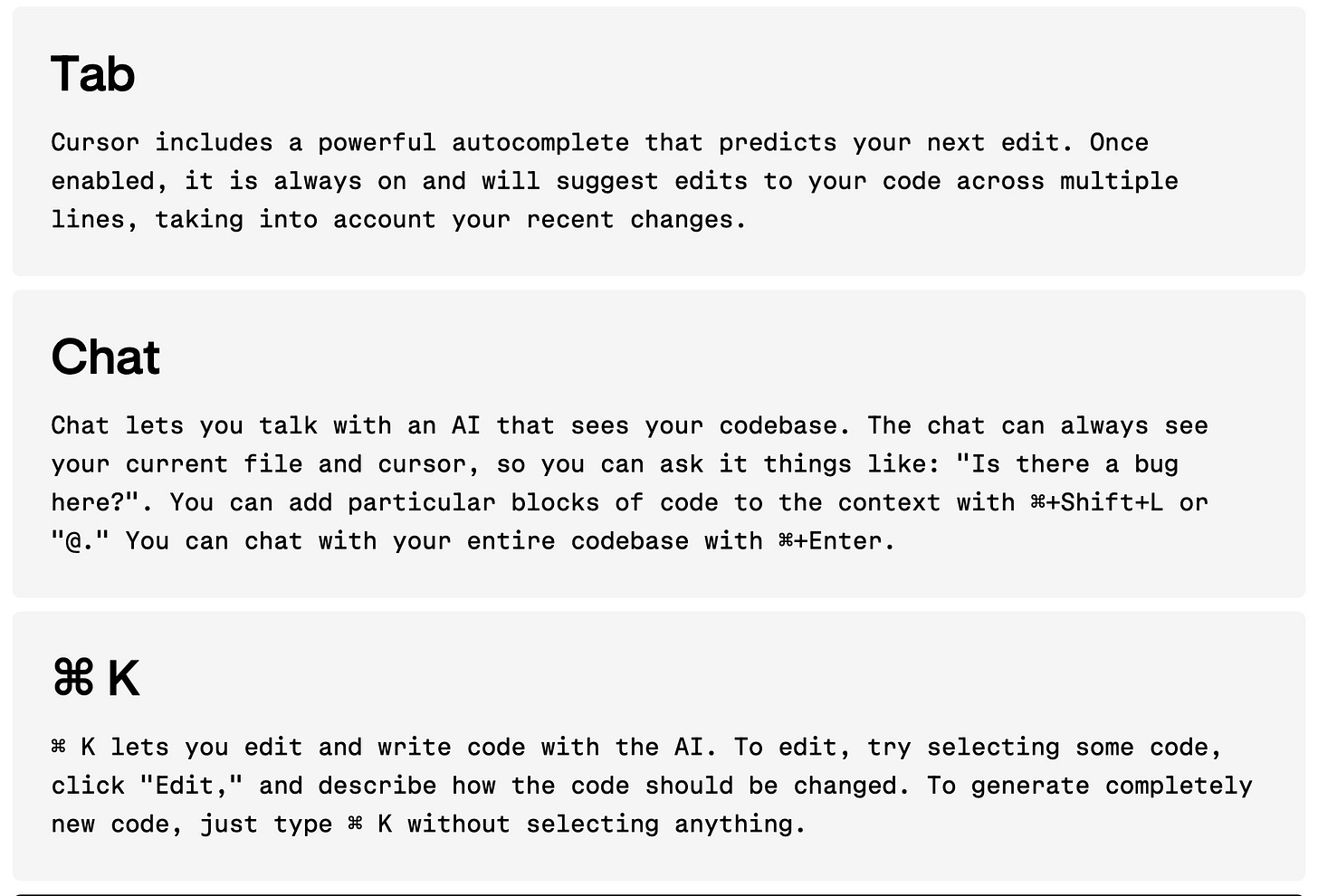

To see how we might move beyond chatbots, let's examine how different interaction models can work together in practice. Take Cursor, an AI-enhanced code editor that demonstrates how multiple levels of abstraction can coexist in a single interface:

At the lowest level, there's character-by-character code completion. This requires almost zero cognitive load - there's no context switching, no need to formulate prompts, and no breaking your flow.

Moving up a level, we have inline code generation. When you need to write a new function or component, you can describe what you want in natural language right where you need it. But crucially, the results appear as a diff - you can see exactly what's being added or modified, and accept changes granularly.

At the highest level of abstraction, there's a sidebar chat for more complex tasks like analyzing architecture or debugging issues. But even here, the interface isn't just a generic chatbot. The AI has context about your codebase, can reference specific files and functions, and can propose changes that you can preview and apply directly.

Importantly, these aren’t just different interfaces - they're appropriate interfaces for different scales of task. You don't need a chat conversation to autocomplete a variable name, but you might want one to understand a complex algorithm.

And because every change is filtered through a model that's fine-tuned to generate diffs, there's a unified interface for reviewing and accepting changes, no matter where the code suggestions come from.
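As an illustration of what accepting changes granularly might mean mechanically, here's a small Python sketch built on difflib. To be clear, this is an assumption-laden toy, not how Cursor works: `merge_accepted` and the sample lines are hypothetical names of my own. It splits a proposed rewrite into numbered changes and applies only the ones the user accepted.

```python
import difflib


def merge_accepted(
    before: list[str], proposed: list[str], accepted: set[int]
) -> list[str]:
    """Keep `before`, except for the changed regions whose index is in `accepted`."""
    matcher = difflib.SequenceMatcher(a=before, b=proposed, autojunk=False)
    merged: list[str] = []
    change_index = 0  # counts only the non-equal regions, in order
    for tag, a0, a1, b0, b1 in matcher.get_opcodes():
        if tag == "equal":
            merged.extend(before[a0:a1])
            continue
        if change_index in accepted:
            merged.extend(proposed[b0:b1])  # take the suggested version
        else:
            merged.extend(before[a0:a1])    # keep the original lines
        change_index += 1
    return merged


before = ["a = 1", "b = 2", "c = 3", "print(a + b + c)"]
proposed = ["a = 10", "b = 2", "c = 30", "print(a + b + c)"]

# Reject the first change (index 0), accept the second (index 1).
merged = merge_accepted(before, proposed, accepted={1})
print(merged)
```

Whatever produced `proposed` (autocomplete, inline generation, or a sidebar chat), the review step looks the same, which is the appeal of a unified diff interface.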

This pattern of "layered abstractions" could extend far beyond code editors. Imagine an AI writing tool that applies similar principles:

Character-level suggestions for copyediting and rewording

Sentence-level analysis of evidence and clarity

Paragraph-level suggestions about theme and flow

Document-level feedback on structure and thesis

All changes presented as specific, reversible diffs
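One way to picture that list is as a single data structure. The Python sketch below is purely hypothetical (the `Level` and `Suggestion` names, and the idea of storing character offsets, are my assumptions): every suggestion, whatever its scale, is a span plus a replacement, so each one can be applied and reverted on its own.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    CHARACTER = 1
    SENTENCE = 2
    PARAGRAPH = 3
    DOCUMENT = 4


@dataclass(frozen=True)
class Suggestion:
    level: Level
    start: int        # character offset where the replaced span begins
    end: int          # character offset just past the replaced span
    replacement: str
    note: str


def apply_suggestion(text: str, s: Suggestion) -> str:
    """Return `text` with the span [start, end) swapped for the replacement."""
    return text[: s.start] + s.replacement + text[s.end :]


def invert_suggestion(text: str, s: Suggestion) -> Suggestion:
    """Build the suggestion that undoes `s` once `s` has been applied to `text`."""
    return Suggestion(
        level=s.level,
        start=s.start,
        end=s.start + len(s.replacement),
        replacement=text[s.start : s.end],
        note="undo: " + s.note,
    )


draft = "Teh cat sat."
fix = Suggestion(Level.CHARACTER, 0, 3, "The", "typo")

edited = apply_suggestion(draft, fix)
restored = apply_suggestion(edited, invert_suggestion(draft, fix))
print(edited, "|", restored)
```

The same shape works for a document-level restructuring suggestion; only the size of the span changes.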

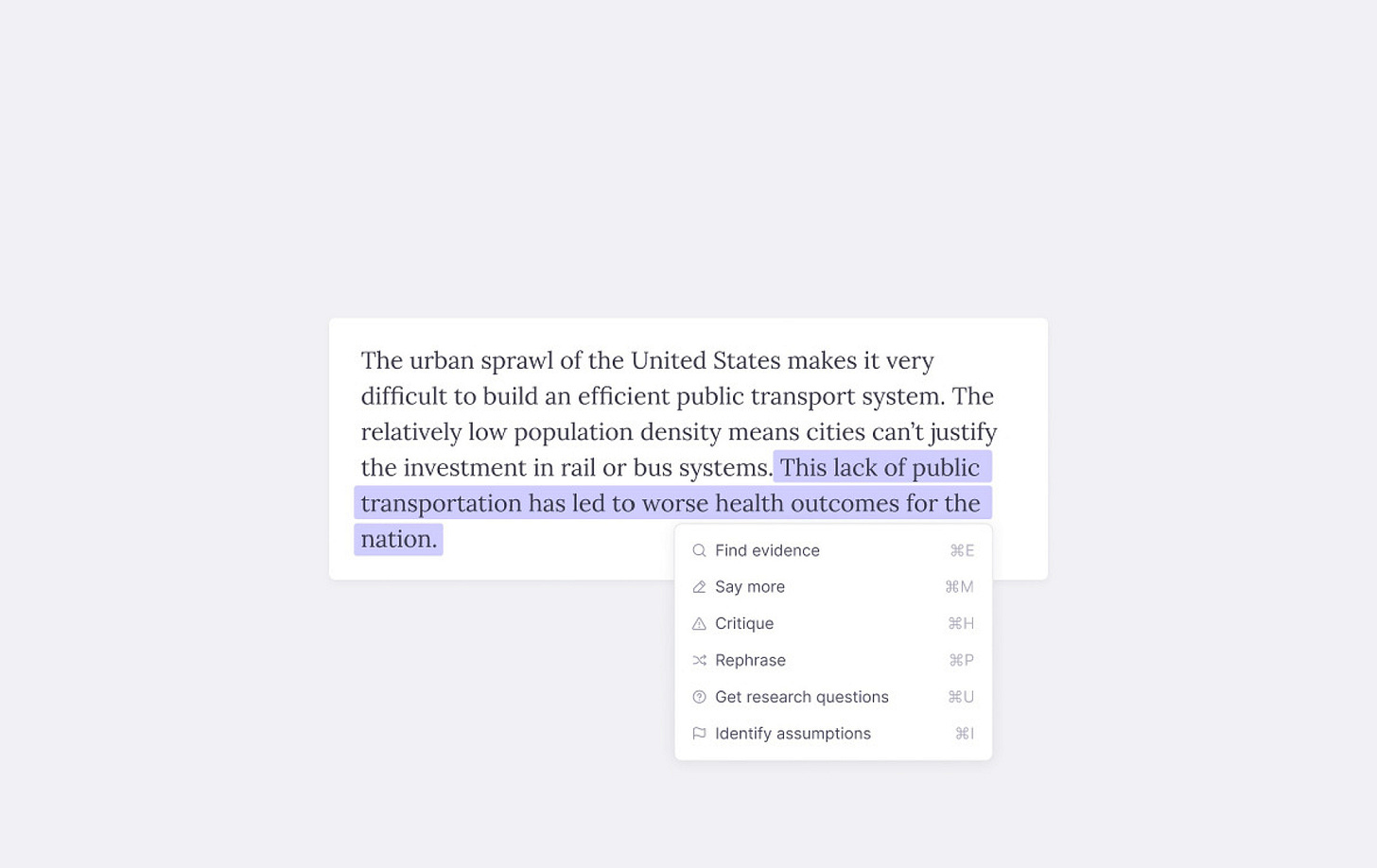

Maggie Appleton has done some excellent explorations of what an AI-powered writing tool might look like once we discard the notion of a chatbot. What if ChatGPT could toggle between various editing "hats"? Or what if we built a tool that structured the process of collecting evidence, or strengthening arguments?

Beyond the chatbot era

The good news is that we're starting to see real progress in new kinds of AI products. Shortwave, an AI-powered email client, has woven AI actions throughout its UI with buttons, keyboard shortcuts, and contextual suggestions. And Cove, a visual workspace for AI collaboration, is starting to give AI the ability to do direct manipulation itself.

I'm also optimistic about foundation model developers incorporating some of these ideas natively. ChatGPT's new Canvas feature, for instance, now offers much better direct manipulation and different specialized tools depending on whether you're writing code or prose. Its quick actions menu, in particular, is a fantastic example of what we can do if we start harnessing the power of LLMs for specific use cases.

I strongly believe that the future of AI interfaces won't be found in better chatbots, but in thoughtfully designed, domain-specific tools that make AI's capabilities directly manipulable. And getting there will require designers and engineers alike to learn and grow.

Product designers must move beyond the chatbot paradigm and consider how to tailor AI's capabilities for their specific users. That's a difficult task when we're still figuring out what AI can do, and the technology keeps evolving. How do you design interfaces for capabilities that might exist next month? What does a world with more agentic AI look like from a UX perspective?

Engineers face their own challenges. Building these new interfaces requires familiarity with an emerging AI stack - thinking about guardrails, RAG pipelines, hallucination mitigation, streaming responses, and more. I have quite a few thoughts on AI Engineering, so stay tuned for more on this topic.

Just as we moved from m.google.com to Instagram and Uber, we're on the cusp of moving from generic chat interfaces to AI-native experiences. Time will tell exactly what those experiences look like, but the path forward is clear: we need interfaces that make AI's power more accessible, intuitive, and directly manipulable than ever before.

If you’re interested in learning more about building with LLMs, I’m working on an AI engineering course, and I want your feedback to help tailor the content. Sign up for updates here.

[1] There's a straightforward reason that so many "AI-enabled" products are shoehorning a chatbot into their product: ChatGPT. The product, which initially launched as a "low key research preview," became the fastest growing product of all time, and spawned a million "ChatGPT for X" copycats - most of which were simply wrappers around ChatGPT itself.

[2] As an aside, it's crazy how good the UX is for GitHub Copilot's autocomplete - and as software developers, we're incredibly lucky (or unlucky, depending on your point of view) that it was one of the first mainstream AI tools for developers. It's a tool that you can use dozens (if not hundreds) of times per day with near zero effort, distraction, or cost!

[3] And there are those trying to invent the next wave of "obvious, everyday interfaces." Bret Victor's work on dynamic mediums shows how direct manipulation could evolve for computational thinking. His demos of "reactive documents" and "explorable explanations" point to a future where complex systems become tangible and manipulable.
