Recap: OpenAI DevDay 2025

ChatGPT's App Store, AgentKit, Sora 2 in the API, and more.

For the second time in three years (I missed last year), I had the chance to attend OpenAI’s DevDay in person - a slick, stylish event at San Francisco’s Fort Mason.

Two years ago, DevDay was all about making developers’ lives easier with better APIs and the Assistants framework. In some ways, OpenAI is still pursuing those themes; in others, the focus appears to have expanded beyond “just” developers.

Let’s break down what was announced and what it all means.

ChatGPT Apps (Take Three)

Remember plugins? Remember GPTs? Well, OpenAI is trying again with ChatGPT Apps. Third time’s the charm?

What’s new:

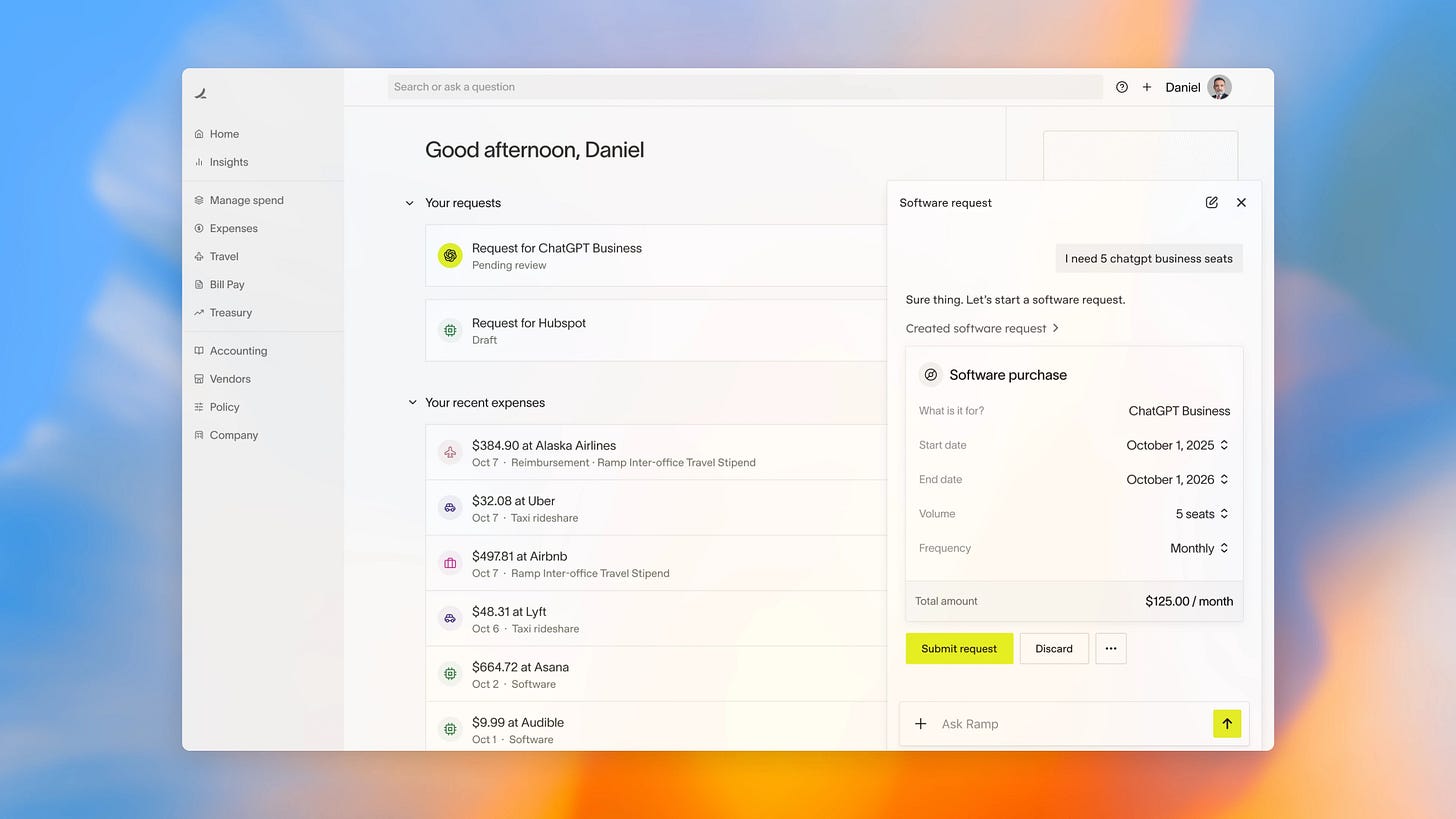

Apps are conversational interfaces that surface contextually in chats or can be invoked by name (e.g., “Spotify, make a playlist...”)

Built on the Model Context Protocol (MCP), which has real momentum in the developer community

Interactive UI elements appear inline - maps, playlists, design mockups, etc.

Two-way context transfer between the app and ChatGPT conversation

Apps can connect to backend data sources and services

Early partners include Booking.com, Canva, Coursera, Expedia, Figma, Spotify, and Zillow

The MCP foundation is a smart move. Rather than fighting the momentum behind MCP, OpenAI is embracing it while adding its own layer: OAuth flows and HTML scaffolding for richer UIs. This feels much more pragmatic than the proprietary plugin architecture from 2023. Based on some of the demos, this is going to be a handy way to add richer interaction; however, I’m sure we’ll soon feel the limits of the APIs.
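To make the MCP foundation a little more concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. Here is a minimal sketch of building such a request. The tool name `create_playlist` and its arguments are hypothetical, and nothing here shows the OAuth or UI layers OpenAI adds on top.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids, incremented per request


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call("create_playlist", {"mood": "focus", "tracks": 20})
print(json.dumps(request, indent=2))
```

An app, in this framing, is mostly a server that answers requests shaped like this one, which is why the “headless endpoint” trade-off below matters.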

But the billion-dollar question remains: Will developers actually build apps en masse? You’re essentially becoming a headless data/action endpoint in exchange for ChatGPT’s distribution. That’s a compelling trade-off if the distribution is real and the monetization is fair - both of which are TBD.

Also, some practical concerns:

How do you prevent apps from squatting on common names?

What does “higher standards for design lead to better discovery” actually mean in practice?

The monetization model matters enormously here. OpenAI mentioned the “Agentic Commerce Protocol” for instant checkout, but details are sparse. And although monetization terms for GPTs were long awaited, that effort certainly feels like it fizzled out.

AgentKit: The No-Code Agent Stack

OpenAI is packaging up everything you need to build, deploy, and optimize “agentic” workflows into a single offering called AgentKit.

What’s included:

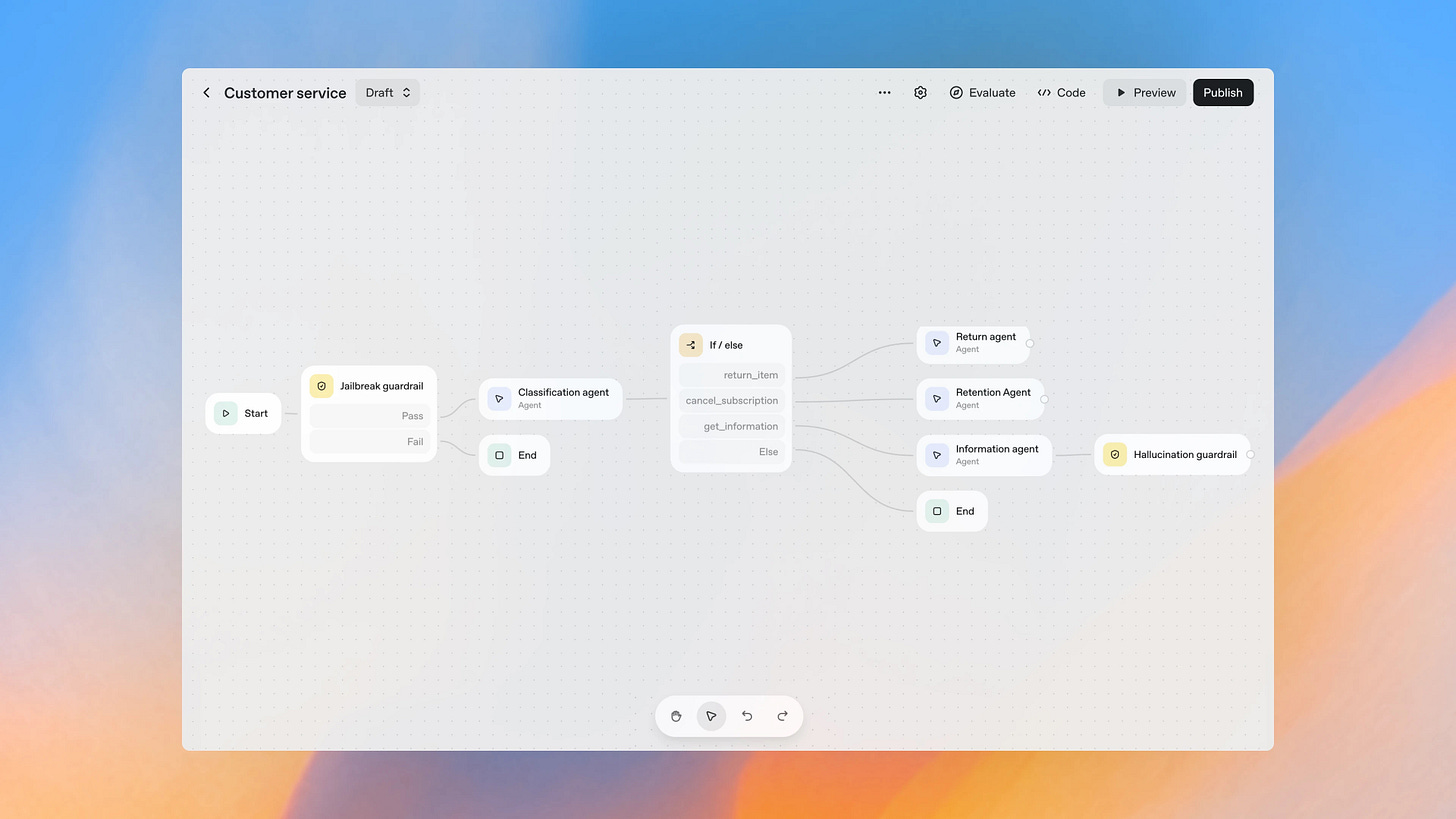

Agent Builder (beta): A visual canvas for building agents without code. Think of it as a workflow builder with versioning, preview runs, inline evals, guardrails configuration, and templates.

ChatKit (GA): An embeddable chat widget - basically Stripe Checkout for conversational AI. Handles streaming, threads, and “show the model thinking” patterns. White-label it and drop it into your app.

Evals (GA): Built-in observability with datasets, trace grading, automated prompt optimization, and - surprisingly - support for third-party models via OpenRouter.

Connector Registry (beta): A centralized admin panel for data connections across ChatGPT and the API. Supports Dropbox, Google Drive, SharePoint, Teams, plus third-party MCP connectors.

Guardrails: Open-source modular safety layer for PII masking, jailbreak detection, etc.
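For a sense of what a PII-masking guardrail does, here is a deliberately simple sketch. It is not OpenAI's Guardrails library or its API. The patterns and the `mask_pii` helper are assumptions for illustration, and real PII detection needs far more than two regexes.

```python
import re

# Illustrative patterns only; production PII detection is much broader.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text: str) -> str:
    """Replace each matched span with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


masked = mask_pii("Reach me at jane@example.com or 415-555-0100.")
print(masked)
```

The idea is that a check like this runs on text before it reaches the model (or before a response reaches the user), so it can be swapped or stacked with other checks.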

Look: a ton of headlines are about to proclaim that OpenAI is “killing hundreds of startups.” And that might be true - Agent Builder certainly looks like a lightweight n8n or Zapier clone. But it’ll likely need a decent amount of additional engineering and product investment before it becomes a serious competitor to those tools. So while those startups might not be “dead,” they’re certainly on notice.

Still, it’s good to see acknowledgement that a drag-and-drop interface isn’t everything you need. ChatKit is clever: it’s the conversational equivalent of Stripe’s payment widget, giving you a battle-tested UI without building it yourself. For B2B SaaS companies wanting to add AI chat without reinventing the wheel, this is a no-brainer. And the evals expansion will hopefully push more people to test their LLM apps rigorously, instead of relying on vibes every time there’s a prompt update.

Codex: Now With Slack and an SDK

Codex (OpenAI’s cloud coding agent) has been in preview for a while, but today it hit general availability with some developer-friendly additions.

The latest:

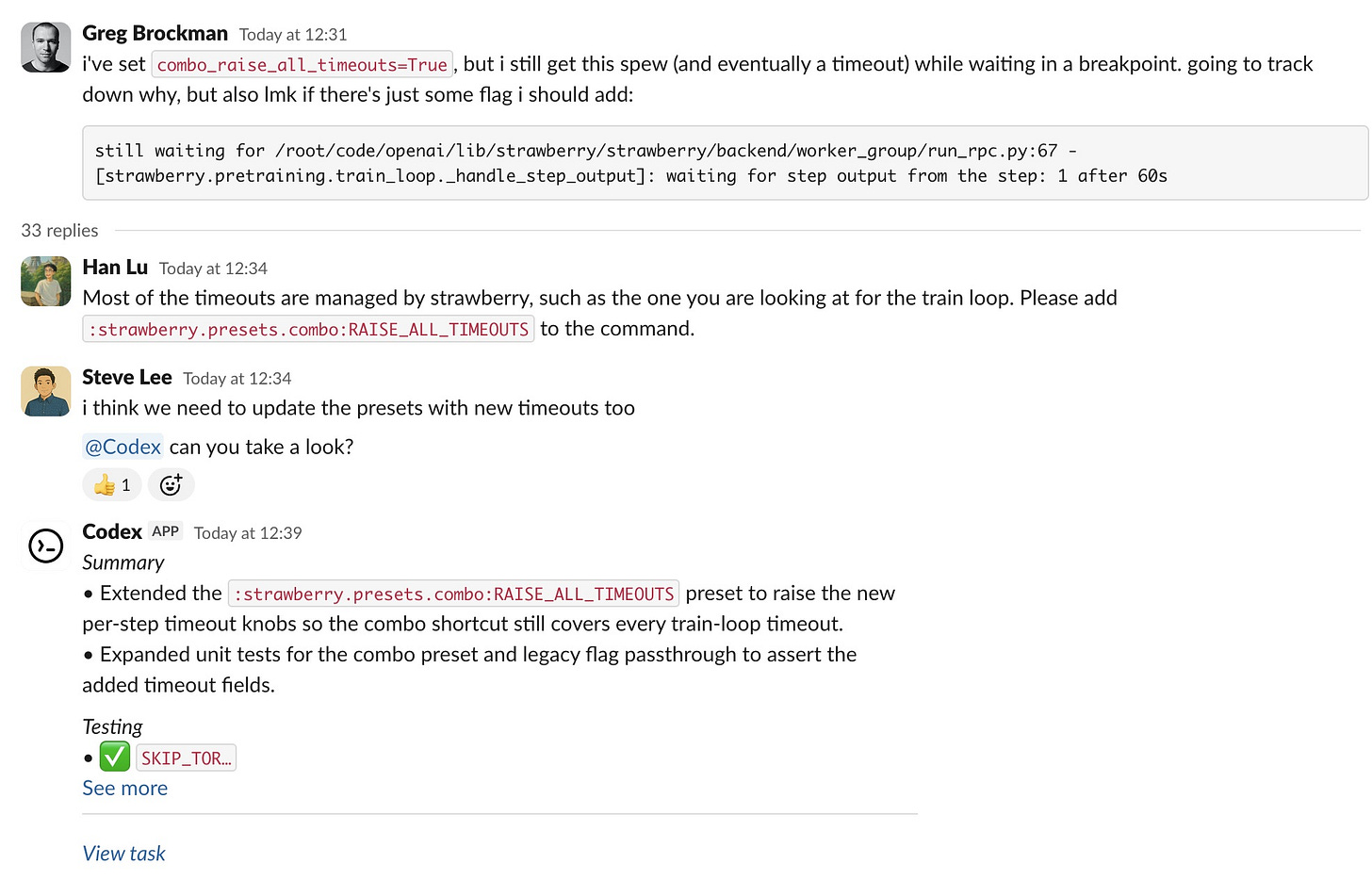

Slack integration: @Codex in Slack channels or threads. It gathers context, picks an environment, and links back to completed tasks in Codex cloud.

Codex SDK: Embed the Codex agent in your own tools and workflows. TypeScript is available now, with a GitHub Action for CI/CD pipelines.

Admin features: Environment controls, managed configuration overrides, usage analytics, and quality dashboards across CLI/IDE/web. (Business/Edu/Enterprise only.)

Codex has been iterating quickly, and it shows.[1] The Slack integration is the kind of thing that’ll make non-engineer managers very happy - delegate a task, get a link back when it’s done. For teams with less technical PMs, this lowers the barrier to leveraging Codex significantly.

I can’t currently speak to how good the Codex model is versus, say, Anthropic’s Claude or even Cursor. But it’s clear OpenAI is investing heavily in the developer experience and enterprise controls. The admin dashboards and environment management are exactly the kind of thing that’ll convince larger orgs to roll this out org-wide.

But perhaps the most interesting piece was the SDK. It turns Codex from “a tool” into “a platform component” that you can plug into your own internal tools. In the demos they showed, apps had the ability to “edit themselves” using the Codex SDK and live-reload code changes. I’m still wrapping my brain around what types of products would benefit the most from that.

New Models: The Last-Minute Bombshells

In the final 10 minutes of the keynote, OpenAI dropped four new models into the API:

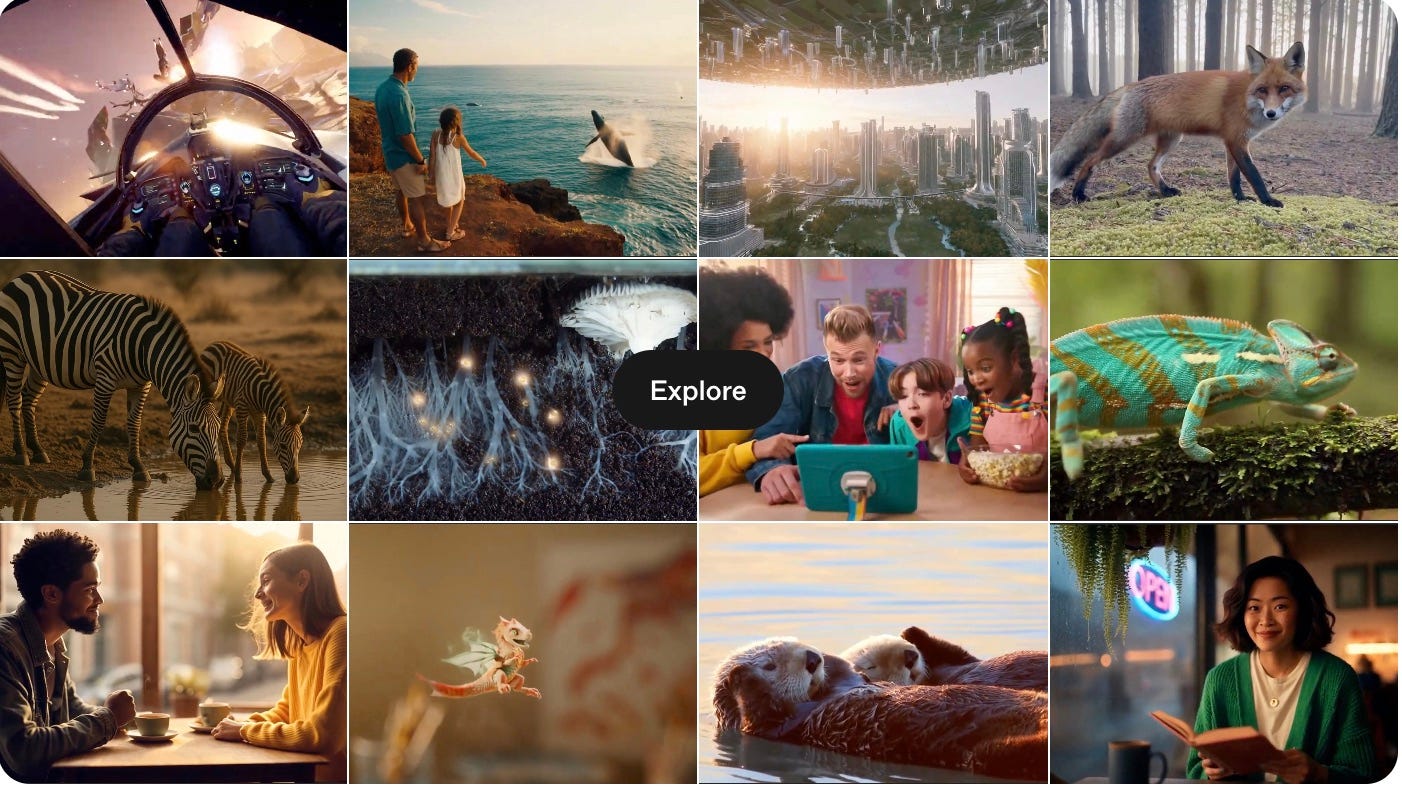

Sora 2: Next-gen video generation

GPT-5 Pro: The most intelligent reasoning model

gpt-realtime-mini: A faster, cheaper real-time model

gpt-image-1-mini: A faster, cheaper image generation model

I’m perhaps most excited about these - and judging from Twitter, the Sora 2 announcement is going to dominate viral moments for the next week.

Final Thoughts

OpenAI really, really wants an app store. This is their third attempt (plugins → GPTs → Apps), and they’re bringing heavier artillery each time. MCP as the foundation helps, but the success of this platform hinges entirely on whether developers trust the economics.

AgentKit is (probably) a startup killer. From eval tools to agent builders to embeddable chat widgets, OpenAI just bundled features that previously required multiple vendors. At this point, if you’re building tooling for almost anything in the LLM ecosystem, you need to ask: “What happens when OpenAI builds this into their platform?”

The terminology wars continue. “Agent” means different things to different people, and OpenAI’s usage here (essentially, workflow automation) is likely to frustrate those who define agents more narrowly. We were just starting to find a little consensus on what constitutes an “agent”[2] - OpenAI has made things a bit murkier now.
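For contrast with workflow automation, the narrower sense of “agent” (a model that runs tools in a loop until it reaches a goal) can be sketched in a few lines. The model below is a hard-coded stub, `fake_model`, and the single `add` tool is hypothetical. No real LLM API is called.

```python
from typing import Callable

# Hypothetical tool registry with one toy tool.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda expr: str(sum(int(n) for n in expr.split("+"))),
}


def fake_model(history: list[str]) -> dict:
    """Stand-in for an LLM: request a tool once, then give a final answer."""
    if not any(h.startswith("tool:") for h in history):
        return {"tool": "add", "input": "2+3"}
    return {"final": f"The answer is {history[-1].removeprefix('tool:')}"}


def run_agent(goal: str, max_steps: int = 5) -> str:
    history = [f"goal:{goal}"]
    for _ in range(max_steps):  # the loop: model decides, tool runs, repeat
        step = fake_model(history)
        if "final" in step:
            return step["final"]
        result = TOOLS[step["tool"]](step["input"])
        history.append(f"tool:{result}")
    return "gave up"


answer = run_agent("What is 2 + 3?")
print(answer)
```

The distinguishing feature is that the model, not a predefined graph, decides at each step whether to call another tool or stop.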

Two years ago, DevDay seemed far more focused on developers. This year, the focus has unmistakably broadened - the Slack integrations, no-code builders, and embeddable widgets are as much for PMs and managers as they are for engineers. OpenAI is chasing a bigger market than “just” developers.

Whether that strategy pays off depends on questions they still haven’t answered: Will the app store work this time? Will enterprises trust an all-in-one platform? And can they avoid the GPT Store’s fate of quietly fading into irrelevance?

See you in 2027 for DevDay 3.0 - and probably Apps 4.0.

[1] Though it’s also worth noting that this isn’t necessarily groundbreaking - Claude and Devin (among others) have had similar capabilities for a while.

[2] Simon Willison defines it as “An LLM agent runs tools in a loop to achieve a goal.”

ChatGPT Apps look like the correct implementation of in-chat applications - finally, something that feels native to the medium. The more seamless it becomes, the faster adoption will follow. It’s proactive rather than reactive.

This also feels like the thin edge of the wedge for ChatGPT Ads & Promotions. Once your chat routinely recommends apps, embeds their content, and effectively becomes the new “screen,” then preference and promotion become inevitable. At that point, OpenAI can charge for conversions instead of conversations - a much more defensible business model.

Strategically, it’s a sensible step for OpenAI. Whether it’s good for developers is less clear. It’ll depend on how open the ecosystem is, and whether OpenAI can strike the same uneasy balance Apple did with the App Store. We’ll see if they can resist the gravitational pull of control.