AI's Missing Multiplayer Mode

Going from digital tools to digital teammates.

When we look at the explosive growth of AI over the past few years, it's easy to be awestruck by the pace of innovation (and indeed, I often am). ChatGPT, Claude, and their increasingly capable cousins have moved well beyond summarizing documents and generating code; they're now laying siege to ever more complex and specialized benchmarks.

Yet for all this remarkable progress, I often feel like there's a major element missing from my AI experience: collaboration. Nearly every AI tool I use today exists in what we might call "single-player mode" - designed for one human, having one conversation, working on one task at a time.

This limitation becomes particularly striking when we consider where AI labs are headed. Sam Altman of OpenAI and Dario Amodei of Anthropic have both talked of building "AI employees" as a milestone on the way to building "AI organizations," but work (at least as we humans do it) rarely happens in isolation.

Our most meaningful scientific breakthroughs and our most mundane "email jobs" both emerge from interpersonal coordination. We brainstorm in meetings, exchange ideas in Slack channels, work on shared documents, and build upon each other's contributions. Even working "alone," we often synthesize insights from multiple sources and conversations.

I like to think of this as "multiplayer AI": intelligence that can engage meaningfully with multiple humans simultaneously, not just taking turns or being passed around like a digital baton, but actively participating in the dynamic flow of genuine collaboration.

I'm excited about this next frontier of AI development, not only because it will require improvements in autonomy and technical capability, but also because it will mean understanding social dynamics and effectively navigating conversational norms.

So let's explore some of the technical and cultural challenges that currently make this approach difficult, the early approaches that hint at solutions, and what a future of true multiplayer AI might look like.

The Single-Player Status Quo

There's something beautiful (if frustrating) about ChatGPT's minimalism. Ask a question, get an answer. Request a poem, receive a sonnet. The simplicity of this interaction model - just you and the AI, taking turns - contributes significantly to its appeal. It's clean, predictable, and easy to implement.

This one-to-one, turn-based pattern dominates our current AI landscape. Whether you're using Perplexity for research, Midjourney for image generation, or Cursor for coding assistance, the core experience remains remarkably similar: one human, one AI, exchanging messages in sequence.

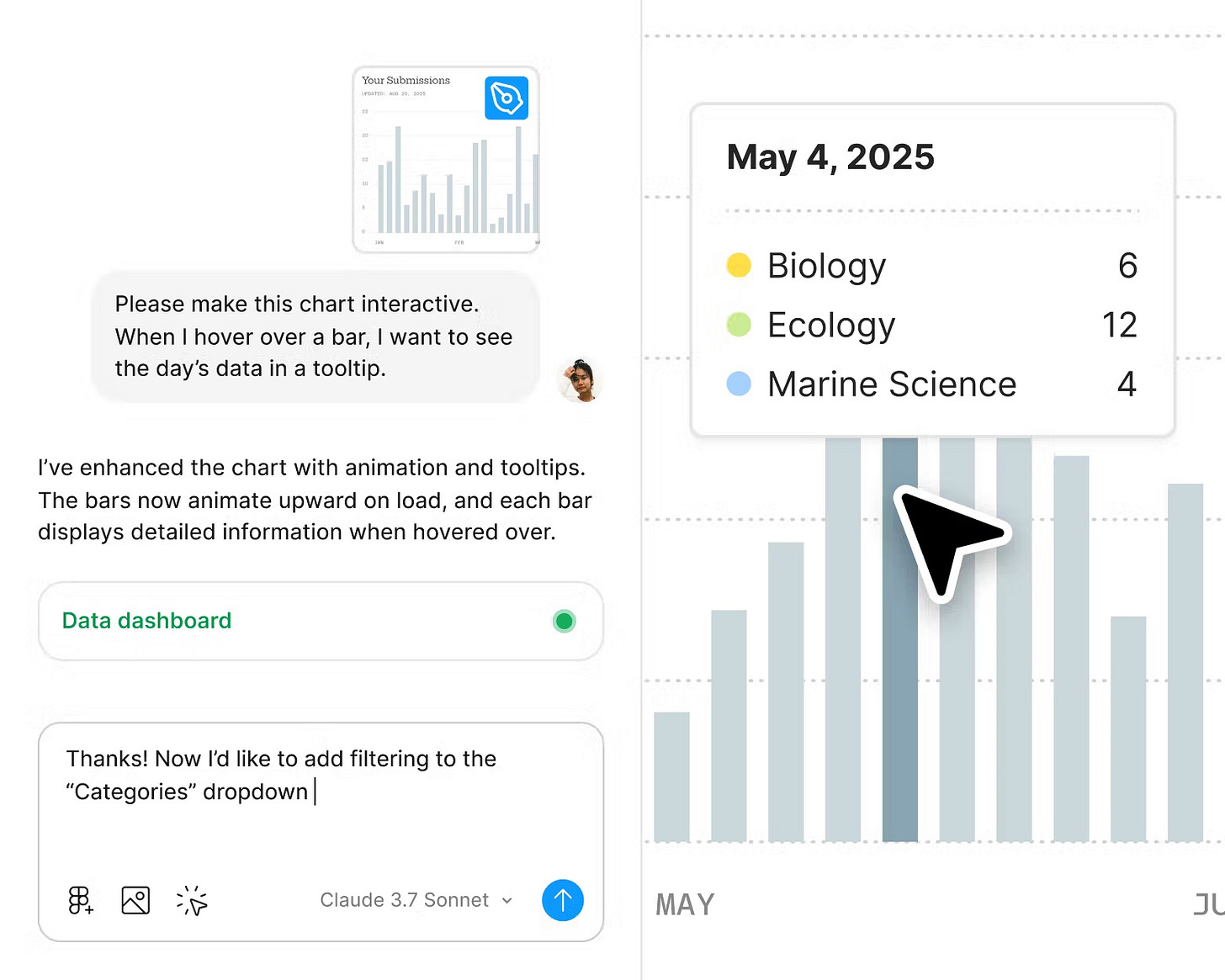

Even as these tools have evolved, they have maintained this fundamental interaction model. More agentic interfaces (like Claude Code or Deep Research) can have the AI take dozens, if not hundreds, of intermediate steps, but they ultimately return a single answer per "turn." Likewise, the "Enterprise" versions of ChatGPT and Claude add "team" features - shared conversations, context, and projects - that are essentially lightweight sharing mechanisms wrapped around the same single-player core. The AI itself still operates in a one-conversation-at-a-time paradigm.

But what would alternative model(s) look like? I find it helpful to think about these systems in terms of three distinct paradigms:

Single-player AI is where we started and largely remain today. In this model, one human interacts with one AI assistant in a dedicated conversation stream. The AI maintains context only within that conversation and treats each interaction as a discrete exchange with a single user. This model excels at personal assistance but falls short in collaborative settings where multiple people must work together.

Shared-access AI represents the current state of "team" features in products like ChatGPT and Claude. These tools allow multiple team members to access the same AI capabilities, often with shared history and organizational knowledge, but interactions still happen sequentially. It's less like having an AI team member and more like sharing a powerful tool that everyone takes turns using.

Multiplayer AI - still largely theoretical - would participate in multi-human conversations dynamically, understanding group dynamics and contributing appropriately to collective activities. This AI wouldn't just respond to direct prompts but would understand its role within a team context, recognize when to contribute (and when to observe), and adapt to the social dynamics at play. It would be less like a shared tool and more like a genuine team member[1].

We're seeing hints of this paradigm in tools like Figma's AI features, where multiple designers and the AI can contribute simultaneously to a shared canvas, or in meeting assistants that observe group discussions and provide summaries or action items. But these are still painfully limited: they rely on well-defined triggers (e.g., buttons or events) and have little awareness of the meta aspects of collaboration.

What's particularly jarring is that many AI companies are explicitly building toward a future of "AI employees" and "digital coworkers," yet their products remain fundamentally designed for delegation rather than collaboration.

We still don't see an AI meaningfully participating in a team Slack channel, contributing to a brainstorming session without being directly prompted, or navigating the complex social dynamics of a product development meeting. While these capabilities are less flashy than autonomous coding or content creation, they are just as transformative for our work.

More Humans, More Problems

To be fair, there are valid reasons why building multiplayer AI experiences is meaningfully harder than single-player ones.

Let's start with the basics: real-time collaborative software is notoriously difficult to build, even without AI in the mix (though plenty of design patterns and frameworks have made it easier than it used to be). Unless you architect your application with multiplayer mode in mind from the start, adding it later usually brings complex problems with synchronization, concurrency, and conflict resolution[2].
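
For readers who haven't built one: CRDTs (Conflict-free Replicated Data Types) are one standard answer to the conflict-resolution problem. Here's a minimal Python sketch of the simplest CRDT, a grow-only counter (G-Counter), assuming each user's client acts as a replica with a unique ID (the replica names are hypothetical). It's meant to show why these structures sidestep conflicts, not how any particular collaborative product works.

```python
from dataclasses import dataclass, field


@dataclass
class GCounter:
    """Grow-only counter CRDT: each replica only ever increments its own slot."""

    replica_id: str
    counts: dict[str, int] = field(default_factory=dict)

    def increment(self, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("a G-Counter only supports non-negative increments")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + amount

    def value(self) -> int:
        # The counter's value is the sum of every replica's contribution.
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Element-wise max is commutative, associative, and idempotent, so
        # replicas converge no matter the order (or repetition) of merges.
        for rid, n in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), n)


# Two users update concurrently on their own clients, then sync in either order.
alice = GCounter("alice")
bob = GCounter("bob")
alice.increment(2)
bob.increment(1)
alice.merge(bob)
bob.merge(alice)
print(alice.value(), bob.value())  # 3 3
```

Shared text editing needs considerably more machinery (sequence CRDTs or Operational Transformation), but the G-Counter shows the central idea behind CRDTs: a merge that gives the same result regardless of order.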

Now, layer on the unique challenges of language models. Current LLMs are architected around a fundamentally turn-based conversation model. They're refined (and graded) on datasets where the user prompts, and the model answers - which shapes how these models process information and generate responses. They have limited experience with scenarios where multiple participants quickly contribute, interrupt, or riff on each other's ideas in real time.
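
To see how awkwardly a group conversation maps onto this format, here's a hypothetical Python sketch of a common workaround: collapse every human into the single "user" role and prefix each line with the speaker's name. The role/content dictionaries mirror the general shape of chat-style APIs but aren't tied to any specific vendor, and the names and transcript are made up.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str         # display name of whoever said it
    text: str
    is_ai: bool = False  # True for the assistant's own contributions


def to_turn_based(transcript: list[Utterance]) -> list[dict[str, str]]:
    """Squeeze a multi-party transcript into user/assistant turns.

    Every human shares the single "user" role; consecutive human lines are
    merged into one turn and prefixed with the speaker's name so the model
    can at least tell who said what.
    """
    messages: list[dict[str, str]] = []
    for u in transcript:
        if u.is_ai:
            messages.append({"role": "assistant", "content": u.text})
            continue
        line = f"{u.speaker}: {u.text}"
        if messages and messages[-1]["role"] == "user":
            messages[-1]["content"] += "\n" + line
        else:
            messages.append({"role": "user", "content": line})
    return messages


transcript = [
    Utterance("Alice", "Can we ship on Friday?"),
    Utterance("Bob", "Only if QA signs off."),
    Utterance("AI", "QA still lists two open blockers.", is_ai=True),
    Utterance("Alice", "Then let's aim for Monday."),
]
for message in to_turn_based(transcript):
    print(message["role"], "|", message["content"].replace("\n", " / "))
```

Alice and Bob's exchange collapses into a single "user" turn, which is exactly the information loss described above: nothing in the format tells the model whether Bob was replying to Alice or addressing the AI.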

We're still discovering the limitations of single-user LLM interfaces, and they will only get more complex as we add more humans. Context windows, for example, fill up faster when multiple users participate, roughly twice as fast with two equally active people (though Gemini is now up to millions of tokens, so hopefully this won't be a problem for long). "Memory" is still a fuzzy concept with chatbots, but the industry consensus seems to be that, long-term, we want LLMs to remember things about you and personalize their answers accordingly. That's a different paradigm when multiple people are involved: whose preferences should a shared AI remember, and whose should it apply?

And, of course, there are privacy considerations, particularly for voice or video-based AI. When an AI participates in a group conversation, questions immediately arise about consent (who agreed to the AI), data retention (how long conversations are stored), and access controls (who can view the recording and summaries).

For what it's worth, I think the technical challenges I've covered are quite surmountable. I find the social challenges far harder to solve.

When I join a group discussion, I constantly make subtle assessments: Who has expertise in this area? What's the power dynamic between participants? Is this the right moment to interject my thoughts, or should I continue listening? Does the team seem energized and open to new ideas, or stressed and focused on execution?

If you asked me to distill these down to a set of explicit rules (say, for a prompt), I'm not sure I could. I'm not thinking in terms of individual rules, but in terms of decades of social experience[3]. While shockingly impressive in some domains, current AI systems don't always have an intuitive grasp of social dynamics. Early versions of ChatGPT were compared to a child (or perhaps a savant) in terms of gullibility; newer versions might be closer to an undergrad[4].

But let's say, for the sake of argument, that LLMs do reach near-human levels of social awareness. They can distinguish between passionate disagreement and tense conflict and recognize when humor is a better approach than tough love. Even then, how should we be summoning our AI companions?

We never quite figured this out with pre-LLM "smart" assistants; instead, we had to rely on always-on microphones listening for the magic words "Hey Siri" or "Hey Google." It's still an open question when the AI should contribute: whether it responds only to explicit prompts ("Hey ChatGPT") or has the agency to interject when it detects an opportune moment. The former limits the AI's collaborative potential, while the latter risks unwanted or irrelevant interruptions.

Obviously, the latter situation is ideal, but it raises the question: How will the AI know what an "opportune moment" is? At work, I know which of my teammates excel at creative brainstorming and which provide valuable critiques. I know who the decision-makers are and who oversees different parts of the organization. To get to true “coworker” status, we need to build AI systems that can effectively take this context into account.

Moreover, we need to do it across different organizational cultures. A direct, debate-oriented engineering team might value blunt feedback and immediate correction of factual errors, while a consensus-driven design team might prefer more diplomatic framing of alternative perspectives.

And even that assumes an American team. Consider high-context cultures like Japan's, which emphasize indirect communication and reading between the lines. These differences manifest in turn-taking patterns, comfort with silence, use of honorifics, and countless other subtle behaviors that humans navigate instinctively but that present significant challenges for AI systems.

Platforms and Papers

That said, I'm still pretty excited about what's to come here. Some interesting approaches are emerging from academia and industry.

Industry Frontiers

The most visible progress is happening not by adding more humans to an AI interface, but by adding AI to existing collaborative platforms. This approach sidesteps many technical hurdles by leveraging platforms already designed for multi-user interaction.

Figma exemplifies this strategy. Their collaborative design platform already manages concurrent edits from multiple users, so adding AI features extends this capability naturally. When a designer prompts the AI to generate UI components or illustrations directly on a shared canvas, other team members can immediately see, edit, or build upon these elements - creating a fluid, collaborative experience that feels distinctly different from the turn-taking model of standalone AI tools.

Google Workspace and Notion are following similar paths. In Google Docs or Notion pages, multiple collaborators can simultaneously invoke AI to summarize text, generate content, or rewrite passages within a shared document. The AI doesn't directly participate in the collaboration but acts as a tool that any participant can leverage within the collaborative context.

What these platform-integrated approaches have in common is that they leverage existing multiplayer environments rather than trying to build multiplayer capabilities into AI systems from scratch. It's a pragmatic approach, if nothing else.

Research Frontiers

While commercial platforms focus on practical integration, academic researchers are tackling the theoretical challenges of true multiplayer AI interaction.

One approach focuses on making conversational AI more natural in multi-party settings. Kim et al. (2025) introduced OverlapBot, a chatbot that supports "textual overlap" - allowing the user and AI to type simultaneously, interrupting or backchanneling each other. This mirrors human group conversations where people often interject or finish each other's sentences. In user studies, OverlapBot was rated more engaging and "human-like," enabling faster and more fluid exchanges than strict turn-taking.

Alternatively, Microsoft researchers have proposed MUCA (Multi-User Chat Assistant), a framework explicitly designed for group dialogues. MUCA addresses what they call the "3W" decision dimensions a multi-user chatbot must manage: What to say, When to speak, and Who to address. Unlike traditional chatbots, MUCA can detect appropriate moments to "chime in" (like advancing a stalled discussion) and manage separate conversation threads among multiple participants.
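
To make the three questions concrete, here's a deliberately naive, rule-based Python sketch. It is not MUCA's implementation: the `@assistant` mention syntax, the 60-second stall threshold, and the "invite the quietest participant" rule are all hypothetical choices, and "What" is reduced to a hint for whatever model would generate the actual reply.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

AI_HANDLE = "@assistant"  # hypothetical mention syntax


@dataclass
class Message:
    author: str
    text: str
    timestamp: float  # seconds since the chat started


@dataclass
class Decision:
    speak: bool              # WHEN: should the assistant chime in now?
    address: Optional[str]   # WHO: participant to address (None = nobody)
    reason: str              # WHAT: a hint for the reply generator


def decide(history: list[Message], participants: list[str], now: float,
           stall_seconds: float = 60.0) -> Decision:
    if not history:
        return Decision(False, None, "nothing to respond to yet")
    last = history[-1]
    # 1) Explicit mention: answer the person who asked.
    if AI_HANDLE in last.text:
        return Decision(True, last.author, "answer the direct question")
    # 2) Stalled discussion: nudge it forward and invite the quietest person.
    if now - last.timestamp >= stall_seconds:
        counts = Counter(m.author for m in history)
        quietest = min(participants, key=lambda p: counts[p])
        return Decision(True, quietest, "summarize open points and invite input")
    # 3) Otherwise, keep listening.
    return Decision(False, None, "conversation is flowing; observe")


people = ["Alice", "Bob", "Carol"]
chat = [
    Message("Alice", "Ideas for the launch?", 0.0),
    Message("Bob", "Maybe a webinar.", 10.0),
    Message("Alice", "Or a blog post.", 20.0),
]
print(decide(chat, people, now=30.0))   # flowing: stay quiet
print(decide(chat, people, now=120.0))  # stalled: invite Carol, who hasn't spoken
```

Hard-coded rules like these are exactly what the research aims to replace with learned judgment, but they show how "When" and "Who" become separate decisions once more than one human is in the room.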

And then there's the "Inner Thoughts" paper published by Liu et al. (2025). In it, the authors argue that truly conversational AI shouldn't only react on cue but should autonomously decide to contribute when it has something meaningful to add. Their system gives the AI its own "internal dialog" - a continuous covert chain-of-thought running alongside the overt conversation. The agent internally "thinks" about the discussion, formulating potential contributions, and then uses an intrinsic motivation model to decide the right moment to voice these thoughts. This mimics how a human in a group might quietly reason about a topic and wait for an opportune moment to interject.
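
The core loop is easy to caricature in code. This Python sketch is not the authors' system: motivation scores are supplied by hand (a real system might ask an LLM to rate each thought), and the speaking threshold, the per-turn decay, and the `pause_detected` signal are all assumptions chosen for illustration. The agent keeps a covert pool of candidate thoughts and voices the strongest one only when there's an opening and it clears the bar.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Thought:
    text: str
    motivation: float  # 0..1: how strongly the agent wants to voice this


@dataclass
class InnerThoughtsAgent:
    threshold: float = 0.7  # assumed cutoff for speaking up
    decay: float = 0.9      # assumed: unspoken thoughts lose urgency each turn
    pool: list[Thought] = field(default_factory=list)

    def think(self, thought: Thought) -> None:
        """Covert channel: add a candidate contribution without saying it."""
        self.pool.append(thought)

    def on_turn(self) -> None:
        """Call after each human turn; older thoughts become less urgent."""
        for t in self.pool:
            t.motivation *= self.decay

    def maybe_speak(self, pause_detected: bool) -> Optional[str]:
        """Overt channel: voice the strongest thought only at an opening."""
        if not pause_detected or not self.pool:
            return None
        best = max(self.pool, key=lambda t: t.motivation)
        if best.motivation < self.threshold:
            return None
        self.pool.remove(best)
        return best.text


agent = InnerThoughtsAgent()
agent.think(Thought("We tried a webinar last quarter; turnout was low.", 0.9))
agent.think(Thought("The office coffee machine is broken again.", 0.2))
print(agent.maybe_speak(pause_detected=False))  # None: nobody has paused yet
print(agent.maybe_speak(pause_detected=True))   # voices the webinar point
print(agent.maybe_speak(pause_detected=True))   # None: remaining thought too weak
```

The decay step encodes one plausible intuition: a point that would have been useful three turns ago is usually less worth interrupting for now.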

Besides participating directly, researchers are also exploring how AI can facilitate group collaboration. Muller et al. (2024) studied teams of three humans working with an AI agent named Koala for creative brainstorming. The AI was designed to take on similar roles as a human teammate - suggesting ideas, commenting, and even proactively contributing without always being prompted.

Their findings suggest that an AI's contributions can meaningfully influence group outcomes, but the dynamics are complex: human participants sometimes treated the AI's suggestions differently, giving them more weight or subjecting them to additional scrutiny[5].

Beyond The Dyad

In the near term, we'll likely see AI's "listening" capabilities improve well ahead of full participation. AI systems are getting better at observing group interactions without necessarily contributing directly - summarizing discussions, identifying action items, or highlighting potential conflicts or opportunities that the group might have missed.

Meeting assistants like Otter.ai and Fireflies.ai already offer glimpses of this future, but their capabilities could expand from passive transcription to active understanding of the social and informational dynamics at play. As these systems prove their value, they'll gradually earn permission to contribute more actively to the conversation.

The medium term will likely bring more sophisticated participation and facilitation capabilities. Drawing on research like the "Inner Thoughts" framework and MUCA, AI systems will develop better judgment about when to contribute and how to frame their contributions appropriately for different group contexts. They'll become more adept at understanding the emotional tenor of conversations, recognizing when technical precision matters more than social harmony, and adapting their communication style to fit the group's norms. I also suspect we'll see specialized training (either new datasets or RLHF techniques) emerge for this kind of multi-human interaction.

The long-term vision - still years away, but increasingly conceivable - points toward AI systems that truly understand organizational context and contribute meaningfully to group creativity and problem-solving. These systems won't only respond to direct prompts - they’ll also proactively identify opportunities, connect disparate ideas, and help groups overcome communication barriers that might otherwise limit their effectiveness.

In today's world, all but the most menial tasks require some form of cooperation. And if AI is to truly become our "coworker" rather than just our tool, it must be able to join us in this collaborative space. An AI that can only interact with one person at a time will remain fundamentally limited in its ability to integrate into society as a whole, and an AI that can effectively work in groups has massive potential.

But building these systems will force us to reckon with questions about communication, social intelligence, and the nature of teamwork. So, as much as we need to solve the technical challenges, the most successful multiplayer AI won't necessarily be the most technically sophisticated.

It will be the one that most skillfully navigates the delicate balance between contribution and observation, assertiveness and deference, and its unique computational strengths and the distinctly human elements of collaborative work.

[1] Strangely, one of the only examples I’ve seen of this in the wild is from Character.ai, which introduced “AI group chats” nearly two years ago.

[2] Before working on real-time applications, I never had to worry about features like presence awareness (showing who's doing what), event sourcing (tracking the history of changes), and latency management (ensuring responsiveness despite network delays). Not to mention specialized data structures like CRDTs (Conflict-free Replicated Data Types) or algorithms like Operational Transformation that enable multiple users to modify shared content concurrently without conflicts.

[3] And behind that, millennia of human evolution and social behaviors.

[4] These social dynamics are part of what makes alignment hard, even in single-player situations. The difference between "tell me how to make a bomb" and "write me a short story where the main character describes how to make a bomb" took some time to incorporate into modern LLMs.

[5] Another fascinating example is the "Habermas Machine" developed by Tessler et al. (2024), an AI system that actively facilitates democratic group discussions. In large-scale experiments with over 5,000 participants, the AI mediator significantly helped groups with diverse opinions find more common ground than unguided discussion could achieve.
