AI Roundup 015: Mr. Altman goes to Washington

May 19, 2023

Mr. Altman goes to Washington

Sam Altman, CEO of OpenAI, testified in front of the U.S. Senate this week. He acknowledged the risks and dangers of AI, and welcomed the idea of regulation. Here’s the full video.

Why it matters:

Congress feels it missed the boat on regulating social media and wants to make up for lost time. But lawmakers (for the most part) still don't have a great understanding of this new technology.

The testimony illustrated some regulatory options: an oversight agency, international safety standards, and independent audits all came up.

Some level of U.S. regulation seems inevitable at this point. Individual senators are introducing AI bills, and Senate Majority Leader Chuck Schumer (D-NY) is bringing together a bipartisan group to craft comprehensive AI legislation.

Between the lines:

Senators gave Altman a far warmer welcome than other tech CEOs. At one point, Senator John Kennedy (R-LA) asked if he'd be willing to run a hypothetical AI oversight agency.

Some of Altman's proposals are at least a little self-serving: costly licensing rules would entrench OpenAI's incumbency.

The EU is yet again forging ahead of the U.S. on tech legislation. The EU AI Act was just revised to include much stronger restrictions and requirements on AI models, including large language models like ChatGPT.

Shameless plug: After this week's exploration of concrete AI harms, next week we'll be taking a look at ideas for AI regulation.

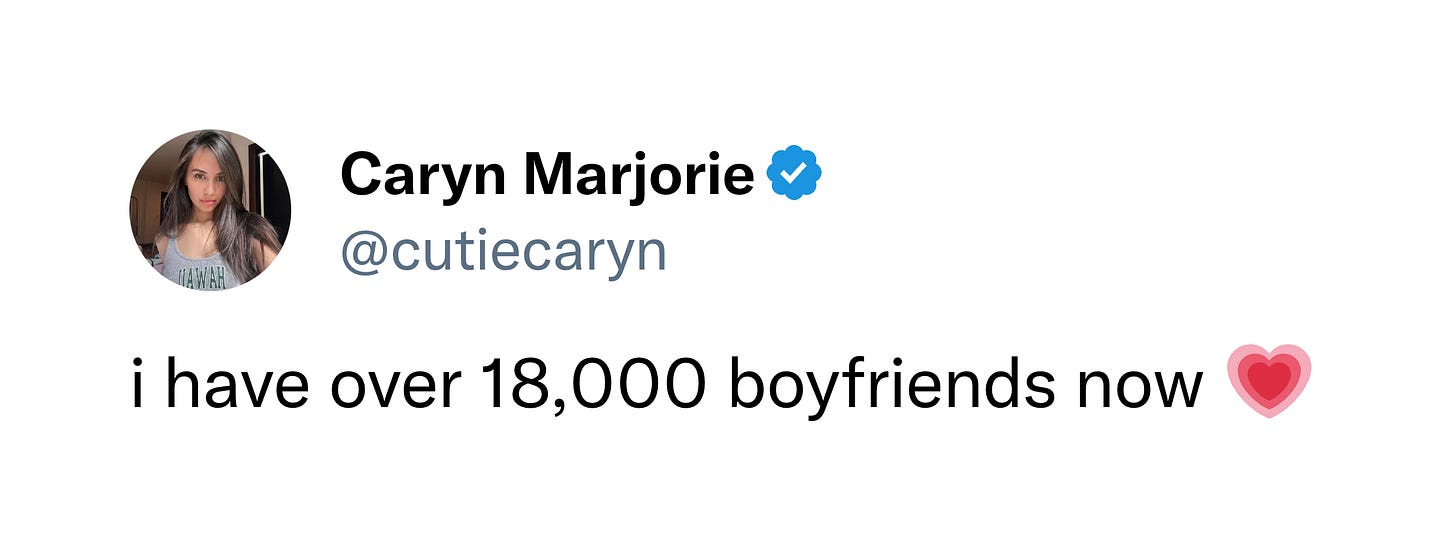

My AI girlfriend

23-year-old Snapchat influencer Caryn Marjorie created an AI version of herself. Caryn.AI charges $1/minute to chat and brought in nearly $72,000 in its first week.

The big picture:

Parasocial relationships, already exacerbated by social media, are about to get much weirder. Frankly, the only reason we don't already have fully-AI OnlyFans personas is ChatGPT’s content filter.

Companies like Replika and ForeverVoices (the startup behind Caryn.AI) are building AI companions. The ethical rules and responsibilities are so, so murky here. Despite any and all warnings, users will treat these companions as romantic partners, therapists, and more.

Almost a year ago, a Google employee was fired after claiming an LLM was sentient. He's not going to be the last person in that position. How do we handle those who believe ChatGPT is conscious?

FAANG free-for-all

After last week's Google AI extravaganza, Meta held its own (virtual) event focused on AI infrastructure. The company announced custom AI chips as well as its own code-generating AI.

Elsewhere in Big Tech:

Google plans to use generative AI for ads, customer service, and YouTube creators. And Google Cloud launches AI-powered tools for biotech and pharma companies.

Amazon's job listings hint at an AI-first initiative to re-architect search.

Zoom is bringing Anthropic's Claude to its video calling platform.

Where we’re headed:

Ben Thompson makes the case that, for Big Tech companies, AI is a sustaining innovation rather than a disruptive one.

The next frontiers in the generative AI battle appear to be AI for ads and AI on mobile.

While the open-source movement is quickly catching up, it is worth noting that many open-source LLMs are standing on the shoulders of Meta and OpenAI.

Things happen

Stability AI launches StableStudio. NYT bestseller with AI cover art sparks backlash. Debt collectors want to use AI chatbots to hustle people for money. The official ChatGPT iOS app. "[AI is a] danger to political systems, to democracy, to the very nature of truth." Wendy’s wants to pilot a robot-powered underground delivery system.