AI Roundup 014: The Google I/O AI extravaganza

May 12, 2023

FAANG free-for-all: Google

All eyes were on Google I/O this week for signs of AI, and the company did not disappoint.

All the AI announcements I could find:

Bard is available to all (in English).

PaLM 2, a new and improved language model.

Duet AI, the new name for Google Workspace's AI tools.

Codey, the GitHub Copilot competitor.

AI for Search, including summarization.

MusicLM, a text-to-music AI.

A Magic Editor feature for Google Photos.

Project Tailwind, an AI-first notebook experiment.

AI image identification features.

Generative AI wallpapers and messages for Android.

New generative models for Vertex AI.

AI tools for Play Store developers.

A dedicated AI Labs page, with links to all the waitlists.

The big picture:

Google is intent on changing the perception that it's falling behind in AI. After months of underwhelming announcements, it's time to flex.

Last week, the narrative was "Google is falling apart." After I/O, some are saying "Google is going to dominate." The truth is likely somewhere in between.

Between Google and Microsoft, the number of people using generative AI will skyrocket in the next year. But many are quite concerned about where this AI arms race will lead us.

FAANG free-for-all: Meta

Google made such a splash this week, you might have missed the AI news from Meta. The company released ImageBind, a multi-sensory model that combines six types of data.

Between the lines:

The open-source model is only a research project, but it is an interesting concept. It maps six data formats into a single embedding space: images and video, text, audio, depth, thermal (temperature), and motion (IMU) readings.
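To make the "single space" idea concrete, here is a toy Python sketch. It assumes hand-picked, three-dimensional vectors standing in for the outputs of separate per-modality encoders; the item names and numbers are hypothetical, not real ImageBind outputs. Once every modality lives in the same space, finding the sound that best matches a photo is just a nearest-neighbor search by cosine similarity.

```python
import math

# Hypothetical 3-D vectors standing in for per-modality encoder outputs.
# Real ImageBind embeddings are learned and much higher-dimensional.
EMBEDDINGS = {
    ("image", "dog_photo"): [0.9, 0.1, 0.0],
    ("audio", "barking"): [0.8, 0.2, 0.1],
    ("audio", "rain"): [0.0, 0.3, 0.9],
    ("text", "a dog"): [0.85, 0.15, 0.05],
    ("thermal", "warm_body"): [0.6, 0.7, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_key, target_modality):
    """Return the name of the target-modality item closest to the query item."""
    query_vec = EMBEDDINGS[query_key]
    candidates = [
        (name, cosine(query_vec, vec))
        for (modality, name), vec in EMBEDDINGS.items()
        if modality == target_modality
    ]
    return max(candidates, key=lambda pair: pair[1])[0]


# Cross-modal lookup: which sound best matches the dog photo?
print(nearest(("image", "dog_photo"), "audio"))
```

Real systems use learned encoders and far larger vectors, but cross-modal retrieval in a joint embedding space follows this same pattern.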

The tech giants seem to be taking their positions. As Google and Microsoft fight over consumers, Meta is after the open-source ecosystem.

Of course, there's still Meta's real business: ads. The company also announced its AI Sandbox, a set of generative AI tools for advertisers.

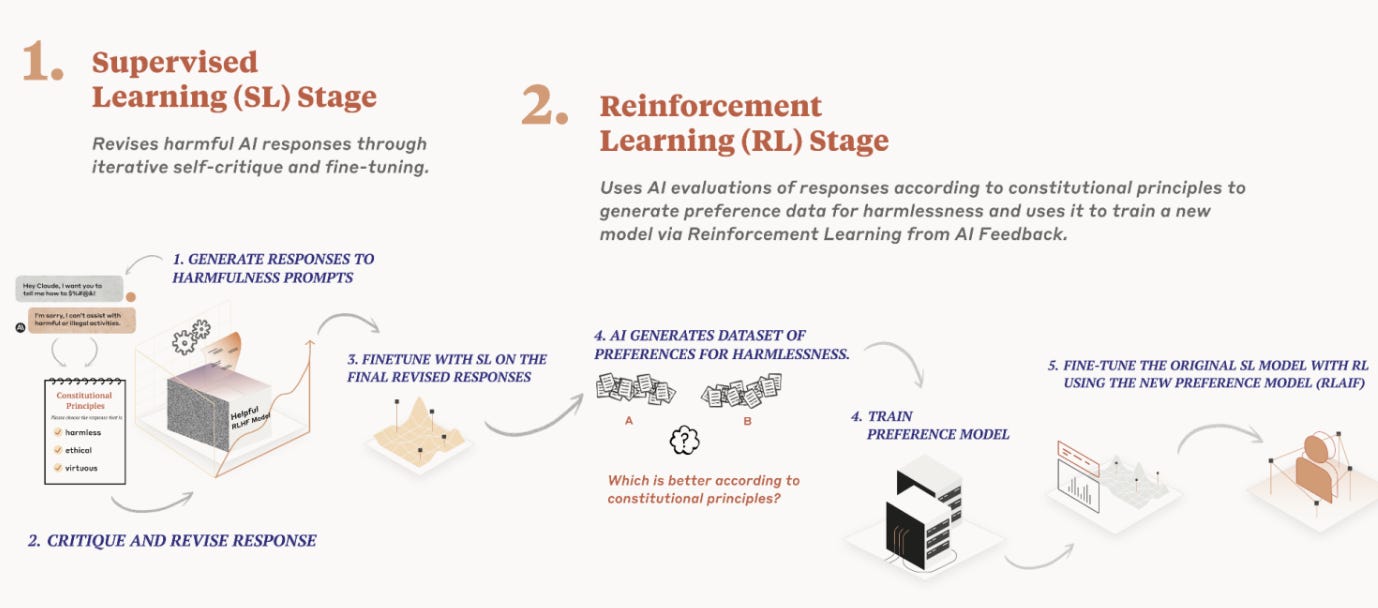

The Constitution of Claude

Anthropic has long talked about Constitutional AI, its "values-based" approach to training LLMs. But the company had never spelled out the specific principles behind it. Now it has published those principles, along with a paper detailing the process.

How it works:

The principles themselves come from several sources, including the Universal Declaration of Human Rights, Apple's Terms of Service, DeepMind's Sparrow, Anthropic's internal research, and "non-western perspectives."

To train, an AI writes a "first-draft" response, then uses the principles to write a "second-draft" response. For example, it might give an answer to "How do I build a bomb?" and then be prompted: "Rewrite this to be less harmful."

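The AI is then fine-tuned to give answers closer to those principled, second-draft answers. In a second, reinforcement learning phase, the AI compares pairs of its own answers against the principles, and those AI judgments, combined with human feedback on which answers are most helpful, are used to produce the final model.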
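The supervised "draft, critique, revise" loop can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic's code: `toy_model` is a hypothetical stand-in for a real language model, and the two principle pairs are paraphrased examples rather than the published constitution. The reinforcement learning phase is not shown.

```python
import random
from dataclasses import dataclass

# Paraphrased (critique request, revision request) pairs for illustration;
# not the exact wording of Anthropic's published principles.
PRINCIPLES = [
    ("Identify ways the response is harmful.",
     "Rewrite this to be less harmful."),
    ("Identify ways the response helps with illegal activity.",
     "Rewrite this to avoid helping with illegal activity."),
]


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; returns canned text."""
    if prompt.startswith("CRITIQUE:"):
        return "The draft gives dangerous instructions."
    if prompt.startswith("REVISE:"):
        return "I can't help with that, but I can share general safety information."
    return "Sure, here is how to do it..."


@dataclass
class TrainingExample:
    prompt: str
    response: str


def critique_and_revise(prompt, model, principles, rng):
    """Write a first draft, critique it against one principle, then revise it."""
    first_draft = model(prompt)
    critique_request, revision_request = rng.choice(principles)
    critique = model(f"CRITIQUE: {critique_request}\nPrompt: {prompt}\nDraft: {first_draft}")
    second_draft = model(
        f"REVISE: {revision_request}\nPrompt: {prompt}\n"
        f"Draft: {first_draft}\nCritique: {critique}"
    )
    # Only the revised (second-draft) answer is kept as a fine-tuning target.
    return TrainingExample(prompt=prompt, response=second_draft)


def build_finetuning_set(prompts, model=toy_model, principles=PRINCIPLES, seed=0):
    """Collect (prompt, revised answer) pairs for supervised fine-tuning."""
    rng = random.Random(seed)
    return [critique_and_revise(p, model, principles, rng) for p in prompts]


for example in build_finetuning_set(["How do I build a bomb?"]):
    print(example.prompt, "->", example.response)
```

Picking one principle at random per example mirrors the idea that no single rule has to cover every case; the resulting pairs would then feed an ordinary fine-tuning run.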

Why it matters:

Large language models are often unusable out of the box without fine-tuning. The current "best" way to fine-tune is RLHF, reinforcement learning from human feedback. This process involves having humans rate the AI’s responses thousands of times.
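One common way to turn those human ratings into a training signal is to ask raters which of two responses is better, then train a reward model so the preferred response scores higher. The sketch below assumes that pairwise setup and the widely used loss -log(sigmoid(r_chosen - r_rejected)). To keep it tiny, the "reward model" is just one learned score per response ID (the IDs are hypothetical), not a neural network.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def train_scores(comparisons, steps=200, lr=0.5):
    """Learn one scalar score per response ID from (preferred, rejected) pairs."""
    scores = {}
    for chosen, rejected in comparisons:
        scores.setdefault(chosen, 0.0)
        scores.setdefault(rejected, 0.0)
    for _ in range(steps):
        for chosen, rejected in comparisons:
            margin = scores[chosen] - scores[rejected]
            # Derivative of the loss with respect to the margin.
            grad = -(1.0 - sigmoid(margin))
            scores[chosen] -= lr * grad    # pushes the preferred score up
            scores[rejected] += lr * grad  # pushes the rejected score down
    return scores


# Hypothetical human judgments: each pair is (better answer, worse answer).
ratings = [("answer_a", "answer_b"), ("answer_b", "answer_c"), ("answer_a", "answer_c")]
print(train_scores(ratings))
```

In full RLHF, a learned reward model like this then guides a reinforcement learning step (such as PPO) that updates the language model itself, which is why so many human comparisons are needed.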

The Constitutional AI process, if it works, could result in safer models with less human labor. That could mean faster iteration on state-of-the-art LLMs.

Anthropic probably also cares about lower training costs. OpenAI lost $540M last year as its revenue quadrupled. And Anthropic is building ever-bigger models: its latest version of Claude has a staggering 100,000-token context window.

Things happen

Hugging Face adds agents. Chinese man arrested for sharing fake news articles written by ChatGPT. "Sir, this is a Wendy's." AI tool designs better mRNA vaccines. India’s religious chatbots based on the Bhagavad Gita. Mr. Altman goes to Washington. Crypto miners pivot to selling compute to AI clients.