Why now?

What's behind our current AI boom?

Back in May, Sam Altman tweeted "summer is coming." It was seemingly 1) a Game of Thrones reference and 2) a nod to previous "AI winters," implying that we'd be doing the opposite of stagnating.

Since his tweet, we've had the following developments (among others):

The launch of Llama 2, Stable Diffusion XL, and many more foundation models.

Week after week of new AI products and announcements from Big Tech.

Billions in VC dollars poured into hundreds of AI companies.

It's been a monumental year for AI, which you're probably aware of if you're reading this. But a question has been bugging me for a while:

Why now?

Is this time different?

AI skeptics sometimes argue that we've had breakthroughs in machine learning before, but they're inevitably followed by one of those AI winters I mentioned. They might be right - I wasn't a contributing member of the workforce during the last big winter in the late 90s.

But I am old enough to remember when Google Translate outclassed all of the other translation services available at the time. Or when AlphaGo defeated Lee Sodol, one of the strongest players in the history of Go. Or when we used to bring up the Turing Test whenever a new chatbot launched... we don't really do that anymore.

I've been around for a few technology cycles, and this one feels different1. If you're paying attention, if you know where to look, the rate of improvement is astonishing. It feels like we're at an inflection point, like we're moving at a breakneck pace that doesn't seem to be slowing down all that much. But what makes this moment so special?

It's easy to point to ChatGPT, or the "Attention is all you need" paper, and say it's the root cause. But I'm not sure that's true.

Rather, I believe ChatGPT is the culmination of two different, multi-decade trends: data and hardware. As well as a key invention: Transformers.

Welcome to the internet

One reason that this is a multi-decade trend is because ever-growing datasets have led to wins in AI for some time now.

Let's go back to that Google Translate example. Previous translation algorithms were primarily rules-based - they tried to code all of the rules of a language explicitly. That meant defining words, and grammar, and orthography, and baking it all into a translation program.

The problem, of course, is that programs built this way are both very expensive and very brittle. New syntax or slang that you haven't accounted for will break things. You need to set up rules for every language you want to use (and in some cases, every pair of languages that you want to use).

Google Translate took a different approach: statistical machine translation. By using transcripts of the same text in both English and other languages, it built a model that analyzed the words that appeared near each other in different translations, and made a best guess based on all of the examples that it had learned from2.

What enabled this approach was the availability of United Nations and European Parliament transcripts: a digital Rosetta Stone, accessible by anyone with an internet connection. Putting something like this together would have been near impossible before the web. But we've found that just adding more training data has led to AI advances in many areas, like video games, self-driving cars, or cancer screening.

But transcriptions of UN meetings is a sliver of what the internet has created, in terms of content. You've got efforts like ImageNet, a database of over 14 million images hand-annotated for training purposes. Or Wikipedia, a collection of 6.7 million articles, images, and diagrams compiled and categorized in a structured system.

And then there's social media. Google once estimated that in the modern era, from the invention of the printing press in 1440 until the year 2010, there were roughly 130 million bound, published books. We now generate about the same amount of text on Facebook and Twitter every month. And that's not counting the quarter-million hours of video uploaded to YouTube every day, or TikTok, or Reddit, or Snapchat... you get the point.

The internet has allowed us to aggregate content and data at a size no human can fully comprehend. And we have been throwing it, in ever-growing chunks, at our machine learning models.

Of course, eventually, you reach diminishing returns with the volume of your training data. - size isn’t everything. Beyond a certain point, the quality of the data becomes more important than the quantity. But for much of the last two decades, quantity has had a quality all its own.

Long live Moore's Law

But as important as big data became, the role of the GPU was just as significant.

Moore's Law famously states that the number of transistors on a chip (that is, its computing power) will double every two years. And for most of the last six decades, that's held true.

In the last few years though, we've started to run into the limits of Moore's Law. Some will disagree with this, but it's rapidly becoming a problem of physics, not engineering. A single atom of silicon is 0.5 nanometers wide. The latest chip fabs market themselves as having "5 nanometer" and even "3 nanometer" capabilities3. I'm no Math PhD, but there's only so many times you can halve a 5nm transistor before you get to half a nanometer.

Luckily, we've found some creative ways to deal with these limits. The newest, beefiest CPUs are essentially "stacks" of chips - we ran out of real estate, so we started to build up. But we've also been gaining processing wins in the form of GPUs.

GPUs originally started as graphics processing units, designed to render arcade games in real-time. But as they evolved, they could handle more general-purpose, memory-intensive tasks. Rather than needing to do complex math and logic on a single chip, GPUs handled repetitive workloads at scale. And that aspect, scale, has made GPUs so invaluable.

While we have multi-threaded or multi-core CPUs, GPUs let us parallelize computational workloads in a way that we hadn't done before. Again, the earliest examples of this were graphics use cases - gaming and animation studios had "render farms," hundreds or thousands of GPUs that would generate scenes from Pixar movies and Blizzard games overnight.

But as deep learning has evolved, we've realized we can apply the same approach to training models. Just like with render farms, AI companies are building “compute clusters,” hundreds or thousands of GPUs that train large language models - often raising billions of dollars to do so.

Yet, as crucial as improvements to data and hardware have been, it took one more breakthrough to unlock their full potential: Transformers.

Robots in disguise

The Transformer architecture was first proposed by Google researchers in 2017, in the now landmark paper, “Attention is all you need.” At the time, the architecture represented a new, more efficient way to train language models.

Before Transformers, there were multiple approaches to training - RNNs, CNNs, and LSTMs, to name a few. However, each of these approaches ran into limitations.

The first was speed. These networks learned sequentially, making training runs slow. For the network to learn the relationship between words in a sentence, for example, it had to process each word at a time. Training on large datasets took ages, and it seemed like the smarter approach was to build a better algorithm rather than throw more data at the problem.

The second was memory. Previous architectures relied on "hidden states" to keep track of the relationships between inputs. But as the size of the inputs grew, so did the hidden states, often at an exponential rate. The approach quickly became unwieldy for learning sentences longer than a few dozen words - as a result, the AIs could generate grammatically-correct text that made no sense narratively.

But with the Transformer, we solved both of those problems. Without going into the technical details, Transformers (as well as Attention, an earlier research breakthrough) allowed us to learn relationships between words (or images, or audio, or data) simultaneously, rather than sequentially.

It meant that training speeds and memory limitations were no longer the main bottleneck4. Transformers meant that we were able to fully leverage the vastness of the internet, and the advancements in distributed computing, for the first time. All three were necessary to get to this moment: the content and GPUs didn't matter without the Transformer, and the Transformer didn't matter without the content and GPUs.

To be fair, it took a few years for the ramifications of this to play out. BERT, the first language model using transformers, was released in 2018, the year after. As was GPT-1 (Generative Pre-trained Transformer), a research model from a small company named OpenAI.

But the architecture set the stage for the explosion of AI advancements that we're seeing today.

Everything, everywhere, all at once

Ultimately, the legacy of the Transformer is something that its inventors likely didn't appreciate at the time. They were primarily concerned with machine translation, and cared mostly about benchmarks against other research models.

But as it turns out, efficiently learning the relationships between pieces of data is useful for many, many domains beyond translation. Chatbots, which dominate the current moment, weren't on their radar. Nor was generating images, audio, video, or whatever else we're seeing in generative AI. Transformers turned out to be so good, and so generalizable, that dozens of use cases are being rebuilt around them.

When I was at college, I remember each area of machine learning research having different approaches. Natural language processing, image recognition, and text-to-speech all had disparate architectures and algorithms. But with so many sub-disciplines of ML now reoriented around Transformers, we're creating a positive feedback loop of innovation. New ideas in image generation can lead to breakthroughs in LLMs (large language models), and vice versa.

In my mind, we're seeing (what feels like) landmark results in multiple areas every other week because they're both riding, and contributing to, the same wave: the momentum of the Transformer architecture.

It helps, of course, that there's so much commercial interest in AI right now5. For better or worse, startups and Big Tech companies are incentivized to move far faster than academics, which adds to the breakneck pace of product launches. It's one thing when a major new paper gets published - it's another when you're seeing AI improve, in near real-time, as you use it.

But it's still unclear who will win - besides Nvidia - because it's still unclear how much further AI has to go.

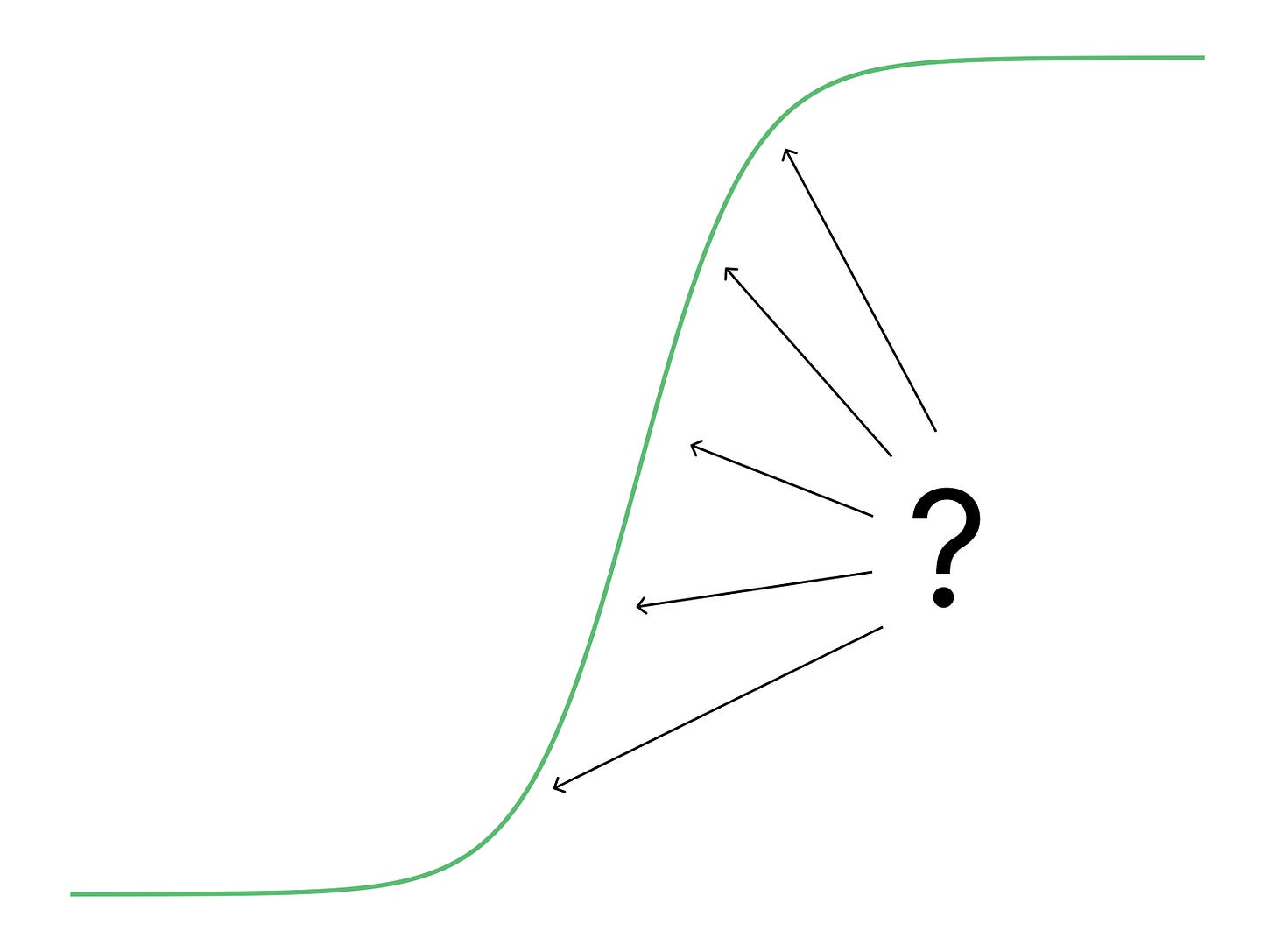

Riding the sigmoid

The adoption of every new technology, from landlines to laptops, takes the shape of an S-curve, or sigmoid. It starts off slow, while the tech is in its infancy. Then, it begins to grow exponentially as mass adoption occurs. And finally, inevitably, it plateaus as the technology reaches maturity.

With the AI of today (ChatGPT and friends), we don't know where we are on the S-curve. In the coming years, we're collectively going to find out. And I think it depends on the answers to a few questions:

What comes after Transformers? The architecture has gotten us this far, but we're already discovering its limitations. At a certain level of complexity, the computing requirements become enormous, requiring tens (if not hundreds) of millions of dollars to train models. And even the best models can't "remember" more than tens of thousands of words of conversation - for now. Researchers are looking for new breakthroughs to make LLMs more efficient and to solve other core problems like hallucinations and context windows.

Where are the limits of our data? Internet data, despite its vastness, is quite messy. While we can certainly add more of it, there are often diminishing returns on just the size of a dataset - eventually, quality matters much more. The best models will likely have curated (and expensive) datasets. Or, we might turn to synthetic data - use AI models to generate training data for other AI models. Many are experimenting with this idea, though the jury's still out on its effectiveness.

How much compute should we plan for? Nvidia is absolutely raking it in at the moment - their GPUs are an incredibly hot commodity, and they literally cannot seem to make enough of them. It will take time, but I'm confident significantly more computing power will be available in the coming years - but how much, exactly, is a big question. The difference between 100x more GPU capacity for AI models, vs 10000x more capacity, can lead to quite different futures.

What wins exist beyond training? Training ever-larger models, even if they become cheaper, won't necessarily make them better from a usability standpoint. One of ChatGPT's breakthroughs was RLHF (reinforcement learning from human feedback), which allowed it to follow instructions and avoid harmful responses. And RLHF, while an important discovery, didn't have to do with the core model's training data or hardware - it was layered on top. In our quest for AGI, we might find the next set of breakthroughs lies somewhere outside of our training parameters.

What now?

I genuinely don't know where we are on the S-curve. We might be near the top, as we find out that GPT-4 is close to the best we can do for a while, with some incremental improvements. To be clear, that would still be a huge deal - even if we stopped all new AI progress today, it would take us years to fully exploit and integrate the capabilities we currently have.

Of course, we might also be near the bottom. We might just have barely reached the first part of that big vertical line, and we're all in for a wild few years. The AI true believers certainly think so - to them, AGI is less than a decade away, so why bother working on anything else?

I'm not sure anyone truly knows where we’re going to end up, regardless of how confident they are. I do know that we're in the middle of a new technology wave. And whether it turns out to be a tidal wave or a tsunami, the important thing, to me, is to keep my head above water, and not get pulled under by the deluge of new AI developments. Just keep swimming.

It is, of course, overhyped in this moment, and will most likely go through some eventual disillusionment. Not all AI press releases are created equal.

One limitation was that it used English as a middle-man for almost all the translations: going from Spanish to Korean would mean translating into English first, meaning a loss of accuracy for certain words (like "you") that have multiple representations in other languages.

Though, like "5G," the exact meaning is becoming fuzzy and is slowly devolving into a marketing term.

At least, that's what it meant at the time. We've since built such gargantuan networks that these bottlenecks are coming back into play.

ChatGPT deserves a lot of the credit for that - it sparked the current hype-wave as people discovered how far the underlying tech had come.

Another trend I believe was a key contributing factor is big tech's adoption and widespread distribution of LLMs. Think about a scenario where OpenAI doesn't launch ChatGPT and Microsoft keeps their Bing chatbot under wraps. In that setting, many developers might feel they have to fix the "hallucination" issue before releasing anything.

Sure, Microsoft, OpenAI, and later Google faced criticism for users' early encounters with LLMs going off-track [0], but thanks to that, and only a year after ChatGPT's launch, even the average user is aware that hallucinations can occur in AI responses. This widespread understanding helps LLM builders and incumbents deploy LLM-powered apps faster and with less scrutiny.

[0] https://fortune.com/2023/02/21/bing-microsoft-sydney-chatgpt-openai-controversy-toxic-a-i-risk + https://www.npr.org/2023/02/09/1155650909/google-chatbot--error-bard-shares

Thank you for this summary. For me, the crucial part is, "But as it turns out, efficiently learning the relationships between pieces of data is useful for many, many domains beyond translation." As the dust settles, for me, it becomes increasingly evident that the quality of data and the ability to accurately establish these relationships are paramount for the next phase.

In addition to the importance of data quality, it is worth delving into the significance of semantic mapping in this context. Semantic mapping plays a pivotal role in enabling AI systems to not only understand data relationships but also to derive meaningful insights and context from diverse datasets.