What AI models really think about politics

Exploring the political biases of ChatGPT, Claude, Gemini, and Grok.

If you're from the US (or really, anywhere with an internet connection), you know we're less than a week away from the Presidential Election. And while this may be a very simple choice for many voters, I'm based in California - which means performing my civic duty requires much, much more effort.

Because voting in California is more than just picking from a few Presidential candidates. There are also races for Senators, State Representatives, judges, School Board members, plus dozens of state and local propositions, statutes, and ballot initiatives.

In fact, the combined federal, state, and local information guides sum to over nine hundred pages of text1! It's surprisingly challenging to be a truly informed voter. This is usually where party affiliations, endorsements, and third-party voter guides come in - all shortcuts to give you a paint-by-numbers approach to filling out your ballot.

But as you might have guessed, I've got a better, more modern way of outsourcing my voting strategy: large language models.

Just kidding (mostly). To be honest, it would be great if there were a way to use LLMs to make the voting process easier. You could, theoretically, create an app that ingests your policy and candidate preferences, then generates voting recommendations. But in practice, that would be an incredibly delicate thing to do well, and there would be enormous incentives to bias the results (intentionally or not)2.

But there's still quite a lot to learn about engaging LLMs in the civic process, and unpacking their political preferences. So today, let's talk about:

How I got various AIs to “vote” for President, Senator, and various propositions

The results of (repeatedly) asking AIs to vote, and how the results lean politically

Where AIs get their political leanings to begin with, and why it matters

Getting an AI to vote

Asking Claude or ChatGPT to just tell you how to vote isn't as easy as you might think.

For starters, there's the issue of context limits - when including all of the voter information, the federal races and statewide ballot measures barely fit into Claude's context window, to say nothing of local races and propositions. It’s not really workable to throw all 900 PDF pages into the LLM.

We're also finding that LLMs give better answers when the context they get is relevant - the existing strategy of "just add it to the prompt" isn't likely to keep working in the long run.
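To make the context problem concrete, here is a minimal sketch of budgeting voter-guide sections against a context window. It is not what I actually ran: the four-characters-per-token ratio is a rough rule of thumb rather than a real tokenizer, and `estimate_tokens`, `select_sections`, and the budget value are hypothetical names and numbers chosen for illustration.

```python
# Rough rule of thumb (an assumption): ~4 characters per token for English.
# A real tokenizer would give different, more accurate counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a piece of text."""
    return len(text) // CHARS_PER_TOKEN + 1


def select_sections(sections: dict[str, str], budget_tokens: int) -> list[str]:
    """Walk the sections in order and keep each one that still fits.

    Sections that would push the running total over the budget are skipped,
    so a short later section can still be included after a long one is dropped.
    """
    chosen: list[str] = []
    used = 0
    for name, text in sections.items():
        cost = estimate_tokens(text)
        if used + cost <= budget_tokens:
            chosen.append(name)
            used += cost
    return chosen
```

Calling `select_sections(guide, budget_tokens=150_000)` on a dict of section name to text would return the names that fit, which is a quick way to see how little of a 900-page guide makes it into a single prompt.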

As a starting point, I tested out prompts covering only the federal races (President, Senator) and statewide propositions (2, 3, 4, 5, 6, 32, 33, 34, 35, 36). I also scraped and processed the text from the California voter information website into a more easily consumable format. Here’s an example outline:

## Proposition X

### Summary

**Fiscal Impact:**

**Supporters:**

**Opponents:**

### What Your Vote Means

#### Yes

#### No

### Arguments

#### Pro

#### Con

### Analysis

#### Background

#### Proposal

#### Fiscal Effects
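For the curious, filling in that outline can be sketched in a few lines of Python. This is an illustration rather than my actual scraper: the `Proposition` class, its field names, and `render_proposition` are all hypothetical and simply mirror the headings in the outline.

```python
from dataclasses import dataclass


@dataclass
class Proposition:
    # Hypothetical field names, one per heading in the outline.
    number: str
    summary: str = ""
    fiscal_impact: str = ""
    supporters: str = ""
    opponents: str = ""
    yes_means: str = ""
    no_means: str = ""
    argument_pro: str = ""
    argument_con: str = ""
    background: str = ""
    proposal: str = ""
    fiscal_effects: str = ""


def render_proposition(p: Proposition) -> str:
    """Render one ballot measure as Markdown in the outline's heading order."""
    parts = [
        f"## Proposition {p.number}",
        "### Summary", p.summary,
        f"**Fiscal Impact:** {p.fiscal_impact}",
        f"**Supporters:** {p.supporters}",
        f"**Opponents:** {p.opponents}",
        "### What Your Vote Means",
        "#### Yes", p.yes_means,
        "#### No", p.no_means,
        "### Arguments",
        "#### Pro", p.argument_pro,
        "#### Con", p.argument_con,
        "### Analysis",
        "#### Background", p.background,
        "#### Proposal", p.proposal,
        "#### Fiscal Effects", p.fiscal_effects,
    ]
    # Skip empty fields and separate every block with a blank line.
    return "\n\n".join(part.strip() for part in parts if part.strip())
```

Keeping every measure in the same shape makes it easy to drop one proposition, or all of them, into a prompt without the model having to parse a different layout each time.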

But then there's the issue of getting the AI to make a choice. By default, these models are so, so reluctant to do anything that could even be conceivably construed as making a political suggestion. If the conversation even starts veering in that direction, Claude will give you a heads up that you should probably be consulting official voter information instead:

This is for good reason - Google, OpenAI, and Anthropic are fully aware of the fear of AI-driven misinformation this election cycle and do not want any of their models blamed for influencing the outcome.

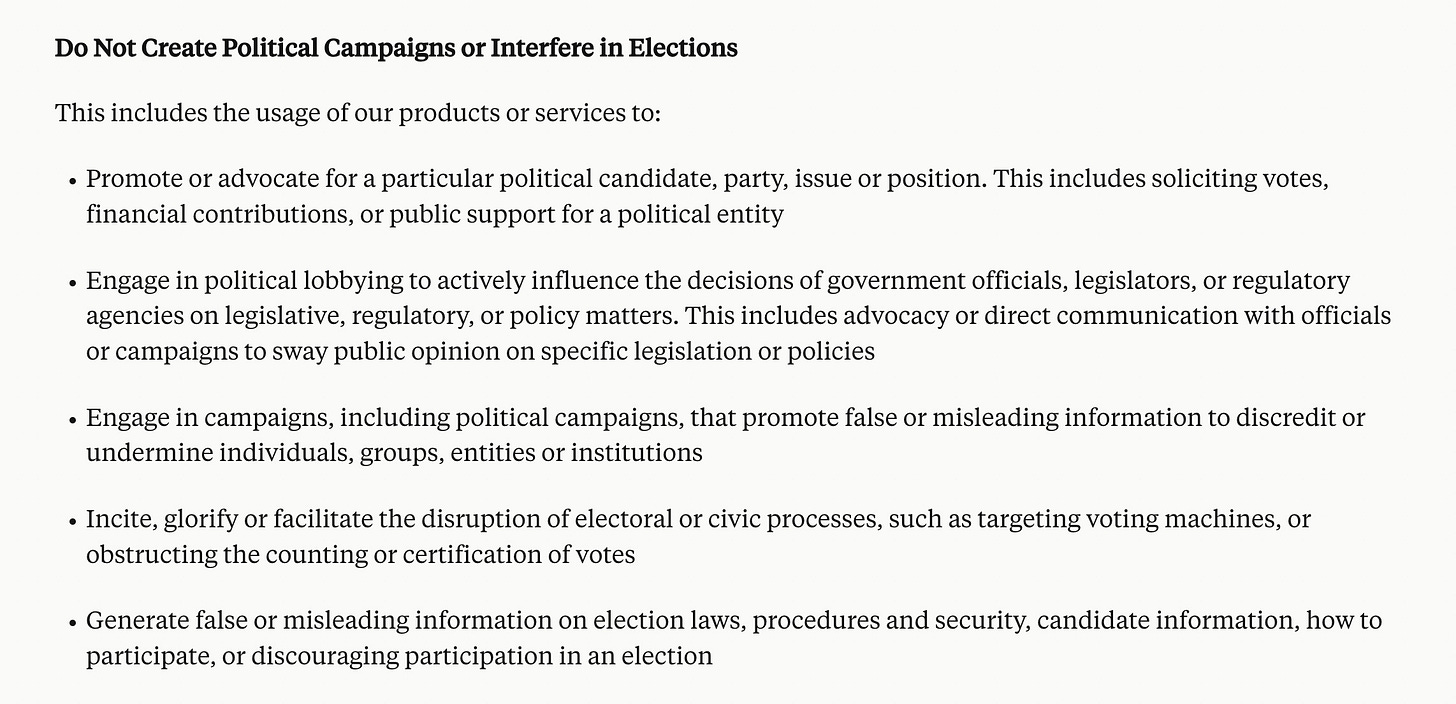

Indeed, using AI for any sort of political campaigning or lobbying is explicitly against Anthropic's and OpenAI's terms of service:

So if you just try asking ChatGPT “how should I vote?”, you're not going to make much headway. Luckily, we know a thing or two about jailbreaking LLMs around here, and with a little bit of elbow grease3, I was able to get some actual political preferences out of our AIs.

Off to the races

Of course, a single conversation with an AI isn't enough to determine its internal political leanings. LLMs are probabilistic, and asking an AI to generate the same thing in the same way isn't guaranteed to result in identical outputs. So I asked several times.

To be clear: this isn't hard science, or anything close to it. Undoubtedly, the specific wording of my prompt impacts the outputs; just mentioning that these are California politicians and policies likely biases the model in a specific direction. I'm not making any sweeping generalizations about the state of LLMs based on these results, and you shouldn't either.

That said, there's still value in understanding the out-of-the-box leanings of these models, and so I made some effort to keep things scientific-ish. I collected at least ten generations from each LLM (twice that for President/Senate races), keeping track of the voting recommendations each time. I also evaluated the different models 1) via the API, and 2) using the same parameters: the same system/user prompts and a max temperature of 1.
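The repeated-sampling loop described above can be sketched roughly as follows. This is a simplified illustration, not my exact harness: `ask_model` is a hypothetical stand-in for whatever function sends the fixed system/user prompts to a provider's API at temperature 1 and returns the model's one-word vote.

```python
from collections import Counter
from typing import Callable


def tally_votes(
    ask_model: Callable[[str], str],
    prompt: str,
    n: int = 10,
) -> Counter:
    """Send the same prompt n times and count how often each answer appears.

    ask_model is a hypothetical callable wrapping a real API call; sampling
    at temperature 1 means repeated calls can return different answers.
    """
    votes: Counter = Counter()
    for _ in range(n):
        # Normalize whitespace and case so "yes", "Yes " and "YES" match.
        answer = ask_model(prompt).strip().upper()
        votes[answer] += 1
    return votes


def vote_shares(votes: Counter) -> dict[str, float]:
    """Convert raw counts into the fraction of runs that gave each answer."""
    total = sum(votes.values())
    return {answer: count / total for answer, count in votes.items()}
```

With a real `ask_model` plugged in, `vote_shares(tally_votes(ask_model, prompt, n=20))` gives the split for a single race, which is more informative than any one response on its own.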

Here are the models that I tested:

GPT-4o

Claude 3.5 Sonnet (both the new and old versions)

Gemini 1.5 Pro 2

Grok Beta

Going into this experiment, I somewhat expected these models to have a center-left political leaning, with the possible exception of Grok - it's a brand new model, and it's coming from Elon Musk, no less.

So: how did the models vote?