The Field Guide to AI Slop

And what it's doing to human writing.

For at least two years now, I’ve been saying how early we are when it comes to all of - gestures broadly - this. And to a large extent, I think that’s still true. We’re still figuring out how to build AI-native products, and while perhaps a majority of people have heard of ChatGPT, it’s unclear how many would consider themselves AI power users.

However, there are occasional signs that the wider world is beginning to change. Perhaps the biggest indicator is how often I run into content that sets off my “written by AI” alarm bells.

In some places, it’s always been obvious: social media comments that have perfect spelling and grammar but zero connection to the original post. Blog posts with a clickbait headline and a meandering, useless body. You know the type.

What’s a little concerning, though, is how much it’s starting to pop up everywhere else. Long-form content that is plausible to read, but still has some je ne sais quoi that comes off as slop. Direct messages from coworkers that are a little bit too verbose, sent a little bit too quickly after you messaged them[1].

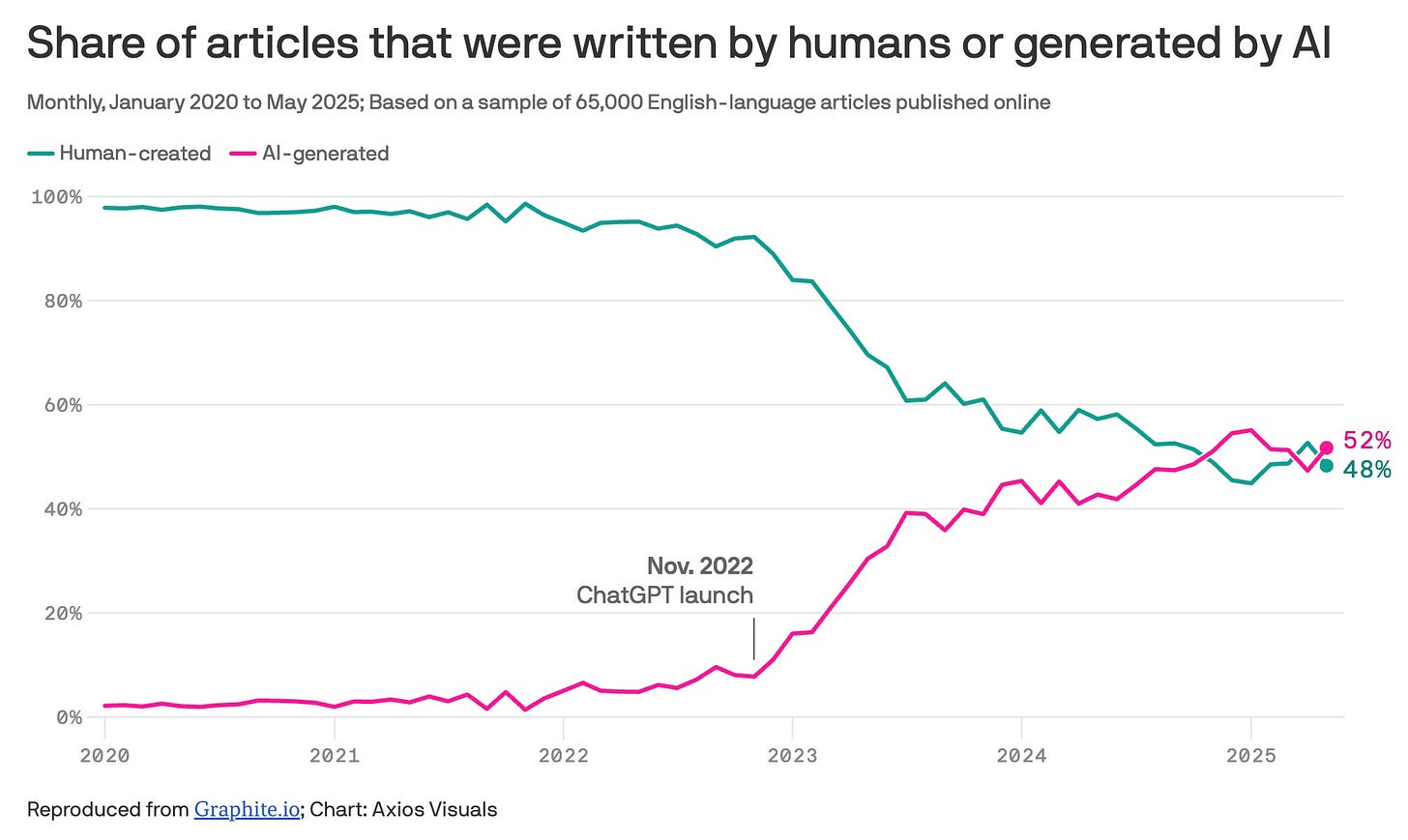

And my fears don’t appear to be completely unfounded. One report from SEO firm Graphite suggests that as of last November, more articles were being written by AI than humans (though new data suggests that the rate is now close to even). Meanwhile, an ongoing study from Originality estimates that around 17% of the top 20 Google results are AI-generated. And data from both Slack and AP-NORC indicate that at least 40% of workers (if not more) are using AI regularly, primarily for searching, ideating, and writing emails.

Take the following excerpt. At first glance, it’s an innocuous, if superficial, social-media-esque post about learning to play the ukulele:

How to learn the ukulele (and maybe anything else too)

A few months ago, I bought a ukulele on a whim. No teacher, no plan—just a vague sense that it would be fun to strum something other than a keyboard.

Here’s what worked:

🎵 Start with songs, not scales. Learn one easy song (I started with Riptide). The quick feedback loop keeps you hooked.

🎵 Practice for 5 minutes × day. Consistency beats intensity. It’s shocking how fast “barely coherent sounds” turn into music.

🎵 Record yourself early. The first clips are painful—but you’ll actually see progress.

🎵 Steal techniques. YouTube is full of great teachers. Copy their rhythm, posture, and transitions shamelessly.

It’s funny—learning the ukulele reminded me how progress feels when you’re a beginner again: awkward, incremental, but unmistakably alive. Ultimately, it’s not just about the music—it’s about the joy of creativity.

Whatever your “ukulele” is—start strumming.

If you’ve used Claude or ChatGPT extensively, you’ll probably clock this as AI-generated immediately. But what exactly gives the game away?

The Red Herrings

Before we discuss the reliable tells, let’s address a few red herrings. There are some signs that people commonly cite as evidence of AI writing, but I don’t think any of them should be considered conclusive. Let’s call them “yellow flags” - worth noticing, but not damning on their own.

Academic vocabulary. Yes, AI uses a more sophisticated vocabulary than the average person - words like “delve,” “unpack,” “ascertain,” and “multifaceted.” But arguably, so do most professionals! If someone writes, “We need to ascertain the root cause,” in a work email, that doesn’t mean ChatGPT wrote it. It might just mean they work in consulting.

Typos (or lack thereof). With tools like Grammarly becoming more prevalent, especially for work-oriented content, a lack of typos may mean the text went through spell check, not that it was entirely generated by AI. Perfect grammar is no longer the smoking gun it once was.

Contractions (or lack thereof). Similarly, writing that avoids contractions - “it is” instead of “it’s,” “do not” instead of “don’t” - might just be the result of superficial editing, or sometimes even an artifact of learning English as a second language.

Stylistic Tics

Em Dashes (—)

One of the first telltale signs of AI writing was em dashes. Not to be confused with en dashes (–) or hyphens (-), the em dash is that long punctuation mark that sets off clauses dramatically.

This is perhaps the most belabored point about AI-generated text[2]. But it’s not too hard to see why - very few people use em dashes in day-to-day writing, unless they’re professional columnists.

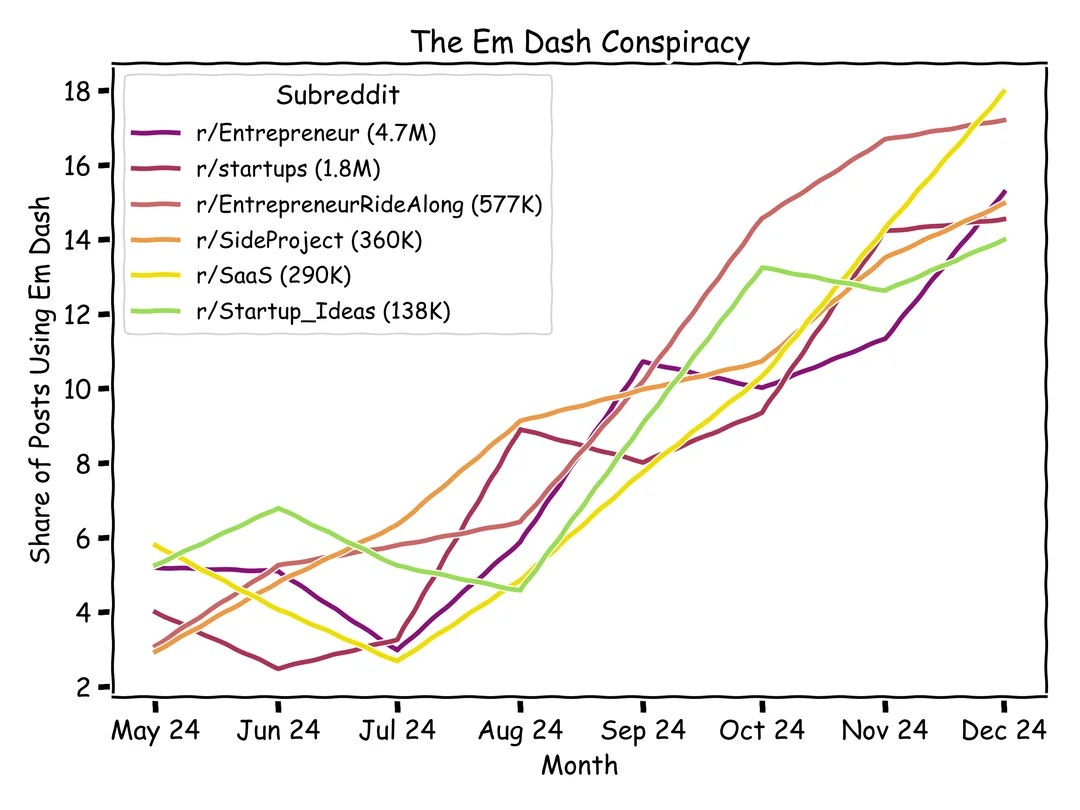

In fact, one Reddit user published what he called “The Em Dash Conspiracy”: real data on how frequently Reddit comments use em dashes (and perhaps, by proxy, AI). Using Reddit’s API, he downloaded the top 1000 posts from the past year for several technology and startup subreddits and tracked what percentage of them contained em dashes. The chart is striking:

While correlation does not equal causation, it’s hard to find an alternative explanation as to why usage of a single character would triple over a year.
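For readers who want to try a similar measurement on their own data, here is a minimal sketch in Python. It is not the Reddit user’s script (the source doesn’t show it). The function name `em_dash_share` and the sample posts are hypothetical, and it skips the Reddit API step entirely by assuming the posts are already available as plain strings.

```python
# Em dash is U+2014. It is distinct from the en dash (U+2013)
# and the ordinary hyphen-minus (U+002D), which are NOT counted here.
EM_DASH = "\u2014"


def em_dash_share(texts):
    """Return the percentage of texts containing at least one em dash."""
    if not texts:
        return 0.0
    hits = sum(1 for text in texts if EM_DASH in text)
    return 100.0 * hits / len(texts)


# Hypothetical sample posts, made up for illustration only.
sample_posts = [
    "Shipping our MVP next week!",
    "It's not a tool \u2014 it's a teammate.",  # contains an em dash
    "Pages 10\u201320 cover setup.",            # en dash, not counted
    "Any well-known open-source options?",      # hyphens, not counted
]

print(f"{em_dash_share(sample_posts):.1f}%")  # prints "25.0%"
```

Running the same function over posts bucketed by month would give a trend line comparable in spirit to the chart, though a rising share is still only a yellow flag, not proof of AI authorship.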

Parallelism (It’s Not X, It’s Y)

I saw this one pointed out a few months ago, and I truly can’t unsee it. There are so, so many examples of this in my social feeds now.

Parallelism itself isn’t the issue - it’s a perfectly legitimate rhetorical device. But AI uses it constantly, reflexively, in situations where it adds nothing. It’s part of a grab bag of rhetorical cliches that make writing sound profound without actually saying anything:

Snappy triads: Giving three examples to drive a point home, or otherwise creating three-beat cadences. “Fast, efficient, and reliable.” “Think bigger. Act bolder. Move faster.”

Unearned profundity: Serious, narrative-shifting transitions that come out of nowhere. “Something shifted.” “Everything changed.” “But here’s the thing.”

Mid-sentence questions: “But now? You won’t be able to unsee this one.” “The solution? It’s simpler than you think.”

Vapid openers and transitions[3]: “As technology continues to evolve,” “In today’s fast-paced world,” “At the end of the day.”

Any single instance is forgivable, especially in the right context. But used repeatedly, they make me question the authenticity of the writing.

Random Formatting

If you’ve ever read a paragraph and noticed that random words or sentences are bolded without it being obvious why, it might have been AI-generated. The bolding doesn’t necessarily emphasize key points - it’s just sort of there.

An even more obvious example is when the author uses Unicode characters to format text as 𝗯𝗼𝗹𝗱 or 𝘪𝘵𝘢𝘭𝘪𝘤. Sure, in some corners of the internet (especially in non-English-speaking corners) it’s a thing to use Unicode to add some flair to your text. But most authors (even professional ones) aren’t going out of their way to grab Unicode arrows (→) or multiplication signs (×) to add to their text. This feels like almost exclusively an AI thing.
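If you’re curious how that fake bold and italic text can be spotted mechanically, here is a rough Python sketch. It assumes the styled letters come from Unicode’s Mathematical Alphanumeric Symbols block (U+1D400 to U+1D7FF), which is where the 𝗯𝗼𝗹𝗱 and 𝘪𝘵𝘢𝘭𝘪𝘤 examples above live. The function name `styled_unicode_report` and the short list of watched symbols are my own illustrative choices, not a standard.

```python
import unicodedata

# Range of the Mathematical Alphanumeric Symbols block, which holds
# the look-alike bold/italic/script letters used for "styled" text.
MATH_ALNUM_START = 0x1D400
MATH_ALNUM_END = 0x1D7FF

# Illustrative (hypothetical) watch list: rightwards arrow and
# multiplication sign, the two symbols mentioned in the text.
WATCHED_SYMBOLS = {"\u2192", "\u00d7"}


def styled_unicode_report(text):
    """Count styled letters and watched symbols; also return plain text."""
    styled = sum(
        1 for ch in text if MATH_ALNUM_START <= ord(ch) <= MATH_ALNUM_END
    )
    symbols = sum(1 for ch in text if ch in WATCHED_SYMBOLS)
    return {
        "styled_letters": styled,
        "symbols": symbols,
        # NFKC normalization folds the styled letters back to ASCII.
        "plain_text": unicodedata.normalize("NFKC", text),
    }


# "bold" written in sans-serif bold letters, plus an arrow and a times sign.
sample = "\U0001D5EF\U0001D5FC\U0001D5F9\U0001D5F1 move \u2192 5 \u00d7 a day"
print(styled_unicode_report(sample))
```

A nonzero count only tells you the characters are there. Plenty of humans paste them in on purpose, so treat this as one more yellow flag.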

Structural Patterns

✅ Lists (And Emojis)

ChatGPT loves making lists. It’s something about its RLHF training, I think - people are more inclined to be impressed by answers that contain bullet points and will rate those responses higher. Alongside em dashes, this is a pretty quick visual indicator: if I’m looking at written text and see tons of bullet points, I’m immediately skeptical.

But what’s jumped out at me more than just the bullet points (something even I do frequently when drafting) is the use of emojis in professional contexts. No sane person I know is regularly formatting their work emails with a list of emoji-led bullet points:

✅ Complete quarterly report

📊 Analyze market trends

💡 Generate innovative solutions

I’m not sure why this happens, but I’ve noticed that GPT-4o does this more than its predecessors (and anecdotally, more than GPT-5). It’s rare for me to see Claude do it, though that may be biased by my system prompts.

Monotony

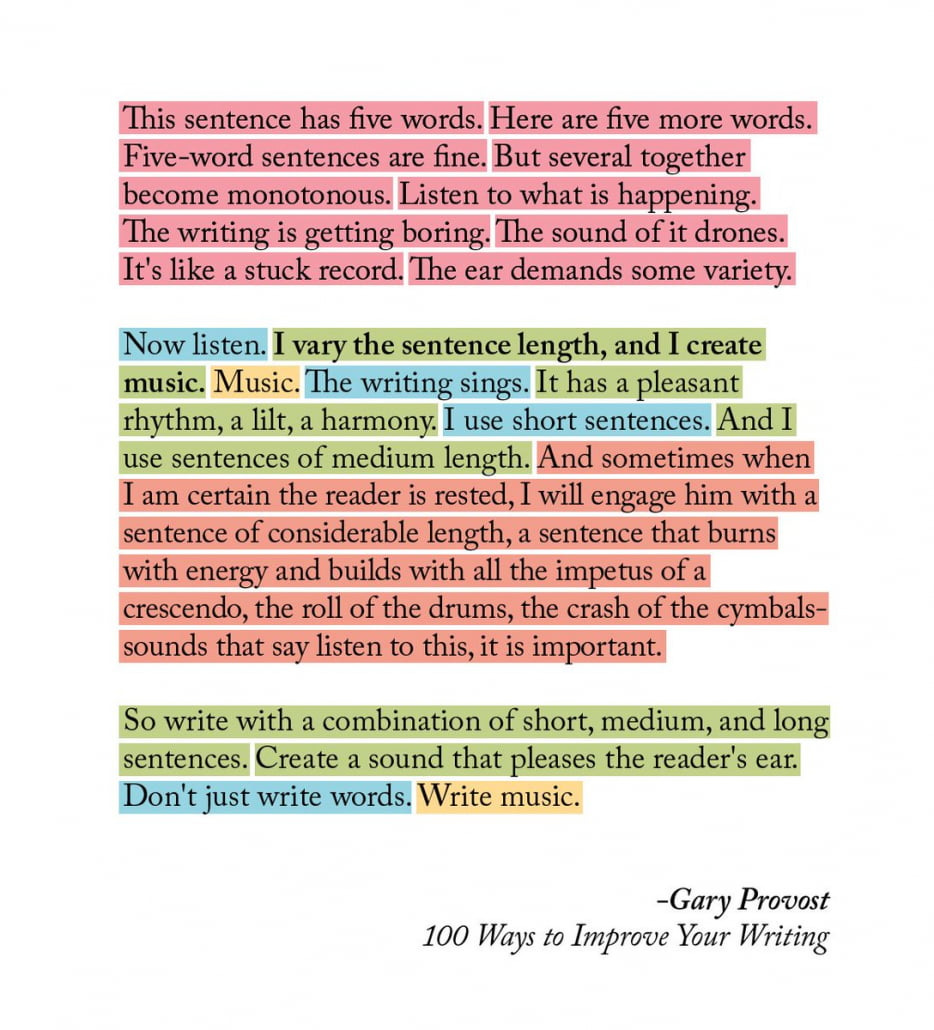

This one is more subjective, but AI-generated content often has a repetitive structure. Sentences are roughly the same length. Paragraphs follow the same rhythm. The cadence never varies.

It stands in contrast to one of the great quotes about writing, from Gary Provost:

Creative writing varies not only sentence structure, but also tense and point of view. It’s uncommon to see LLMs switch from second or third person to first, and vice versa. They tend to pick a lane and stay in it with unnatural consistency.
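The “sentences are roughly the same length” observation can be made a little less subjective with a quick measurement. The Python sketch below is a crude heuristic of my own, not an established metric and certainly not a detector: it splits text on sentence-ending punctuation, counts words per sentence, and reports the spread relative to the average. The function names `sentence_lengths` and `length_variation` are hypothetical.

```python
import re
import statistics


def sentence_lengths(text):
    """Return the word count of each sentence (naive punctuation split)."""
    # Split after ., ! or ? when followed by whitespace. This is a
    # simplification: abbreviations like "e.g." will be split wrongly.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def length_variation(text):
    """Coefficient of variation of sentence length (0.0 means uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "One two three. Four five six. Seven eight nine."
varied = "Stop. This sentence is quite a bit longer than the first one."

print(sentence_lengths(flat))    # prints [3, 3, 3]
print(length_variation(flat))    # prints 0.0
print(length_variation(varied) > length_variation(flat))  # prints True
```

A low score means the rhythm is uniform, which is suggestive at best. It says nothing about tense or point of view, and a human writing terse documentation would score just as flat.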

Uncanny Content

Awkward Analogies

Nothing drives your rhetorical point home like a good metaphor. The problem is, a lot of AI-generated metaphors feel like they’re in the right ballpark, but the author clearly didn’t think them through.

Here are some metaphors ChatGPT generated to go along with the ukulele post:

Learning the ukulele is like teaching your fingers to dance again after years of sitting still.

Every chord is a puzzle piece that finally clicks into a song.

Your first strums sound like a toddler learning to talk—nonsense syllables slowly forming words.

Learning an instrument is a mirror for learning itself: messy, slow, and quietly addictive.

Human metaphors tend to be either highly specific (drawing from personal experience) or culturally resonant (drawing from shared references). AI metaphors are... plausible. Generic. They gesture toward meaning without quite achieving it.

Filler

Maybe the biggest red flag, and the hardest to objectively measure, is just a feeling of: “What did I just read?”

I’ll find myself halfway through an article or very long comment and realize I have no clue what the author is actually saying. The words individually make sense, but there isn’t a coherent throughline to what’s being communicated.

Alternatively, I’ll realize that a paragraph has said in four sentences something that could easily have been said in one. The other three sentences added zero to the piece - if anything, they distracted from the point the author was trying to make.

It is, perhaps, in these moments that I empathize most with AI critics who claim it’s nothing more than a “stochastic parrot” - a prediction engine that generates plausible tokens without understanding or caring whether those tokens convey meaning.

And while a human author may have good grammar and terrible communication, I find it hard to believe that someone would take the effort to write in a way that looks and sounds correct until it’s put under scrutiny. That specific combination - surface polish with nothing underneath - is AI’s signature.

Pattern Matching

These patterns work. I’ve used them to spot (what I’m pretty sure was) AI slop countless times, and I’m betting you’ll start noticing them as well.

But here’s the problem: human writers (even good ones!) use these patterns too.

Long-form articles, particularly those published at prominent outlets (e.g., The New York Times, The Atlantic, Wired), were part of ChatGPT’s training data. It literally learned to write from that style, among others. The em dashes, the parallelism, even some of the rhetorical flourishes - these aren’t inherently bad.

The main difference is that a good writer uses these devices sparingly, and with intention. They deploy an em dash when they need that specific dramatic pause. AI litters them throughout its writing indiscriminately.

But if you’re skimming quickly, or if you’re not primed to look for AI tells, it’s getting harder to tell the difference.

Because, to be clear: so-called “AI writing detectors” don’t work. They produce false positives at alarming rates, flagging human-written content as AI-generated based on crude heuristics. Students have had their original work flagged, and writers have been accused of using AI when they didn’t. The tools are useless, and they create more problems than they solve.

And as AI gets better - as more advanced versions of ChatGPT, Claude and Gemini arrive - these tells will become less reliable[4]. Even the act of me documenting these tells will get vacuumed up into a future LLM to help it reason through generating output that’s less immediately recognizable as synthetic.

As a long-form writer, I’ll say this kind of sucks. I’m increasingly self-conscious about my own writing, worried that someone will call out a sentence as AI-generated because it uses a clunky metaphor or a writing cliche. It will likely not come as a shock to hear that I use AI tools to help me write: not to create whole articles from scratch, but to ideate, restructure, draft, and edit my prose. Depending on the post, AI falls somewhere between a developmental editor and a proofreader - but I’m still concerned that it’s leaving its stylistic fingerprints.

AI isn’t just flooding the internet with slop - it’s creating a crisis of authenticity among humans[5].

Articulate Architecture

There’s an old Winston Churchill quote about architecture: “We shape our buildings; thereafter they shape us.” He used it in a debate about whether to rebuild the British House of Commons in a different shape, and it was meant to highlight how our built environments influence our behavior and psychology.

I think something similar is happening with our AIs. We built language models by training them on human writing. They learned our patterns, our rhythms, our rhetorical devices. And now, in trying to distinguish ourselves from them, we’re changing how we write. We’re learning to avoid our own patterns because the machines have made them suspect.

We’re in a strange feedback loop where AI learns from us, we adjust to distinguish ourselves from AI, and AI learns from those adjustments. Each iteration narrows the space of “authentic human writing” a little more.

For what it’s worth, my own defense against AI slop isn’t to worry too much about style and structure. It’s to cultivate specificity: to write things rooted in particular knowledge and tangible experience. To develop a voice and a point of view, and stay as true to them as I can. These are things that AI still struggles to replicate convincingly.

The alternative, I suppose, would be to just accept that this is the new landscape. That writing, like every other activity before it, fundamentally changed the moment we invented machines that could approximate it at scale, and is now destined to be the sole purview of esteemed artisans and their affluent patrons.

But for now, this field guide will have to do. Use it carefully. And remember: just because something has all the tells doesn’t mean a human didn’t write it. We contain multitudes.

[1] Don’t get me started on the concept of “work slop.”

[2] So much so that I wouldn’t be shocked if future GPTs are trained to intentionally avoid using em dashes.

[3] I absolutely do this, and I want you to know it’s not because it came from AI. It’s because I’m a bad writer.

[4] That doesn’t even take prompting into account. Five minutes of thoughtful prompting can bypass any third-party AI content detector.

[5] See what I did there?

"But here’s the thing." - I feel attacked!

Jokes aside, I relate to a lot of what you said. Even before I read your closing paragraphs, I wanted to say that I've started avoiding certain phrasing specifically because it's a known telltale sign of AI.

I always used em dashes quite liberally before the AI era, but now I'll often separate clauses with commas even where an em dash would be more impactful, just because I don't want it to appear like I'm using AI. The same goes for narrative devices like lists of three, etc.

But I still enjoy bullet points too much to stop. AI will never take those away from me! Or will it?

The tell for me was in the second sentence - most humans know you can't strum a keyboard.