Review: The best AI-generated voices

It's now alarmingly easy to create human-sounding audio.

Have you been curious about AI-generated voices? Not sure where to start or which product to use?

I researched dozens of AI voice-generation tools and got hands-on with ten of them. Keep reading for a look at the top pick(s), how AI voice generation works, use cases and abuse cases, and a breakdown of the testing criteria and competition.

Note: This review focuses on the most human-sounding voices. While there are plenty of other features to consider, like the diversity of languages, voice cloning, and project management, those were not the most important considerations.

To test each voice generation platform, I used an except from a recent post.

Overall best: Replica Studios

Replica Studios have the best AI voices. They had the best out-of-the-box voices, in a wide variety of accents, that sounded very realistic. Their targeted use case is game developers and filmmakers who want to save time recording character dialogue. My one critique might be that the voices sound very theatrical and dramatic, which may not be what you’re looking for.

Their secret sauce is professional voice actors that are hired to record audio samples. This gives their voices a richness and depth that's very difficult to match. Exploring their library is a fun activity in and of itself, and they have a wide range of accents available. However, they don't currently have non-English voices.

They also have a straightforward project management UI, with scenes and lines. Each line is assigned to a voice, and each voice has styles, which are somewhat related to emotions. Think "light-hearted" vs. "serious" or "happy" vs. "angry." Each voice has its own set of styles, and you can create your own "blend" of styles from the existing options.

You can also do more advanced editing to tweak the lines at a very granular level. It's possible to add silences or change the voice volume, speed, and pitch. However, I didn't see phoneme-level editing, a feature present in other tools, which let you tweak specific syllables.

Their free trial offers 5 minutes of audio generation and then costs $39 for every 4 hours of audio.

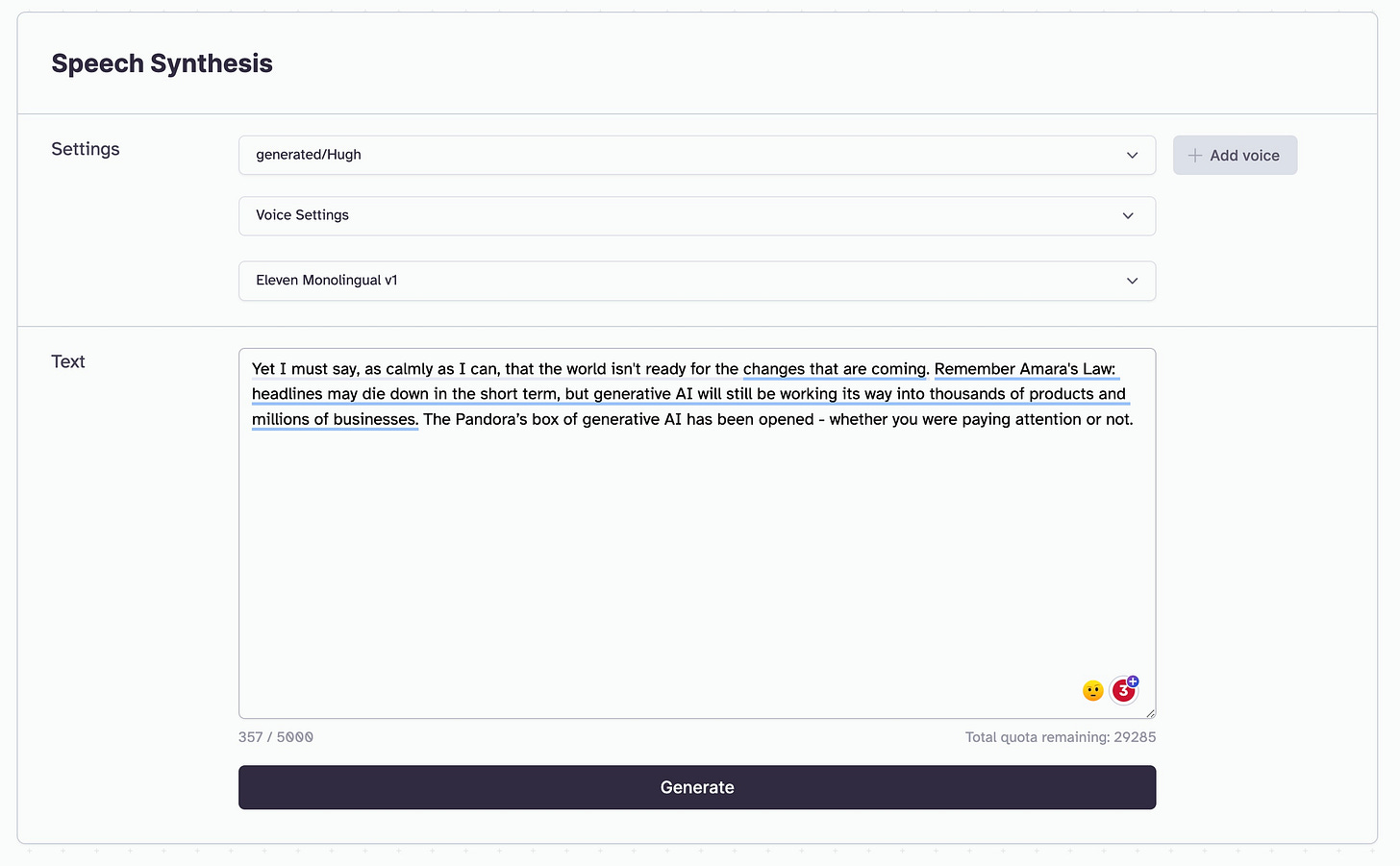

Runner-up: ElevenLabs

The runner-up pick is ElevenLabs. Their default voices were quite good, but there were only a few compared to the options from Replica Studios. Their VoiceLab features allow you to generate near-endless voices for a given gender, age range, and accent.

With some trial and error, these voices were arguably more realistic than the voices from Replica, but generating them isn’t a guaranteed success. Plus, ElevenLabs is missing a lot of features that are becoming table stakes, like clip editing, project management, and emotion fine-tuning. Taken together, these drawbacks kept it from being the top pick.

But given the main factor was the quality of the voices, ElevenLabs is a strong runner-up. It also helps that their platform is quite easy to use - other tools can seem intimidating with all the bells and whistles. Plus, it starts at $5/month for 30K characters (after a 10K character free trial), a much more affordable entry tier.

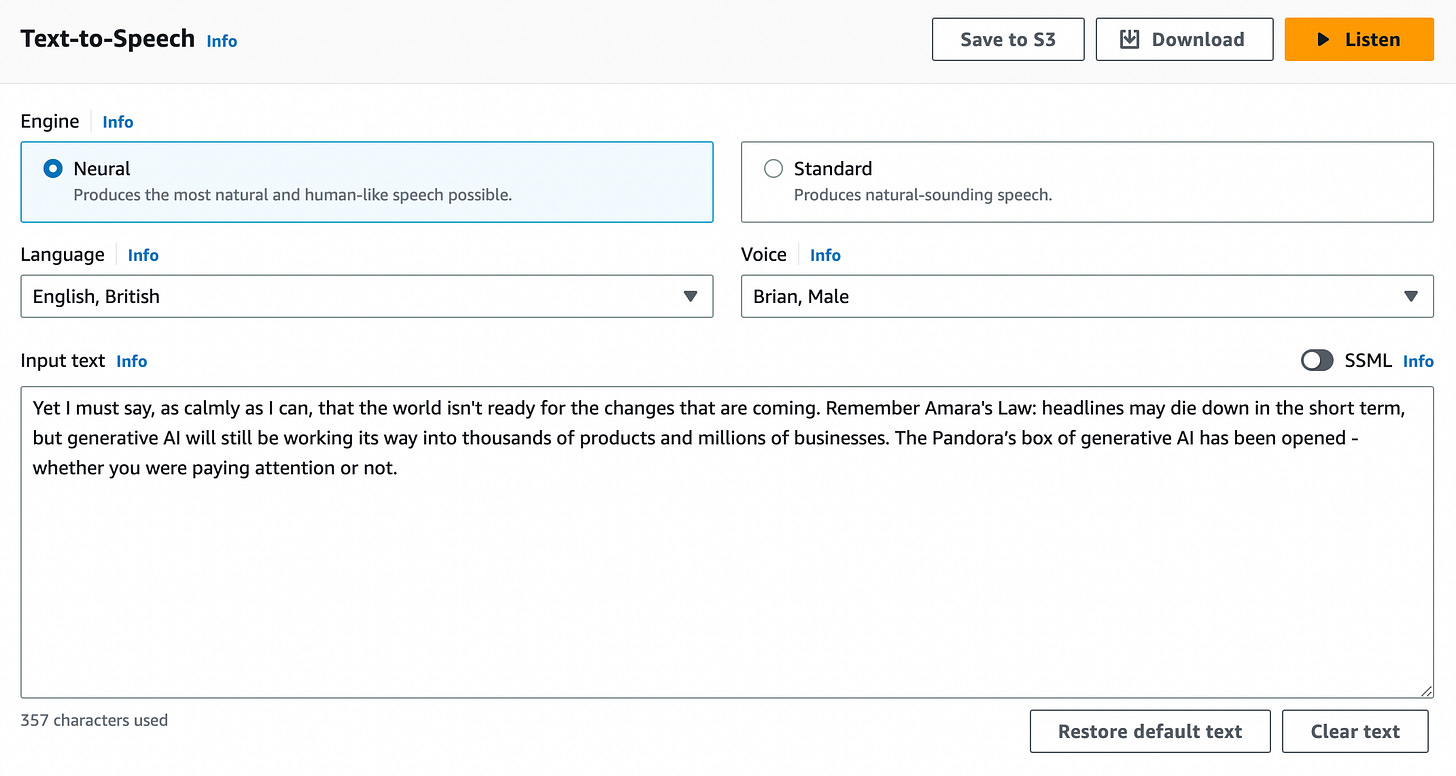

On a budget: Amazon Polly

The budget pick here is Amazon Polly, a text-to-speech service under Amazon Web Services (AWS). The AWS free tier offers 1 million characters of text in the first year, which is very generous. I will say that their voices were not the most realistic sounding, especially compared to the top picks. But that's offset by the very large array of accents and languages that they have on offer. In contrast, Replica and ElevenLabs only offer English-speaking voices right now.

I suspect that Amazon Polly is powering many of the other voice generators I tested. But building a larger application is a perfect use case for Polly - it's meant to be a platform. And while they don't offer any UIs to fine-tune the speech, they do have SSML (synthesized speech markup language). SSML is based on XML, and can let developers control the way speech sounds. Tags like <prosody> and <emphasis> affect emotions and emphasis.

Why you should trust me

I've spent the last 10 years in Silicon Valley and the last 15 years programming. While I don't consider myself a world-renowned machine learning expert, I do have some experience with machine learning. I've built and launched dozens of software products, and I have a good eye for how they work and what their limitations might be.

However, this review is only limited to English text. I wasn’t able to evaluate the voice quality for non-English languages - partly because not all platforms could generate multilingual audio, and partly because I’m not fluent in anything besides English.

For this review, I spent over 100 hours researching, evaluating, and testing different AI voice-generating tools. I didn't receive any compensation or payment for any of them, nor am I affiliated with any of them. That said, I'm an alumnus of both Stanford and YCombinator, and it's likely that I know affiliated folks through my extended network. I've done my best to be unbiased in my review.

How AI voice generation works

This section gets pretty technical, so feel free to skip it.

AI voice generation, also known as text-to-speech (TTS), converts written text into human-like speech. There are several methods used today, with the most common being concatenative synthesis, parametric synthesis, and neural network-based synthesis.

Concatenative synthesis. This method divides speech into small chunks, known as phonemes or diphones, and then reassembles them to create the final audio. Think speech as Lego bricks. The quality of the output depends on the size and diversity of the original speech dataset. While it can produce high-quality results, it generally requires a lot of storage and computing power to do so.

Parametric synthesis. This method models human speech using parameters like pitch, duration, and emphasis. By changing the parameters, the model can generate speech waveforms. It's a lightweight and inexpensive process, but generally results in less natural-sounding output.

Neural network-based synthesis. This is the most recent and popular method for generating AI voices. It uses deep learning models like recurrent neural networks (RNNs) or generative adversarial networks (GANs) to model the relationship between text and speech. One example is WaveNet, developed by DeepMind, which uses neural networks to create very natural-sounding audio.

I'm going to oversimplify here massively, but the process generally involves three steps:

Text preprocessing. The text is converted to a list of smaller tokens, such as phonemes, syllables or words.

Acousting modeling. The AI model, often a neural network, takes the tokens and predicts the corresponding output characteristics. Things like pitch, duration, emphasis, and emotion.

Waveform synthesis. After predicting the final characteristics, the output is then converted to audio directly or sent to a separate model as parameters to create the audio.

We’ve made big improvements in neural network synthesis over the last few years, and given the amount of research going into transformer models like LLMs, expect to see voice generation get even better.

Use cases

Each application had a slightly different intended use case, so let’s take a look at how voice generation can be used today.

Narration/Voiceover. This one is fairly obvious. As an author or a publisher, you can now have high-quality voiceover in a matter of minutes, for a fraction of the cost of a professional voice actor. And project management tools give audiobook publishers a lot more flexibility in how they create and edit. Personally, I'm planning to use AI to create audio editions of this newsletter.

Productivity. While many products focused on content creation, some were aimed at productivity. Speechify is one example - its main value proposition is saving time by narrating documents or emails. These days, this use case hits home. Since starting Artificial Ignorance, I've subscribed to dozens of newsletters and struggle to read them all.

Video games. Video games are a great use case for generative AI (and they are Replica's target customer). The world of a video game is often full of rich detail, with each conversation or cutscene meticulously crafted. Generative AI tools can likely save hundreds to thousands of hours for game developers. With voices in particular, devs can write test out new lines or make edits without having to go back to the recording studio.

Entertainment. Beyond gaming, there are big applications for AI voices in entertainment. We're looking at the very real prospect of fully synthetic characters and celebrities. Or, celebrities cloning their voices to "engage" with more fans than before. But even without that, AI voices can work for general content creation. For example, if I wanted to expand this newsletter to YouTube or TikTok, I could use AI voices to help move to a different medium. Now all you need to make your own YouTube channel is a few different AI tools and an editor.

Post-Production. One interesting use case that I hadn't considered was editing existing audio. BeyondWords, for example, lets you take an audio clip and edit the transcript - all while keeping the voice the same.

Software assistants. Of course, the improvements to AI voices will make smart assistants and other assistive technology that much more prevalent. I previously cobbled together my own smart assistant over a weekend with ElevenLabs and ChatGPT. Conversational assistants could be coming to many more products soon.

Sales/Marketing. Sales people adore tools to tailor their outreach, and this is no exception. Expect companies to send custom voice messages about how their product would be a great fit. On the marketing side, this simplifies one step of recording advertisements or podcasts.

Customer service. In the world of customer service, it's dramatically more expensive to offer live phone support versus text-based chat. Tools like these might bring down the cost of call centers if everyone is typing rather than talking. Though if that doesn't work, at least call trees will sound more exciting.

Abuse cases

But on top of the use cases, I also want to talk about abuse cases. Technology is a double-edged sword, and clearly, this technology has the potential to cause harm. So let's acknowledge some of the trade-offs with AI voices. This isn't an exhaustive list, but only a first attempt at categorizing what is now possible.

Impersonation/Fraud. This is often where people's minds first go. It's becoming easier and easier to clone someone's voice, with recent Microsoft models only requiring 3 seconds of audio. Scams can become that much more targeted and sophisticated, and in fact, it's already started to happen. Parents are being fooled by AI clones of their children's voices. Journalists are using digital clones to bypass bank security. When we can't trust the voice of the person on the other end of the call - that opens up an enormous can of worms.

Job displacement. Like a lot of AI, there are issues with job displacement with AI voices. Voice actors and audiobook narrators may have their livelihoods threatened. Not all publications will switch to AI, but those who primarily care about cutting costs will definitely try AI voices. And as other AI tools get better, we may start cutting out actors completely - big-name directors like Joe Russo believe AI-generated films are only a couple of years away.

IP infringement. While I didn't look specifically at AI voices for music for this review, there's a growing concern about AI in the music industry. After recent viral Drake songs, there are more people than ever creating bootleg pop songs with AI lyrics and voices. And the music industry is not happy. This is still a huge legal gray area, so we don't yet know whether you can copyright a voice. If it turns out that you can, then Hollywood is almost certainly going to own the rights to actors’ voices.

Data security. With many AI tools, data security is a real concern. All of these products require you to keep a copy of your text on their servers. For most projects, that might not be a big deal, but you might have sensitive company emails or a new book release that you don't want to set loose on the internet. While there are some open-source models, such as Coqui, they aren't as realistic sounding as the proprietary ones.

The selection and testing process

Finding and selecting these tools was a bit of a challenge in itself. While YouTube videos with cloned celebrity voices are a dime a dozen, finding the tools behind the videos was more difficult. I used a combination of GitHub, Google, Product Hunt, and third-party data to create an initial list.

From there, I narrowed the list down to 9 that I wanted to actually test. I took several factors into consideration, but I wanted a diverse mix of products. Some of the factors I used to create the shortlist:

Longevity: How long has this tool been around for? Will it still be around in a year?

Uniqueness: Are these all targeted at the same type of customer? Is there a unique value proposition?

Open source: Is there at least one open-source option?

Cloud platforms: Is there at least one major cloud platform (AWS/GCP/Azure) option?

Once I started testing, I created a comparison table for each product and ranked it along many different factors, including:

Free tier available

Price

Voice quality

Voice cloning available

Multi-accent

Multilingual

Project management features

Emotion control

Prosody control

Phoneme control

Ethics policy

API access

Third-party integrations

The competition

Here are the different apps that I tested (in alphabetical order):

Got feedback?

Did I miss your favorite tool? Are there other use cases that you can think of? Leave a comment and let me know! And if you want to help shape the next review, vote in the poll!

Great post! I’ve been using Murf.ai in some of my creative projects. I’m pretty happy with it but will check out some of these other tools.

This was an awesome piece, thanks for all your research and your explanation on how they work!! I really appreciate it and everything else you’ve published in your newsletter.

I’m guessing you may have encountered this in your research on synthetic voices, but I recently noticed a YouTube channel called Izam using pretty realistic AI people as presenters in their videos. They have a pretty genius business strategy of marketing Midjourney prompts they are selling on Etsy with flashy presentations and AI voice/people. So at first glance at their videos with the people and their thumbnails it appears like it’s a real person when it’s not. I’ve tried to figure out what they are using but wasn’t able to. So I’d love for you to review tools like this (if they use just one) or complementary tools that can be used for things like this! I could see it being a huge help to non-native English speakers, camera/voice shy people, and any others who would prefer not to show their own face/body but still have a visual avatar.