How to talk to your family about AI over the holidays

A handy guide for your uncle's burning questions.

Next Thursday is the first anniversary of ChatGPT's launch. If you've kept up with all the AI developments since, that seems like ages ago. It's hard to believe so much has changed in the last year.

But it's also easy to forget that most people still have no idea about the industry's daily announcements. They're not obsessing about the latest foundation models or product launches - though by now they may have heard about OpenAI's CEO drama.

In the spirit of the holidays, let's recap some questions your friends and family might have about AI this year.

We'll start with some basics, and work our way up:

What's happening with OpenAI's CEO?

Why is ChatGPT such a big deal?

Why are people so anxious about AI?

What is the government doing about AI?

How is AI going to impact me?

What's happening with OpenAI's CEO?

Even if they don't know what ChatGPT is, if your loved ones have been paying attention to the news, they've probably heard about the dramatic firing (and re-hiring) of OpenAI's CEO, Sam Altman. There have been many, many explainers of what happened - I suggest you check some of them out. But here it is in a nutshell:

Sam Altman was fired by OpenAI's board, and co-founder Greg Brockman, plus key researchers, quit.

There was an immediate backlash, and the board began talks to bring Altman back as CEO.

After talks broke down, Sam and Greg "joined" Microsoft, OpenAI's largest investor.

Hundreds of OpenAI employees (~95%) threatened to leave and join Microsoft if the board did not resign.

After OpenAI went through TWO interim CEOs in four days, Altman was reinstated as CEO, and the majority of the board members who fired him resigned.

A new 3-person board has been formed, with the goal of creating a more permanent 9-person board.

It remains to be seen who will be on the new 9-person board. Presumably, Altman and Microsoft both want seats, and Altman likely wants to put allies on the board to reduce the possibility of any future firings. And we still don't really know the original motivation of the board that fired Altman in the first place - though, regardless of whether the motivations were justified, the execution was managed extremely poorly.

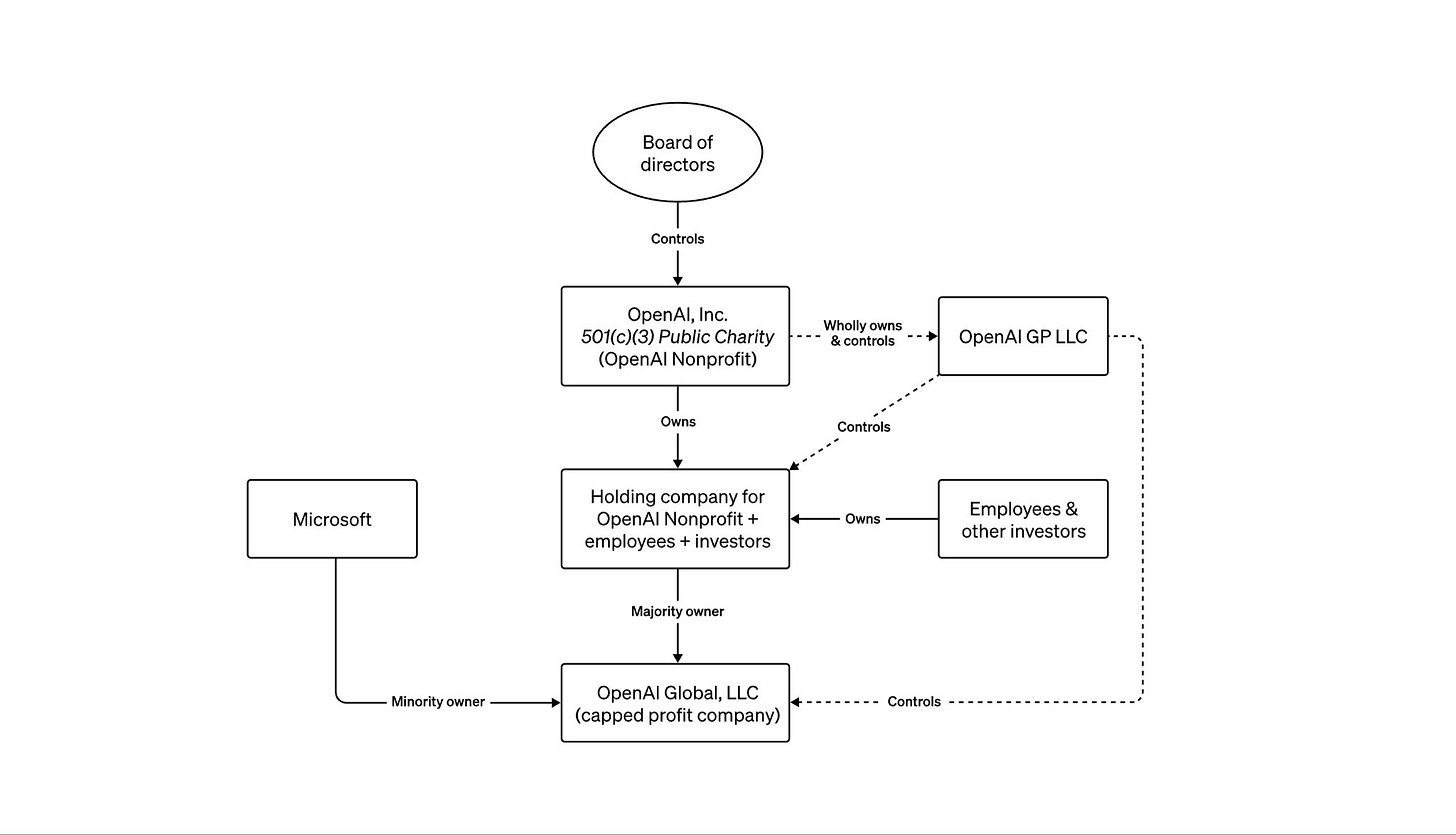

Much of the discussion has also revolved around OpenAI's unique organizational structure. It was originally founded as a non-profit with the mission of creating AGI for the benefit of humanity. Later, OpenAI created a for-profit subsidiary and received an estimated $10 billion investment from Microsoft. Employees and investors alike have profit-sharing agreements rather than stock in the for-profit venture, which is ultimately controlled by the non-profit. The tension between the non-profit's mission and the for-profit's commercial interests is unlikely to go away completely, but if I had to guess, the company will be much more aligned with its for-profit proponents moving forward.

Why is ChatGPT such a big deal?

Related questions:

How does ChatGPT work?

What makes ChatGPT different from Siri/Alexa?

Does ChatGPT cost money?

As ubiquitous as ChatGPT seems, there are still a lot of people who aren’t familiar with it. Estimates from this summer indicate only 14% of US adults had tried ChatGPT. So if your friends or family are entirely in the dark about this chatbot, it might be helpful to give them a short introduction to what it is and why people care about it.

The biggest hurdle is that newcomers often don't understand how it's different. We've had chatbots before - most internet users have talked to a chatbot in some form. But the vast majority of those bots are either scripted or have a very limited amount of understanding. We're used to smart assistants like Siri and Alexa, which respond with stilted answers unless you phrase a question in precisely the right format.

But ChatGPT (and its competitors) can manage language in all of its messy forms. While it's technically a machine that generates words - you can loosely think of it as autocomplete on steroids - it's so much more advanced that it can start to blur the boundaries between artificial and human conversation. It's a machine that has been fed billions of words from the internet, and as it turns out, at a certain size, predicting the next word in a sentence can produce shockingly realistic results.
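If someone at the table wants to see what "predicting the next word" means, here's a toy sketch in Python. To be clear, this is not how ChatGPT is built: real models use neural networks trained on vastly more text. This version just counts which word most often follows another in a tiny made-up sample, and the function names (`train_bigrams`, `predict_next`, `autocomplete`) and the sample text are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def autocomplete(following, start, length=5):
    """Repeatedly append the most likely next word, up to `length` times."""
    words = [start.lower()]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# A tiny, made-up "training set" (an assumption for this demo only).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))      # the word seen most often after "the"
print(autocomplete(model, "dog", 3))   # extends "dog" one likely word at a time
```

With only a few sentences to learn from, the output is repetitive and dull. The loose intuition is that the same basic idea, scaled up enormously and given far more context than one previous word, is what starts to feel like conversation.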

Try a Mad Libs-style demo if you're showing someone ChatGPT for the first time. Ask for their favorite poet or writer and the name of a pet, then have the bot create a completely custom poem on the spot. If they're multilingual, ask for an answer in another language. If you have ChatGPT Plus, show them DALL-E or use the voice features to recreate a scene from Her. Seeing someone's amazement for the first time is always a delight.

It's also worth pointing out that ChatGPT is not a search engine. Muscle memory makes it tempting to type questions into the chat as you would into a search box - and ChatGPT is trained to give you answers - but we know that ChatGPT can and will make up facts and figures.

Should they be interested in trying it for themselves, you can also let them know that the baseline version of ChatGPT is free to use, while more advanced versions that can create images and analyze files cost $20 a month with ChatGPT Plus.

Why are people so anxious about AI?

Related questions:

Is this how we end up with Skynet?

Aren't there lawsuits around AI right now?

How do I tell if something was written by an AI?

There is a lot of anxiety around AI. But, depending on who you talk to, there are very different things to be anxious about! At one extreme, some people believe AI will become superintelligent and threaten humanity as a whole. As crazy as that might sound, many leading AI researchers believe this is possible - as do many AI CEOs, who continue to build AI anyway.

But beyond theoretical fears of extinction, plenty are worried about the disruption AI will cause here and now. The biggest questions revolve around job losses, copyright, and misinformation.

When ChatGPT launched a year ago, many were shocked at the state of generative AI - not just for words and language, but also art, music, and code. If AIs can become experts at writing children's books or generating stock photography or building websites, we may be at risk of putting millions out of work. And that isn't a hypothetical - some industries are already seeing an impact, and some companies have already committed to reducing headcount and replacing those roles with AI.

A lot of the impact depends on how fast industries adopt AI. With more time, we can buffer job losses and help make room for new roles that haven't existed before - "prompt engineer" is a title that didn't exist two years ago. But industries and individuals whose livelihoods are threatened by AI are understandably worried, and are doing what they can to protect themselves, including taking legal action.

The main type of lawsuit is about copyright - the allegation is that these models were (illegally) trained on copyrighted works without consent. These cases are working their way through the courts, and the decisions have the potential to alter the trajectory of future AI products. But copyright is not the only legal question. Right now, the courts have yet to decide:

Whether it's legal to train an AI on data collected without consent - even if it's public.

Whether creatives whose work was used to train models are owed royalties.

Whether output that mimics a specific style, like Pixar or Dr. Seuss, infringes on IP.

Whether we can even own the copyright for content created by an AI in the first place.

We're slowly figuring these things out - for example, the U.S. Copyright Office has said that any AI-generated works are ineligible for copyright without significant human edits. But that has yet to be tested by the courts, and other legal areas are still extremely gray.

There's also an open question on how to tell if a piece of content is AI-generated. In the case of music or images, we can potentially embed invisible watermarks, so that we can tell later whether something came out of an AI. Some companies are already doing this - Google's latest music-generating models contain audio fingerprints, and OpenAI has an internal detector for images made with DALL-E.

But that won't stop many from being fooled by hoax images and cloned voices. Some scammers are already using AI-generated identities to steal money from unsuspecting family members. And in terms of written content, there is no reliable way to tell if something was written by an AI. Tools that claim to do so often generate lots of false positives, and can be easily bypassed with better prompting techniques.

What is the government doing about AI?

Related questions:

What was President Biden's executive order about?

Wasn't there some UK Summit?

If you've been vaguely paying attention to the news, you might have heard about President Biden's Executive Order. For the most part, it won't affect you directly (unless you work for the government). But it does set the stage for a lot more regulation in the future. Dozens of different agencies are now tasked with evaluating AI's potential impacts. Each cabinet department now has a Chief AI Officer. And in the coming months and years, we will see a vast number of agency-level actions and guidance on AI - though not necessarily much legislation from Congress.

But the US is not alone in regulating AI. The UK held an AI Safety Summit, which aimed to have governments and leading AI companies partner in their efforts to improve AI safety. But again, not many concrete policies came out of the event. While many governments are very publicly taking action and giving speeches on AI, they aren't yet writing laws or dictating policy. The main exception is the EU AI Act, which has been in the works for several years. It's currently being workshopped to incorporate the latest advances in generative AI - but is facing some hurdles.

How is AI going to impact me?

Related questions:

Is this all an overhyped bubble?

Is ChatGPT going to take my job?

Plenty of folks are skeptical about all of this AI stuff, and think it's a bubble or a fad. I can't really blame them - if you're not familiar with this tech, hearing about diffusion models or transformers or loss functions just sounds like gibberish. Even worse, the shape of AI hype looks suspiciously similar to crypto hype - a new technology that will disrupt everything and you need to buy my course to learn how to make money with it right now.

But AI (in my view) will reshape vast parts of our life and work. It won't be overnight, and it might not always be obvious - but it will happen. If you use Windows, Outlook, or Excel - you're going to run into Copilot. Same with Gmail and Duet/Gemini. And any number of apps and tools that you use are adding AI, from Salesforce to Snapchat and from Intercom to Instagram.

One study has estimated that 80% of US workers could have their work impacted by AI. That doesn't mean they will lose their job, but they might find that AI becomes a part of their tools. And even if you're not a white-collar worker, you may find that you're encountering more AI-generated content on social media or in advertising. Both Marvel and Coca-Cola have released content this year made with generative AI - and more is sure to follow.

I can't predict all of the ways that AI is going to impact you and me in the coming years - I'm not sure anyone can. But I remain cautiously optimistic about what this technology is capable of. And I would encourage everyone to try it for themselves - find out what it's capable of and how it can benefit you, specifically. We're still so early in all this. And while I'm at risk of adding to the hype - things are only going to get more impressive from here.

Happy holidays.
