GPT-4o and the illusion of AGI

Why speed and multimodality are becoming the name of the game.

On Monday this week, OpenAI unveiled GPT-4o. It wasn't the search engine everyone was expecting, but rather a new multimodal model that's replacing GPT-3.5 as ChatGPT's free model. Alongside GPT-4o came some new bells and whistles: a ChatGPT macOS app and GPTs being made free for everyone.

The response online has been mixed - some were expecting GPT-4.5 (or even GPT-5) and are underwhelmed if not dismissive, while others are diligently noting that "GPT-4o just dropped, here are the 12 best examples: 🧵".

On Tuesday, Google unleashed a stream of AI announcements - more on those in Friday's roundup. But the one that stood out to me was Gemini Flash - a model roughly as powerful as Gemini Pro but designed for low-latency tasks.

What fascinates me is the shift towards faster, but not necessarily “smarter” models, and why that's going to have more of an impact than people might think.

Meet GPT-4o

GPT-4o is a new model that is faster, cheaper, and better than its predecessor, GPT-4 Turbo. Unlike previous models, GPT-4o is natively multimodal across text, images, audio, and possibly video. That means it can take in audio and video directly and natively generate audio and images, not just text[1].

Here's what I mean: if you previously talked to ChatGPT in voice mode, it had to transcribe your speech, send that text to GPT-4, get a text response back, and then generate an audio clip from that script. Now a single model bypasses all that: it takes audio (and video) in and returns audio out.
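To make the difference concrete, here is a toy Python sketch. It is not how OpenAI actually built either version: every function below is a hypothetical placeholder, and the `tone` field stands in for everything a transcript throws away (emotion, pauses, who is speaking).

```python
from dataclasses import dataclass


@dataclass
class Audio:
    # Hypothetical stand-in for an audio clip: the words spoken plus how they were said.
    words: str
    tone: str


def transcribe(clip: Audio) -> str:
    # Hypothetical speech-to-text step: keeps the words, discards the tone.
    return clip.words


def text_model(prompt: str) -> str:
    # Hypothetical text-only LLM: it can only react to the words it receives.
    return f"Reply to: {prompt!r}"


def synthesize(text: str) -> Audio:
    # Hypothetical text-to-speech step: reads the reply back in a default voice.
    return Audio(words=text, tone="neutral")


def cascaded_voice_mode(clip: Audio) -> Audio:
    # Old-style pipeline: three separate models chained together, one hop after another.
    return synthesize(text_model(transcribe(clip)))


def native_multimodal_model(clip: Audio) -> Audio:
    # Hypothetical single audio-in, audio-out model: it still "hears" the tone.
    reply = f"Reply to: {clip.words!r} (heard as {clip.tone})"
    return Audio(words=reply, tone="matching " + clip.tone)


clip = Audio(words="I got the job!", tone="excited")
print(cascaded_voice_mode(clip))
print(native_multimodal_model(clip))
```

The point is the handoff: once speech is flattened into text, nothing downstream can recover how it was said, and every extra model in the chain adds its own delay.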

The implications are pretty big - besides a real-time translation demo, OpenAI has examples of the model designing fonts, creating 3D renders, identifying different speakers in a conversation, and more.

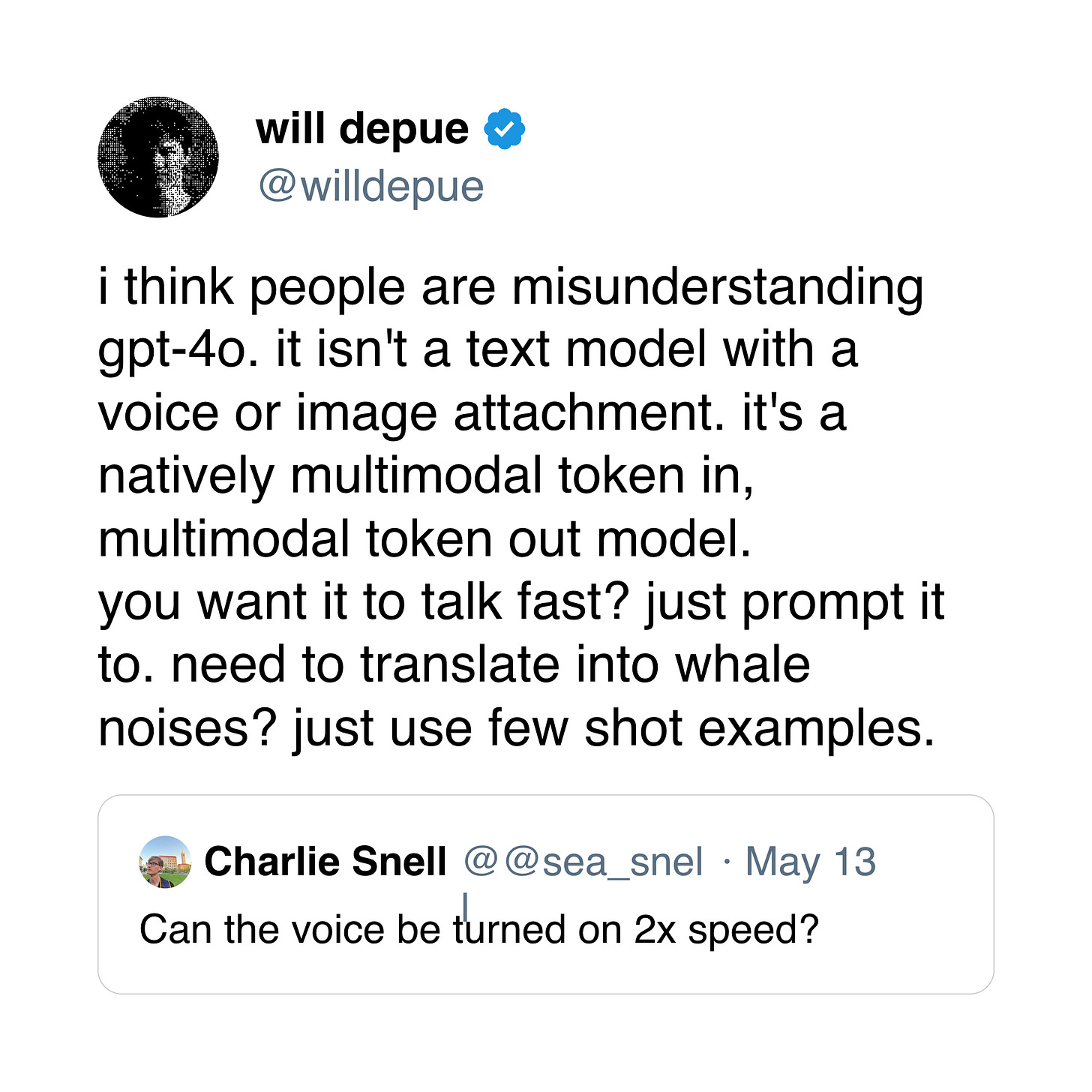


Yet despite these new capabilities, OpenAI is giving the model away for free, at speeds even faster than its previous flagship model. Why? And does the lack of a significantly “smarter” model (from either OpenAI or Google) mean that closed-source AI has hit a plateau?

Has AI peaked?

Many people have noticed that the state-of-the-art closed-source models have hit a bit of a wall. It's been over a year since GPT-4 was first released, and nobody has managed to do significantly better than it (slight benchmark improvements aside).

I do think that's a somewhat unfair expectation. It took three years to go from GPT-3 to GPT-4, and GPT-4 had essentially finished training by the time ChatGPT took the world by storm. Expecting benchmark-breaking models every year seems like a stretch: it takes time to find new research breakthroughs, and time to train huge models from scratch.

GPT-4o, by OpenAI’s own admission, took 18 months from idea to release. Even Google and Meta, with hundreds of billions to spend on compute, have only managed to match GPT-4, not surpass it, and it took them about 9 months to catch up. That likely says something about how far money alone can go.

But OpenAI also faces a second trade-off: how to allocate resources between power users and mass-market adopters. To OpenAI (and its competitors), broadening AI's overall reach is valuable, even with today's models.

Right now, ChatGPT, Gemini, and others[2] are jockeying to become the go-to name for "AI" in the minds of consumers. Clearly, there's an opportunity for a product to become synonymous with LLMs, much like "Google" became synonymous with "search engine" all those years ago. And so, even while new models are being trained and fine-tuned, getting more consumers to use existing models still represents both a major opportunity and a major challenge. With this week's launch, OpenAI has raised the stakes: a GPT-4-class model is now available to everyone, for free, natively on their phones and laptops[3].

So while I understand the skepticism about whether AGI will ever be achieved - especially in light of recent moves - I also wonder whether "AGI" is going to get here faster than we expect. Or at least, the perception of it.

The illusion of AGI

Something I've been saying for some time now is that we're on the brink of the perception of AGI. Definitions of AGI vary widely, ranging from "AI that's better than humans at any given task" to "the creation of a god machine." People much smarter than me are busy debating what form it will take and when we’ll get there. But I believe the average consumer will think of ChatGPT as AGI (even if they don't use that word) long before it can beat humans in every conceivable test and competition.

When I first used ChatGPT's voice mode (the older, clunkier version), I was struck by how quickly a part of my brain started thinking about ChatGPT as a person, even though I rationally understood it wasn't. Even though I knew it was a token prediction machine, even though the generated voice wasn't the most realistic, even though there were gaps and delays in the speech.

To be fair, I think the pandemic has done a lot of heavy lifting here. I can't count the Zoom calls and Google Meets I've been on where everyone had video turned off. After hundreds of hours of talking at a black square on my computer and getting a human voice in response, why would I treat ChatGPT any differently?

And in that medium, what makes us (or at least me) perceive someone as "intelligent" isn't only the content of their words. It's also the emotion in their voice, how they respond to what we say, and our ability to build a shared idea or experience together. In other words, my guess is that most people would consider Samantha from *Her* to be AGI, even if she couldn't create room-temperature superconductors or stable nuclear fusion reactions.

I think the big AI companies understand this. It's why we're seeing models focused on lower latency (to respond faster), smaller memory footprints (to run on phones and laptops), and multimodality (to move beyond a browser tab and into the real world). It's why we're seeing a ChatGPT desktop app (and an Apple partnership) and Google's Project Astra, a universal agent to help with everyday life.

It's possible we do get a version of ChatGPT that can do groundbreaking science and physics, and I hope we do. But I also think that capability will be for "AI power users," while the rest of us keep using AI as an assistant, therapist, doctor, lawyer, or any number of other roles that are possible today (or will be very soon). And to say those use cases aren't valuable because they aren't "AGI," I think, misses the point.

[1] One big caveat with both Google and OpenAI is that these features aren’t all available just yet. GPT-4o is available today in text mode, but the latest voice, image, and video features are expected to roll out soon.

[2] Claude, Copilot, Meta AI Assistant, whatever Apple eventually comes out with, etc.

[3] Big asterisk here: not all countries, not all laptops. Ironically, ChatGPT’s desktop app only supports Macs for now.