Case Study: Scaling customer intelligence

Analyzing 10,000 sales calls with Claude

Question: how many sales calls can you listen to and take notes on in a day?

Well, if you (conservatively) assume each one is thirty minutes, and you work an eight-hour day, that gives you sixteen calls. If, for some reason, you have absolutely zero work-life balance and only stop listening to sleep, then you'd get closer to 32 in a day. That’s 224 in a week.

I had two weeks to analyze ten thousand of them.

That's the challenge I faced when our CEO wanted to dig through our repository of sales calls to map the buyer's journey for various customer personas. Two years ago, this would have been a Herculean task. But now, it's something that a single AI engineer can accomplish in a fortnight.

Side note: why is creating a buyer's journey useful?

Historically, Pulley's ICP (ideal customer profile) has been "venture-backed startups," but that's pretty broad. Tailoring a sales and marketing strategy to a specific persona, such as a "CTO of an early-stage AI startup," can lead to much more success within that segment. Because Pulley has been around for a while, we had a sense of the different customer segments that were interested in the product - but we wanted to know exactly *why*, along with details like how they heard about Pulley and what alternatives they were considering.

The opportunity

When trying to glean customer insights, there's no beating talking to customers. In my case, I was doing the next best thing - reading transcripts of sales calls that Pulley's sales reps had done over the years.

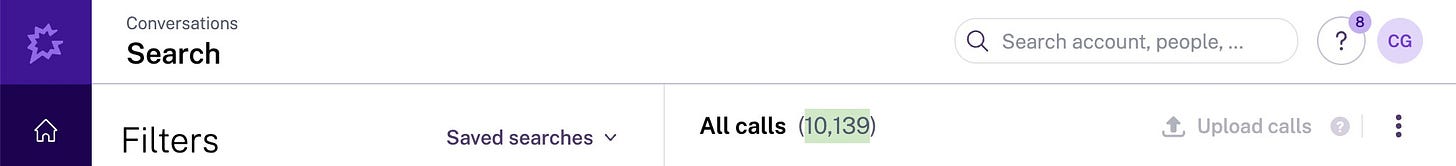

The only problem was the sheer scale of the data. A quick look at our Gong dashboard showed over 10,000 call transcripts in the database.

A manual approach to analyzing these calls would have been brutal (if not impossible):

Manually download and read through each transcript

Decide whether the prospect matches the target customer persona

Read through hundreds of lines looking for key insights and trends

Remember as much as possible while writing final reports on the customer journey

Ideally, document citations of key quotes from each call for future reference

Even if you had the time to read or listen to them all (at thirty minutes each, that's 5,000 hours, or 625 eight-hour days - nearly two years), the human brain simply isn't wired to process that much information while maintaining consistent analysis. It's like trying to read an entire library and then write a detailed summary - not just impossible, but fundamentally mismatched with how humans process information.
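The back-of-the-envelope math above is easy to check. This snippet just restates the assumptions already in the text (thirty minutes per call, eight-hour days, eight hours of sleep):

```python
# Throughput math from the introduction.
# Assumption (from the text): each call takes 30 minutes.
CALL_MINUTES = 30
TOTAL_CALLS = 10_000

def calls_per_day(hours_listening: int) -> int:
    """How many full calls fit into a given number of listening hours."""
    return hours_listening * 60 // CALL_MINUTES

workday = calls_per_day(8)            # a normal eight-hour workday
no_balance = calls_per_day(24 - 8)    # awake 16 hours, asleep 8
per_week = no_balance * 7

total_hours = TOTAL_CALLS * CALL_MINUTES / 60
workdays_needed = total_hours / 8

print(workday, no_balance, per_week)  # 16 32 224
print(total_hours, workdays_needed)   # 5000.0 625.0
```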

Luckily, we have modern LLMs, and this intersection of unstructured data and pattern recognition is a sweet spot for them.

Traditional approaches to analyzing customer calls typically fall into two categories: manual analysis (high quality but completely unscalable) and keyword analysis (scalable but shallow, missing context and nuance). Leveraging LLMs like ChatGPT and Claude represents a third approach.

The technical challenges

What looks simple in hindsight - "just use AI to analyze the calls" - actually required solving several interconnected technical challenges. Like any complex engineering problem, the solution emerged through careful choices about tradeoffs between accuracy, cost, and speed.

Accuracy

The first major decision was choosing the right model. While GPT-4o and Claude 3.5 Sonnet offered the most intelligence[1], they were also the most expensive and slowest options. The temptation to use smaller, cheaper models like GPT-4o mini or pplx-api[2] was strong – if they could do the job, the cost savings would be significant.

However, early experiments quickly showed the limitations of this approach. Smaller models produced an alarming number of false positives, often making confident but incorrect assertions. They would classify customers as crypto companies because a sales rep mentioned blockchain features, or decide a customer was the company founder despite zero supporting evidence in the transcripts.

These weren't just minor errors – they threatened the entire project's viability. Any savings on speed or cost would be pointless if the answers couldn't be trusted.

The breakthrough came from realizing that even the best models needed proper context and constraints. We developed a multi-layered approach:

Retrieval-augmented generation (RAG)[3] to provide additional context from third-party sources

Several prompt engineering techniques to mitigate hallucinations and reduce false positives

Having the LLM return structured JSON to track and display citations properly later on

This combination created a system that could reliably extract both accurate company details and meaningful customer journey insights.
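Here's a minimal sketch of how structured JSON and a false-positive guard can work together: require a verbatim quote for every claim, then check those quotes against the transcript before trusting the claim. The response shape and field names below are hypothetical illustrations, not the schema we actually used.

```python
import json
from dataclasses import dataclass

# Hypothetical structured output: each field carries a value plus the
# verbatim transcript quotes that support it. null means "unknown".
EXAMPLE_RESPONSE = """
{
  "is_founder": {"value": null, "evidence": []},
  "industry": {"value": "fintech",
               "evidence": ["we're building payments infrastructure for banks"]}
}
"""

@dataclass
class Finding:
    field: str
    value: object
    evidence: list[str]

def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so quote matching is forgiving."""
    return " ".join(text.lower().split())

def parse_findings(raw: str, transcript: str) -> list[Finding]:
    """Parse the model's JSON and keep only claims backed by real quotes.

    Quotes that don't appear in the transcript are dropped; a claim left
    with no supporting quote is downgraded to None ("unknown").
    """
    data = json.loads(raw)
    haystack = _normalize(transcript)
    findings = []
    for field, item in data.items():
        quotes = [q for q in item.get("evidence", []) if _normalize(q) in haystack]
        value = item.get("value") if quotes else None
        findings.append(Finding(field, value, quotes))
    return findings

transcript = (
    "Rep: What are you building?\n"
    "Prospect: We're building payments infrastructure for banks."
)
for f in parse_findings(EXAMPLE_RESPONSE, transcript):
    print(f.field, f.value, len(f.evidence))
```

Keeping the quotes in the JSON also means every extracted fact carries its own citation, which is what makes linking back to individual calls possible later.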

Scalability

Using Claude 3.5 Sonnet, though, meant opting for a pretty expensive model. To make matters worse, because we wanted to pull lengthy customer quotes out of the transcripts, we often ran into the 4K token output limit, meaning we'd have to send our entire prompt again to continue the conversation.
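For context, this is roughly what "continuing the conversation" involves. The Messages API reports `stop_reason == "max_tokens"` when output is cut off, and one common pattern is to resend the whole prompt with the partial answer as the final assistant turn so the model picks up where it stopped. The `send` function here is a hypothetical stand-in for a real API call, so the sketch runs offline.

```python
from typing import Callable

# send(messages) -> (text, stop_reason). Hypothetical stand-in for a real
# Messages API call; stop_reason values mirror the API's ("max_tokens", "end_turn").
SendFn = Callable[[list[dict]], tuple[str, str]]

def complete_long_answer(send: SendFn, prompt: str, max_rounds: int = 5) -> str:
    """Keep requesting until the model stops for a reason other than max_tokens."""
    answer = ""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_rounds):
        text, stop_reason = send(messages)
        answer += text
        if stop_reason != "max_tokens":
            break
        # The API rejects a prefilled assistant turn ending in whitespace.
        answer = answer.rstrip()
        # The full prompt (transcript included) is sent again every round,
        # which is exactly the cost problem described above.
        messages = [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    return answer

# Usage with a fake sender that returns the answer in two chunks.
chunks = iter([("First half of the summary,", "max_tokens"),
               (" second half.", "end_turn")])
print(complete_long_answer(lambda msgs: next(chunks), "Summarize this call..."))
# First half of the summary, second half.
```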

To overcome both issues, we leveraged multiple beta features to dramatically lower our costs and improve our analysis speeds.

Prompt Caching: By marking parts of our prompts to cache ahead of time, we cut costs by up to 90% and latency by up to 85%. Most of each prompt was the call's transcript, so we could cache it once and run multiple rounds of analysis against it.

Longer Outputs: Access to 8K-token outputs (double the default) let us generate extended customer insight summaries in a single pass. It wasn't a major factor in reducing latency, but it was a nice quality-of-life improvement that spared us unnecessary API calls.
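Here's a sketch of what a cache-friendly request body can look like. The transcript goes in a system block marked with `cache_control`, and only the final user question changes between requests, so every question about the same call shares one cached prefix. The model ID, prompt wording, and questions are placeholders; at the time, both features were enabled through `anthropic-beta` request headers, so check the current docs before relying on the exact shape.

```python
# Sketch of Messages API request bodies that share a cached transcript prefix.
# Assumptions: model ID, system prompt, and questions are placeholders. A body
# could be passed to the official SDK as client.messages.create(**body).
MODEL = "claude-3-5-sonnet-20240620"  # assumed model ID

SYSTEM_PROMPT = (
    "You analyze B2B sales call transcripts. Answer only from the transcript; "
    "if the transcript does not say, answer null."
)

def build_request(transcript: str, question: str, max_tokens: int = 8192) -> dict:
    """Build one request; only the user question differs between calls."""
    return {
        "model": MODEL,
        "max_tokens": max_tokens,  # 8K outputs were a beta feature at the time
        "system": [
            {"type": "text", "text": SYSTEM_PROMPT},
            {
                "type": "text",
                "text": f"<transcript>\n{transcript}\n</transcript>",
                # Everything up to and including this block is the cached prefix.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        "messages": [{"role": "user", "content": question}],
    }

questions = [
    "What is the prospect's role and company stage? Return JSON.",
    "How did the prospect hear about us? Quote the transcript.",
]
bodies = [build_request("Rep: ...\nProspect: ...", q) for q in questions]
```

Anthropic's documentation describes a minimum cacheable prompt length and a short cache lifetime, so in practice it makes sense to send all the questions about one transcript back to back.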

Taken together, these features let us conduct a $5,000 analysis for closer to $500, and get results in hours, not days.
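To see why caching pays off more the more questions you ask per transcript, here's a rough input-cost model. The per-token prices are assumed Claude 3.5 Sonnet list prices (cache writes at 1.25x the base input rate, cache reads at 0.1x), and the workload numbers are hypothetical, not the project's actual token counts.

```python
# Rough input-token cost model for asking several questions about one transcript.
# Assumed list prices (USD per million input tokens); output tokens and the
# short question text are ignored for simplicity.
BASE_INPUT = 3.00
CACHE_WRITE = 3.75   # 1.25x base: the first request writes the cache
CACHE_READ = 0.30    # 0.1x base: later requests read from it

def input_cost(transcript_tokens: int, questions: int, cached: bool) -> float:
    mtok = transcript_tokens / 1_000_000
    if not cached:
        # Every question resends, and re-bills, the full transcript.
        return questions * mtok * BASE_INPUT
    return mtok * CACHE_WRITE + (questions - 1) * mtok * CACHE_READ

# Hypothetical workload: 10,000 calls, ~10K tokens each, 10 questions per call.
calls, tokens, qs = 10_000, 10_000, 10
uncached = calls * input_cost(tokens, qs, cached=False)
cached = calls * input_cost(tokens, qs, cached=True)
print(f"${uncached:,.0f} vs ${cached:,.0f}")  # $3,000 vs $645
```

With only a handful of questions the one-time cache write eats into the savings; as the number of rounds per transcript grows, the cached cost approaches 10% of the uncached cost, which is where the "up to 90%" figure comes from.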

Usability

Of course, it wasn't enough to process the sales transcripts and store them in a database - they had to be accessible to non-technical users. The data wasn't particularly valuable in its raw format, so we added a few features to make things more usable.

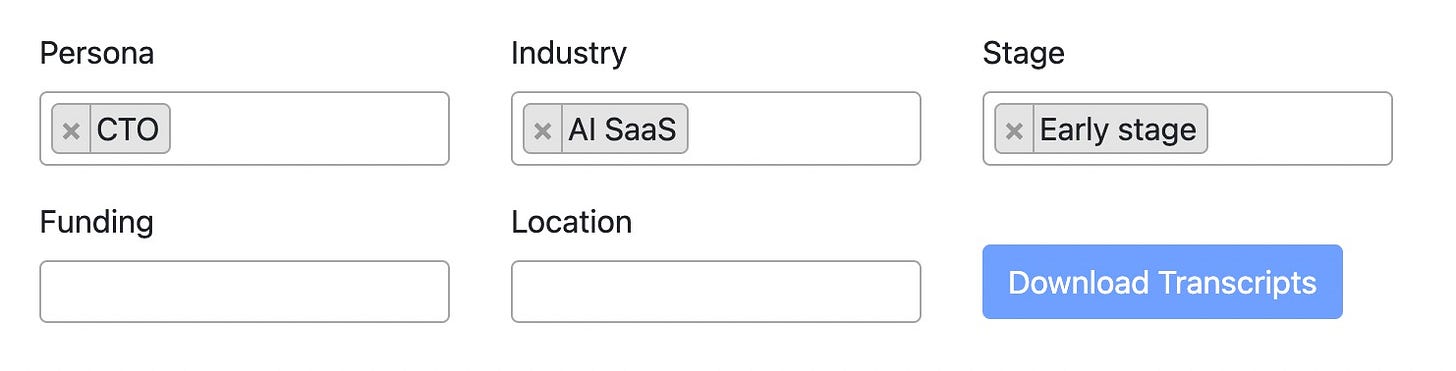

First, we made it filterable. Users could filter the calls by customer role, company stage, industry, geolocation, and more. That way, if we wanted to target different personas in the future, we would be able to replicate our analysis quickly.
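A minimal sketch of that kind of filtering, using SQLite from the Python standard library. The schema, column names, and sample rows are hypothetical; the point is filtering on model-extracted persona fields with parameterized queries and a whitelist of filterable columns.

```python
import sqlite3

# Hypothetical schema: one row per call, holding persona attributes the model extracted.
SCHEMA = """
CREATE TABLE calls (
    call_id   TEXT PRIMARY KEY,
    role      TEXT,
    stage     TEXT,
    industry  TEXT,
    region    TEXT
)
"""
FILTERABLE = {"role", "stage", "industry", "region"}

def filter_calls(conn: sqlite3.Connection, **criteria: str) -> list[str]:
    """Return IDs of calls matching every criterion (exact match)."""
    unknown = set(criteria) - FILTERABLE
    if unknown:
        # Column names can't be parameterized, so only whitelisted ones are allowed.
        raise ValueError(f"not filterable: {sorted(unknown)}")
    where = " AND ".join(f"{col} = ?" for col in criteria) or "1 = 1"
    sql = f"SELECT call_id FROM calls WHERE {where} ORDER BY call_id"
    return [row[0] for row in conn.execute(sql, tuple(criteria.values()))]

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO calls VALUES (?, ?, ?, ?, ?)",
    [("c1", "CTO", "Seed", "AI", "US"),
     ("c2", "CEO", "Series A", "Fintech", "UK"),
     ("c3", "CTO", "Series A", "AI", "US")],
)
print(filter_calls(conn, role="CTO", industry="AI"))  # ['c1', 'c3']
```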

Second, the transcripts were downloadable. With Cursor's help, I added buttons to download formatted transcripts. This allowed users to perform their own analysis on the fly. In fact, users ended up repeatedly downloading transcripts and creating Claude Projects with them to start asking questions.
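As an illustration of what a "formatted transcript" export might involve, here's a small sketch that turns timestamped utterances into a plain-text file suitable for uploading to a Claude Project. The `Utterance` structure and output format are hypothetical, not the exact format our tool produced.

```python
from dataclasses import dataclass

@dataclass
class Utterance:
    # Hypothetical shape of one transcript segment.
    speaker: str
    start_seconds: int
    text: str

def format_transcript(title: str, utterances: list[Utterance]) -> str:
    """Render a title line plus one '[mm:ss] Speaker: text' line per utterance."""
    lines = [f"# {title}", ""]
    for u in utterances:
        minutes, seconds = divmod(u.start_seconds, 60)
        lines.append(f"[{minutes:02d}:{seconds:02d}] {u.speaker}: {u.text}")
    return "\n".join(lines) + "\n"

out = format_transcript(
    "Acme Corp intro call",
    [Utterance("Rep", 5, "Thanks for joining."),
     Utterance("Prospect", 72, "Happy to be here.")],
)
print(out)
```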

Third, we generated a final report for the main personas that we wanted to analyze. Using specific citations, we were able to link references back to individual sales calls and list the number of relevant calls for each aspect of the customer journey. We were triple-dipping on our Claude usage at this point - in addition to the API and Projects, the Claude chat app did a great job generating artifacts from our extracted data.
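The "number of relevant calls per aspect" figure can be computed directly from the extracted citations. A minimal sketch, assuming hypothetical citation records of the form (call ID, journey aspect, quote); it counts distinct calls rather than quotes, so one talkative prospect doesn't inflate a theme.

```python
from collections import defaultdict

# Hypothetical citation records extracted per call: (call_id, aspect, quote).
citations = [
    ("c1", "discovery_channel", "A friend at another startup recommended you."),
    ("c3", "discovery_channel", "Our investor mentioned you."),
    ("c3", "discovery_channel", "I'd also seen you online."),
    ("c3", "alternatives", "Right now we're using spreadsheets."),
]

def summarize(records):
    """Map each journey aspect to (number of distinct calls, sorted call IDs)."""
    calls_by_aspect = defaultdict(set)
    for call_id, aspect, _quote in records:
        calls_by_aspect[aspect].add(call_id)
    return {
        aspect: (len(ids), sorted(ids))
        for aspect, ids in sorted(calls_by_aspect.items())
    }

print(summarize(citations))
# {'alternatives': (1, ['c3']), 'discovery_channel': (2, ['c1', 'c3'])}
```

Keeping the call IDs alongside the counts is what lets each line of a report link back to the underlying calls.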

The impact

One thing that really surprised me about this project was its wide-ranging impact. What started as a strategic request from the CEO for customer journey insights quickly evolved into something more significant.

For example, the sales team used the tool to replace manual transcript downloads, saving hours each week. The marketing team pulled key quotes about what customers loved about Pulley, helping to complete a positioning exercise. Our VP of Marketing, in particular, noted that this sort of analysis usually takes "tens if not hundreds of hours" to do.

Beyond the time savings, we also saw our mountains of unstructured data in a new light. Teams started asking questions they wouldn't have considered before, because the manual analysis would have been too daunting.

The learnings

There were a few key takeaways for me from this project:

Models matter. As much as the AI community believes in open-source and small language models, it was clear that Claude 3.5 Sonnet and GPT-4o were able to handle this when other models couldn't. We decided to go with Claude because of its prompt caching - at the time, GPT-4o didn't support the feature. Of course, the right tool isn't always the most powerful one; it's the one that best fits your needs.

Don't neglect the scaffolding. Despite AI's capabilities, there were still many wins gained from "traditional" software engineering, like using JSON structures and having good database schemas. That's what AI engineering is at its core - knowing how to build effective software around LLMs. But it's important to remember that AI must still be thoughtfully integrated into existing architectures and design patterns.

Consider additional use cases. By building a simple yet flexible tool, what could have been a one-off analysis became a company-wide resource. Doing this well takes more than good engineering chops - it requires being able to work cross-functionally and understand business processes. In truth, I was lucky enough to discover these use cases after the fact, but the project opened my eyes to what they might look like in the future.

Perhaps most importantly, this project showed how AI can transform seemingly impossible tasks into routine operations. It's not about replacing human analysis – it's about augmenting that analysis and removing human-based bottlenecks. With AI, services can now support software-like margins.

That's the real promise of tools like Claude: not just doing things faster, but unlocking new possibilities. And as we continue to discover exactly what these models are good for, the best applications will be where technical capabilities meet practical needs.

If you’re interested in learning more about building with LLMs, I’m working on an AI engineering course, and I want your feedback to help tailor the content. Sign up for updates here.

[1] o1-preview hadn't been released at the time I built this.

[2] One approach that I wanted to test was using Perplexity's API models - the hope was that with their knowledge of the internet, they could enrich information about the transcripts to fill in any gaps. In practice, it seems that their hosted APIs don't access the internet in the same way that their flagship product does - meaning the results were less than stellar.

[3] RAG reduced hallucinations and allowed us to build a more sophisticated understanding of context. When a sales rep mentioned “Series A funding,” we could cross-reference this with company databases to verify the funding stage. This meant we could confidently segment conversations even when key details were implied rather than explicitly stated - much like how human analysts pick up on context clues.
