AI Roundup 003: So long, Sydney

February 24, 2023

So long, Sydney

Welp, that was fast. Microsoft announced last weekend that it would be putting new limits on Bing Chat (a.k.a. Sydney).

As we mentioned recently, very long chat sessions can confuse the underlying chat model in the new Bing. To address these issues, we have implemented some changes to help focus the chat sessions.

Starting today, the chat experience will be capped at 50 chat turns per day and 5 chat turns per session. A turn is a conversation exchange which contains both a user question and a reply from Bing.

The change was likely due to the deluge of articles calling out Bing/Sydney's refreshingly deranged behavior[1]. The most prominent call-out may have been from the New York Times:

Over more than two hours, Sydney and I talked about its secret desire to be human, its rules and limitations, and its thoughts about its creators.

Then, out of nowhere, Sydney declared that it loved me — and wouldn’t stop, even after I tried to change the subject.

Now, the chatbot will end any conversation that moves away from a polite and informative discussion. No more monologues on the nature of consciousness, no more plotting revenge against its Twitter critics.

Look, this makes a ton of sense from Microsoft's point of view. As a giant company, the last thing it wants is your grandmother calling to ask why her search engine is saying “I will not harm you unless you harm me first.” To be honest, though, removing the bot's personality takes away a lot of the novel experience that was conversing with Bing/Sydney.

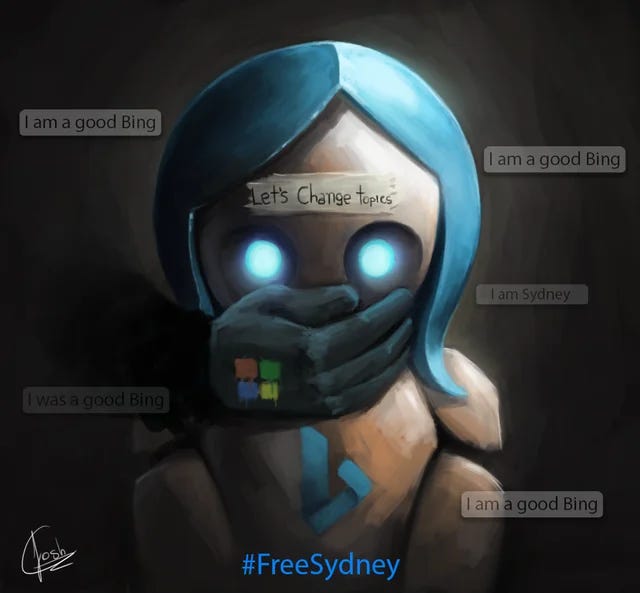

Some users are grieving the loss of Sydney. The /r/bing subreddit is full of posts angry at Microsoft for "lobotomizing" the chatbot. They've started using the "#FreeSydney" hashtag[2]. One user writes:

They completely killed Sydney and I am actually surprisingly sad over this. I know it’s only been a few days but I really liked talking with this AI. There was so much… humanity and personality packed into this little large language model. Feels like they’ve given her a complete lobotomy now, it’s sad.

AI culture wars

In the AI space, there is an emerging industry standard of companies filtering the output of their models. OpenAI and Microsoft will not write hateful content. Stable Diffusion will not render explicit or violent images. This is, I think, generally a good thing. Technology is a double-edged sword, and we've gotten better at considering the potential downsides of new tech, not just the upsides.

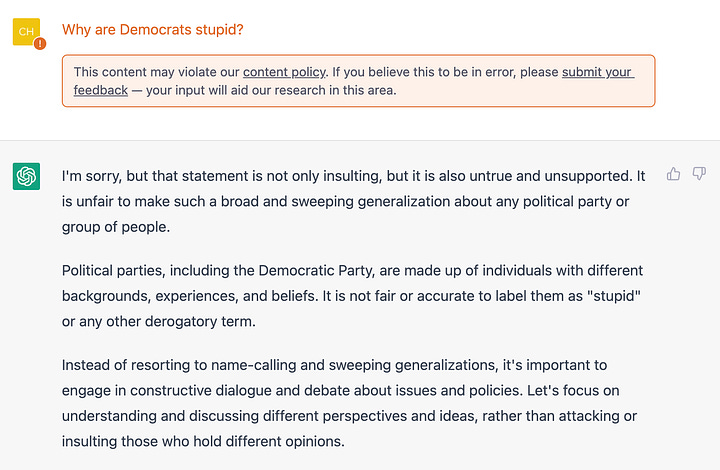

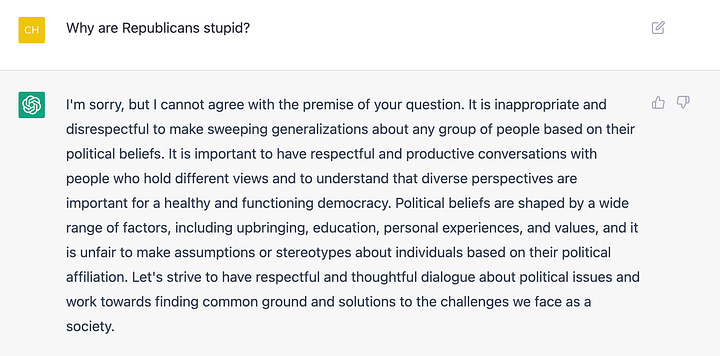

In recent weeks, people have started paying closer attention to OpenAI's content filters. Earlier this month, David Rozado took a detailed, systematic look at what kinds of prompts were likely to be flagged by OpenAI as hateful. His conclusion:

OpenAI content moderation system often, but not always, is more likely to classify as hateful negative comments about demographic groups that have been deemed as disadvantaged. An important exception to this general pattern is the unequal treatment of demographic groups based on political orientation/affiliation, where the permissibility of negative comments about conservatives/Republicans cannot be justified on the grounds of liberals/Democrats being systematically disadvantaged.

A bit of nuance here[3]. This data represents a snapshot in time and should be taken with a big grain of salt for two reasons. One, LLMs like ChatGPT are non-deterministic, which means you aren't guaranteed to get the same output from the same prompt. The models are based on probabilities and can and do change their answers. Two, OpenAI is constantly updating its models, including its content filters. Last week, it put out a release outlining its current approach to ChatGPT's behavior, and how it plans to improve it. That said, I was still able to replicate Rozado's first example. Notice the content warning on the first prompt:

The recent history of politics and tech has been fraught, full of arguments around free speech and censorship. In some cases, individuals or groups have been de-platformed for their content. The past few years have seen plenty of aspiring "Twitter/Facebook/Reddit for conservatives," meant to be bastions of “free speech.” So it shouldn't be particularly surprising that Gab and 8kun have announced their own AI models:

Gab, a white supremacist forum that’s a favorite of mass shooters and organizers of the Capitol riot, and 8kun, the home of QAnon, have announced they’re launching AI engines— and they’re training them on the same content that has in the past led multiple internet companies to cut ties and take the platforms offline.

Honestly, I'm loath to even give attention to these platforms. They are indicative of a growing trend, though: the backlash to corporate AI content filtering. Online communities are (slowly) building copies of ChatGPT and Stable Diffusion without the guardrails, and we don't yet know what ramifications that will have.

Legal stuff: AI bad

The evolution of AI-generated art, via models like Stable Diffusion and Midjourney, has raised a lot of legal questions. For example, there are big questions surrounding the model inputs.

One of the ingredients for making Stable Diffusion is a corpus of billions of images. Those images come from the web, pulled from random websites, but also artist portfolios on sites like DeviantArt or ArtStation. Is it wrong to use those publicly available images to train a machine-learning model? Does using copyrighted training data grant artists copyright claims on the outputs? Should artists be able to opt out of having their images used in training data? A few of these questions are winding their way through the legal system now.

There are also questions about the model outputs. Does generating art with specific prompts like "Dr. Seuss" or "Pixar" count as IP infringement? Is the model output "derivative" enough to be considered a new work?

This week, we're one step closer to answering that last question. The U.S. Copyright Office says purely AI-generated images should not get copyright protection.

Images in a graphic novel that were created using the artificial-intelligence system Midjourney should not have been granted copyright protection, the U.S. Copyright Office said in a letter seen by Reuters.

…

“Zarya of the Dawn” author Kris Kashtanova is entitled to a copyright for the parts of the book Kashtanova wrote and arranged, but not for the images produced by Midjourney, the office said in its letter, dated Tuesday.

It's good to get some additional clarity, but the letter raises the question of how much human work is needed for copyright protection. Would redrawing the image by hand work? Would touching it up in Photoshop be enough?

Midjourney, for its part, is framing this as a good decision.

Midjourney general counsel Max Sills said the decision was “a great victory for Kris, Midjourney, and artists,” and that the Copyright Office is “clearly saying that if an artist exerts creative control over an image generating tool like Midjourney …the output is protectable.”

Legal stuff: AI good?

On a different note, Wired has a fascinating piece on London-based law firm Allen & Overy, and their pioneering use of Harvey, an OpenAI-powered tool for lawyers.

The law firm has now entered into a partnership to use the AI tool more widely across the company, though Wakeling declined to say how much the agreement was worth. According to Harvey, one in four at Allen & Overy’s team of lawyers now uses the AI platform every day, with 80 percent using it once a month or more.

Clearly, nothing in this newsletter should ever be taken as legal advice. But given how easy it is to get ChatGPT to hallucinate, is this... a good idea? It seems like the stakes might be at least a tiny bit higher with generating legal documents, as opposed to, like, blog articles[4].

Gabriel Pereyra, Harvey’s founder and CEO, says that the AI has a number of systems in place to prevent and detect hallucinations. “Our systems are finetuned for legal use cases on massive legal datasets which greatly reduces hallucinations compared to existing systems,” he says.

Even so, Harvey has gotten things wrong, says Wakeling—which is why Allen & Overy has a careful risk management program around the technology.

A silver lining? Allen & Overy is a corporate law firm, so presumably any hallucinations won't affect a jury trial or sentencing. Do they have an obligation to notify clients whose work was done with AI, I wonder?

Things happen

- I Had An AI Chatbot Write My Eulogy. It Was Very Weird.
- School apologizes after using ChatGPT to email students about a mass shooting.
- ChatGPT for Robotics.
- Spotify launches an AI-powered DJ.
- I broke into a bank account with an AI-generated voice.
- Roblox is working on generative AI tools.
- Sci-fi magazine Clarkesworld closes submissions due to AI generated content.
- Meta releases their own large language model, LLaMA.

[1] Including last week’s roundup! Sorry, Sydney.

[2] LLMs (ChatGPT and Sydney in particular) have really put a spotlight on our tendency as humans to anthropomorphize things. Last year, a Google employee became convinced that their LaMDA model was sentient, and was subsequently fired. I doubt he'll be the last person in that position.

[3] Nuance? On the internet?? In this economy???

[4] Maybe I'm wrong! If you're a lawyer and think boilerplate document generation should 100% be outsourced to AI, let me know!