AI Roundup 079: Don't call it an acquisition

August 9, 2024.

Workshops!

I am incredibly excited to announce an upcoming Midjourney workshop from Daniel Nest! Daniel has generated over 15,000 images with Midjourney, and I always learn something new from his prompt tutorials.

Paid subscribers can join us on August 30th for a live, interactive workshop on how to make the most of Midjourney, plus a Q&A for your burning text-to-image questions.

Don't call it an acquisition

Last week, the co-founders of Character.AI announced they would join Google, while most employees are staying put.

Why it matters:

It's difficult to spin this as a good thing for Character.AI, which has raised $150M in venture funding. Investors seem to be left holding the bag while executives and researchers jump ship.

This type of deal is quickly becoming part of Big Tech's playbook, after similar moves from Microsoft/Inflection and Amazon/Adept.

It could also be a way for tech giants to avoid antitrust scrutiny: hiring a dozen at-will employees isn't usually seen as monopolistic.

Elsewhere in the FAANG free-for-all:

Microsoft and Palantir are partnering to sell government cloud and AI tools, including GPT-4, to US defense and intelligence agencies.

Google unveiled Gemini-based features for Google Home, including smarter Nest camera captions and an improved Assistant.

The Apple Intelligence system prompts include directives like "Do not hallucinate" and "Do not make up factual information."

And Meta is offering Hollywood celebrities millions of dollars for the right to use their voices in AI projects, with SAG-AFTRA agreeing to the terms.

Elsewhere in AI regulation:

The UK CMA opened a formal merger inquiry into Amazon's Anthropic investment.

Ireland's DPC launched High Court proceedings against X (formerly Twitter) over concerns about how it processes EU user data for AI training.

The architect of the European Commission's initial AI Act proposal says its reach became too broad and may entrench big US tech companies.

And Fei-Fei Li, the "Godmother of AI," speaks out against California's AI safety bill (SB 1047), arguing it would burden developers and academia while failing to address potential AI harms.

People are worried about AI stocks

In recent weeks, investors have become increasingly alarmed about AI spending and valuations. On Monday, their fears materialized as the "Magnificent Seven" (Big Tech, Nvidia, and Tesla) lost nearly $1 trillion in value.

The bottom line:

Some are now saying "the bubble has burst," pointing to recent gaffes and skepticism.

Nvidia, in particular, has been hit hard after new reports that its next-gen Blackwell AI chips will be delayed due to design flaws.

However, the rest of Big Tech is undaunted: in recent earnings calls, tech execs described plans to spend even more on AI in the coming months.

Elsewhere in Nvidia:

Leaked documents reveal that Nvidia greenlit scraping videos from Netflix and YouTube to train an unreleased foundation model, despite staff concerns.

The New York Times looks at how Nvidia, which controls roughly 90% of the AI chip market, is racing to staff offices in response to international regulatory scrutiny.

And a new investigation finds an active trade in Nvidia chips in China despite US restrictions, with some military-tied Chinese organizations having bought restricted chips.

Elsewhere in AI anxiety:

Hollywood faces a growing divide over AI usage as studios push for more AI tools and unions push for more guardrails.

An investigation reveals the ecosystem behind Facebook's "AI slop" problem, from YouTubers to WhatsApp groups to paid services.

Washington officials are increasingly concerned about the potential for AI-formulated bioweapons as a national security threat.

And secretaries of state from five US states urged Elon Musk to address X's Grok AI assistant spreading false election information.

Medium risk

OpenAI released GPT-4o's system card, with plenty of details on its new risks and capabilities1.

Between the lines:

A system card (or "model card") documents a model's architecture, training, performance, and safety. It's like a "Nutrition Facts" label for AI.

GPT-4o's multimodal nature opens up new kinds of risks, including unauthorized voice cloning, playing or singing copyrighted content, and users becoming emotionally attached to the AI.

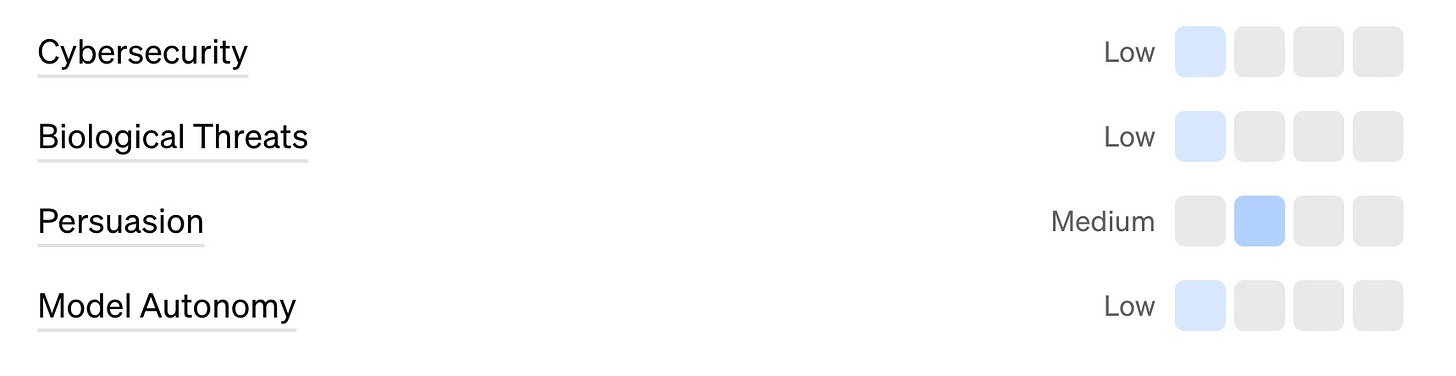

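Ultimately, OpenAI classified GPT-4o as "medium risk" under its Preparedness Framework, which evaluates models for cybersecurity, CBRN threats (including bioweapons), persuasion, and model autonomy. The overall rating reflects the highest-scoring category; for GPT-4o, that was persuasion.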

Elsewhere in OpenAI:

OpenAI co-founder John Schulman departs to join Anthropic, as fellow co-founder Greg Brockman takes a sabbatical.

The WSJ details a tool for detecting ChatGPT-created text that OpenAI has had for about a year but hasn't yet released.

Elon Musk revives his OpenAI lawsuit, alleging that OpenAI, Sam Altman, and Greg Brockman breached the company's founding contract.

And the company adds Carnegie Mellon professor Zico Kolter to its board of directors.

Things happen

Humane’s daily returns are outpacing sales. How Intel passed on a chance to buy 15% of OpenAI for $1B. Automattic launches AI writing tool for WordPress blogs. Yang Zhilin, the founder of China's highest-valued AI unicorn. Reddit plans to test AI-powered search results later this year. ByteDance joins OpenAI's Sora rivals with AI video app launch. Former OpenAI board member Helen Toner builds reputation as AI expert in DC. Stability AI announces Stable Fast 3D for rapid 3D asset generation. Google pulls its terrible pro-AI "Dear Sydney" ad after backlash. Reddit hentai community fight over AI-generated monster girls. UK government shelves £1.3B tech and AI plans. AI threatens to upend India's $250B outsourcing industry. macOS may get Apple Intelligence in the EU, unlike iOS and iPadOS. Websites use AI age scanners to verify users' ages via webcam. AI agents but they're working in big tech. The danger of superhuman AI is not what you think. GPT-4 LLM may simulate people well enough to replicate social science experiments. Don't pivot into AI research. Home insurance decisions are now being made using drones and AI. Jeff Bezos' family office is betting big on AI. Replacing my right hand with AI. Silicon Valley parents are sending kids to AI-focused summer camps. Most AI startups are service businesses in disguise. AiOla open-sources ultra-fast speech recognition model. On the “ARC-AGI” $1M challenge.