AI Roundup 025: AI watchmen

July 28, 2023

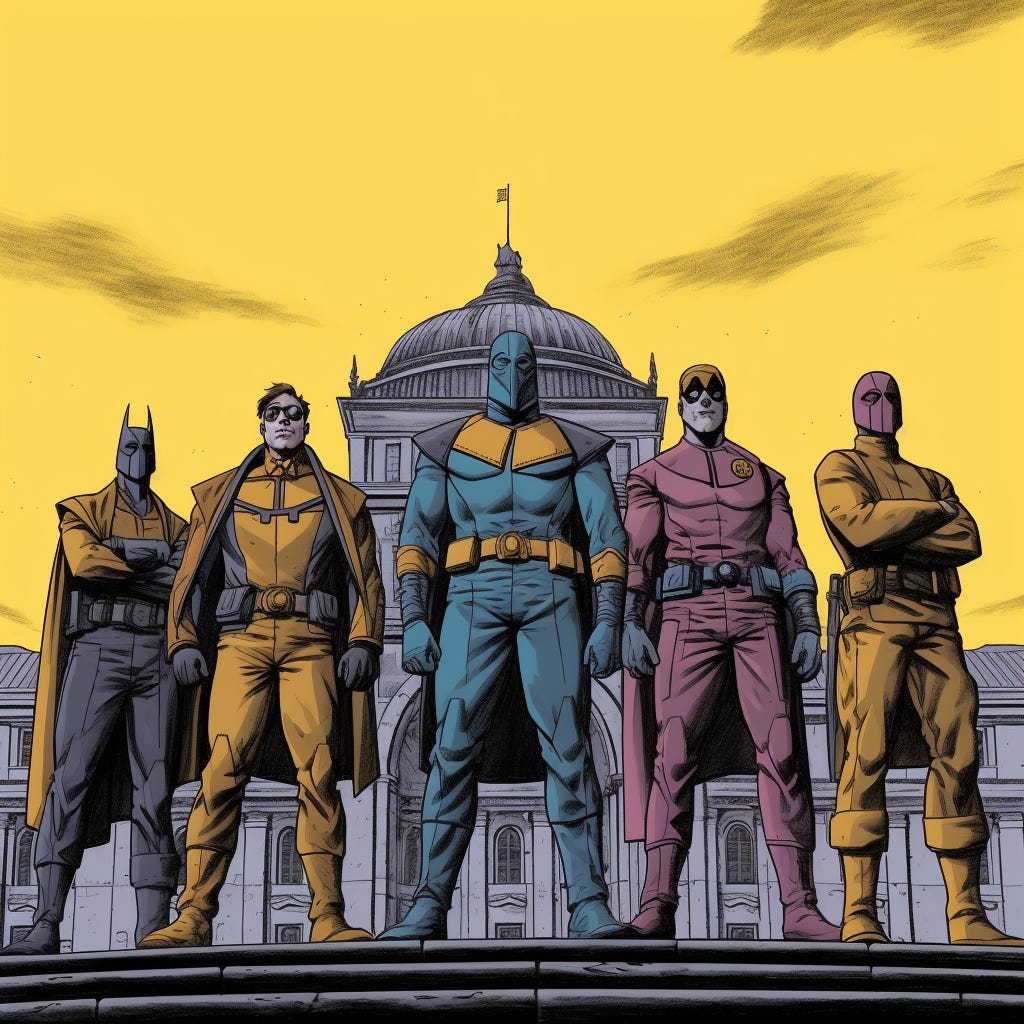

AI watchmen

Anthropic, Google, Microsoft, and OpenAI announced the formation of the Frontier Model Forum. It’s a new industry group focused on the safe and responsible development of “frontier AI models.” Frontier models are general-purpose, state-of-the-art machine learning models.

Why it matters:

Altman, Nadella, Pichai, et al. are racing to get ahead of the AI backlash. This means sharing notes on AI safety research and working with regulators to shape new laws.

While the Forum is somewhat self-serving, I'm cautiously optimistic. AI safety is important, and the current "launch first, evaluate harms later" strategy is reckless at best.

The key is getting more companies to join in, though it’s a little unclear how open source fits in. Notably absent: Meta, Midjourney, and Stability AI.

Meanwhile, a parade of companies announced (non-frontier) chatbots:

DoorDash wants to speed up food orders.

LinkedIn is building a coach for job applicants.

Shopify's Sidekick will help e-commerce sellers.

Apple is already using its chatbot for internal work.

Stack Overflow launched OverflowAI for programmers.

Intel's CEO plans to build AI into every possible platform.

And AWS debuted HealthScribe to summarize patient visits.

War, huh.

The military is no stranger to AI: from drones to intel, the armed forces already use AI and ML in various ways. But it's best not to forget that war, like everything else, stands to be transformed by AI.

What to watch:

A US Navy task force is using AI to police the Gulf of Oman for pirates, with increasingly capable autonomous systems.

Munich-based Helsing AI showcased its "operating system for warfare," which turns sensor and weapons data into real-time visualizations.

And a three-star Air Force general said the US military’s AI is more ethical than adversaries’ because we are a “Judeo-Christian society,” for whatever that's worth.

No biz like showbiz

As the Hollywood writing and acting strikes continue, AI has gone from a contract line item to a full-blown existential crisis. While it's easy to say that AI should never replace actors, the inconvenient truth is that the genie is out of the bottle.

Between the lines:

Bloomberg has a deep dive on AI's role in the strikes, with interviews from studio execs and screenwriters. It's a nuanced look at what both sides want and how we got to this standoff.

There's also a Q&A with Justine Bateman, a member of the DGA, the WGA, and SAG-AFTRA, on how AI stands to change the industry.

But not all studios are waiting around: Netflix and Disney are posting more AI-related roles, with salaries up to $900,000 per year!

Elsewhere in AI anxiety:

AI pioneer Yoshua Bengio, Anthropic CEO Dario Amodei, and Berkeley CS professor Stuart Russell warn Congress that AI could cause serious harms in a few years.

OpenAI admits it can't reliably detect AI-written text, and shuts down its AI detection tool.

A profile of Joseph Weizenbaum, who created the first chatbot in 1966 but turned against AI, believing the computer revolution constricted our humanity.

Things happen

Stable Diffusion XL 1.0. America already has an AI underclass. "A relationship with another human is overrated." JPMorgan warns of an AI bubble. Job applicants battle AI resume filters with white fonts. OpenAI launches a ChatGPT Android app. Transformers: profiling the "Attention is all you need" coauthors. AI porn generators are getting better. Sam Altman's other company scans your eyeballs in exchange for crypto. We asked ChatGPT how to break up with someone. NYC uses AI to track fare evaders.

Last week’s roundup

AI Roundup 024: The Llama King

Perhaps the biggest news this week was Meta's release of Llama 2, its latest and greatest large language model.

This is a time for change, and very likely faster change than we've ever seen before. I strongly suspect that AI safety considerations will take a back seat to copyright issues in the second half of this year, since that's where billions of dollars are flowing every day.