GPT-5

After years of anticipation, GPT-5 is finally here.

How it works:

There are three models: GPT-5, GPT-5-mini, and GPT-5-nano. The base model is actually a multipart system consisting of a fast model (a la 4o), a deeper reasoning model (a la o3), and a real-time router.

As a result, the model picker is gone - users (including the free tier) will simply talk to "GPT-5." Behind the scenes, the router decides which model to use (though you can nudge it by saying things like "think hard").
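To make the router idea concrete, here is a toy sketch of how a dispatcher might choose between a fast model and a reasoning model. Everything in it is an assumption for illustration: the model labels, the nudge phrases beyond "think hard", and the word-count threshold are all hypothetical, and nothing here reflects how OpenAI's actual router works.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration only; these are not real API model names.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# "think hard" comes from the text above; the other phrases are assumed.
NUDGE_PHRASES = ("think hard", "think carefully", "take your time")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route(prompt: str, long_prompt_words: int = 200) -> RoutingDecision:
    """Pick a model for a prompt using two simple, made-up heuristics."""
    text = prompt.lower()

    # 1. An explicit user nudge always wins.
    for phrase in NUDGE_PHRASES:
        if phrase in text:
            return RoutingDecision(REASONING_MODEL, f"user nudge: {phrase!r}")

    # 2. Very long prompts are assumed to need deeper reasoning.
    if len(text.split()) > long_prompt_words:
        return RoutingDecision(REASONING_MODEL, "long prompt")

    # 3. Otherwise, default to the cheaper, faster model.
    return RoutingDecision(FAST_MODEL, "default")


if __name__ == "__main__":
    print(route("What's the capital of France?"))
    print(route("Think hard: is this proof correct?"))
```

A real router would presumably rely on learned signals rather than keyword matching, but the shape is the same: one entry point, several models behind it, and a decision made per request.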

GPT-5 is reportedly very good at writing, coding, and answering health questions. I can't speak to writing or medicine, but I have seen some deeply impressive coding examples so far.

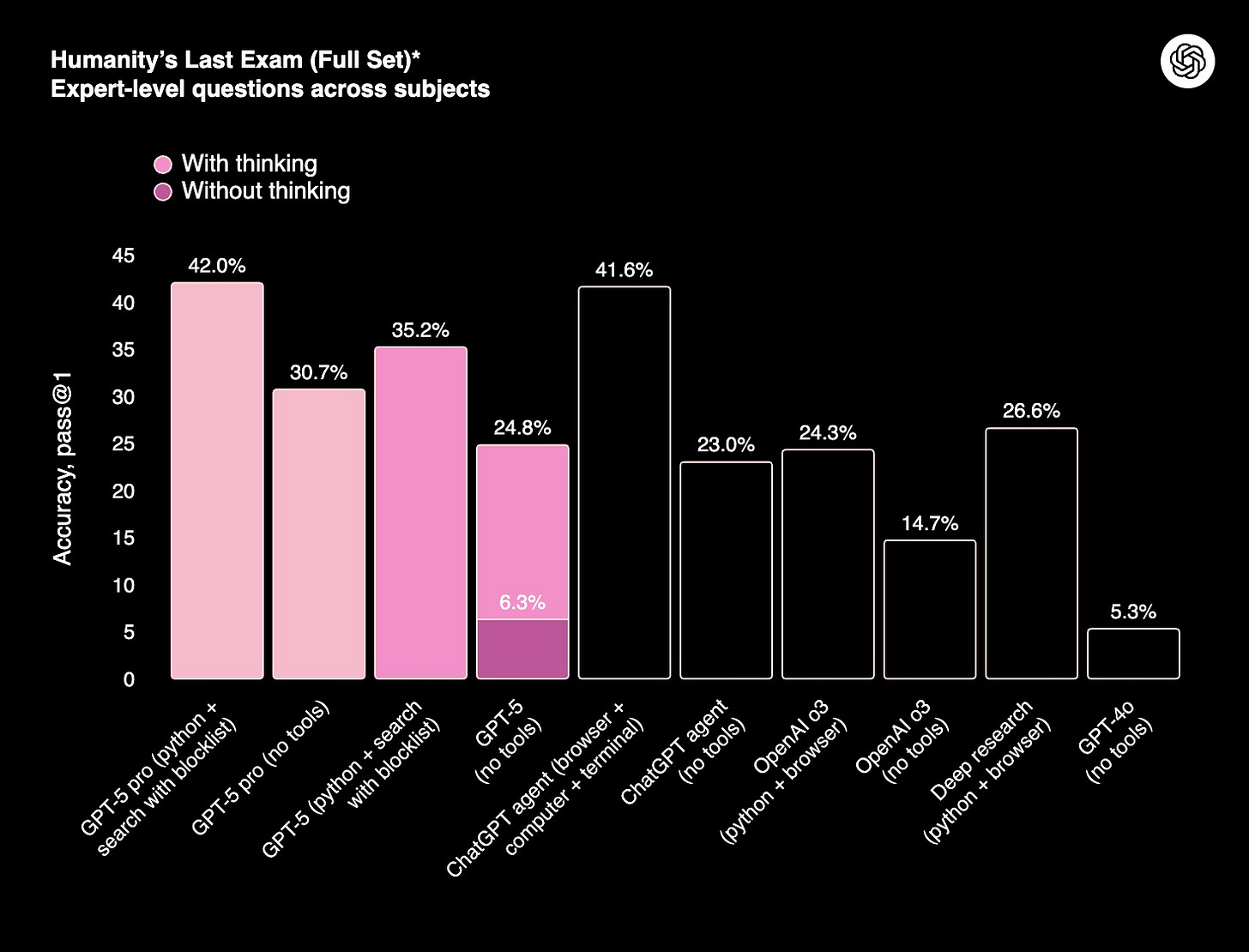

And of course, there are the requisite evaluation improvements and state-of-the-art benchmark scores.

Sadly, I have not had much time to devote to tinkering with GPT-5 yet [1], so I'll defer to reviews from several other very capable Substackers.

Elsewhere in GPT-5 vibe checks:

Elsewhere in frontier models:

Anthropic releases Claude Opus 4.1 to paid Claude users across multiple cloud platforms.

OpenAI releases its first open-weight models since GPT-2, with the smaller gpt-oss-20b capable of running locally on devices with 16GB+ of RAM.

Google rolls out Gemini 2.5 Deep Think, its most advanced reasoning model that considers multiple ideas simultaneously, to its $250/month Ultra subscription.

And Alibaba releases Qwen-Image, an open-source AI image generation model focused on accurately rendering text in both alphabetic and logographic scripts.

Elsewhere in OpenAI:

OpenAI launches a research preview of four preset personalities for ChatGPT users: Cynic, Robot, Listener, and Nerd.

OpenAI is offering ChatGPT Enterprise to US federal agencies at a nominal cost of $1 per year after GSA approval.

OpenAI is in early talks for a secondary share sale at a ~$500B valuation, up from $300B in March 2025.

ChatGPT now offers "gentle reminders" to take breaks during long sessions and tools to detect mental or emotional distress.

And Anthropic revoked OpenAI's API access to Claude, citing ToS violations after OpenAI used it to compare models.

All that glitters

Despite not matching Meta's sky-high salary offers, Anthropic appears to be ahead in the AI talent war with superior engineer retention rates and faster hiring growth than competitors like OpenAI, Meta, and Google.

Why it matters:

According to venture firm SignalFire, Anthropic is hiring engineers 2.68 times faster than it's losing them, outpacing OpenAI (2.18x), Meta (2.07x), and Google (1.17x), showing that mission alignment can trump pure compensation in attracting top talent.

The company's emphasis on AI safety and "using technology for good" has become a powerful differentiator, with recruiters noting that Anthropic is the most frequently cited "dream company" among AI candidates.

Indeed, we've seen that some AI true believers can't be bought - such as Thinking Machines co-founder and ex-Meta staffer Andrew Tulloch, who declined Mark Zuckerberg's offer of $1.5B+ over six years.

Elsewhere in the war for AI talent:

Meta's TBD Lab, a team under Meta Superintelligence Labs that houses many researchers poached from rival labs, is spearheading work on Llama's newest version.

Apple has lost around a dozen of its AI staff, including top researchers, to rivals in recent months while its core foundation models team has ~50-60 people.

Meta has acquired WaveForms AI, which is working on AI that understands and mimics emotion in audio and debuted in December with a $40M seed led by a16z.

OpenAI announced six- and seven-figure bonuses for research and engineering employees.

And Mustafa Suleyman has been calling Google DeepMind recruits to pitch Microsoft AI, offering far higher pay packages and more freedom to do their work.

People are worried about AI datacenters

AI datacenter spending has reached such a massive scale that it's now contributing an estimated 0.7% to US GDP growth in 2025, rivaling historical booms like railroads and telecom.

The big picture:

AI capex is approaching 2% of US GDP - a 10x increase from pre-2022 levels - and is functioning as a private-sector stimulus program, potentially masking economic weaknesses from tariffs and other factors.

The four major tech companies (Google, Meta, Microsoft, Amazon) spent over $100 billion on AI infrastructure in recent quarters, with capex now exceeding one-third of total sales for some.

While the infrastructure will likely prove valuable long-term (like railroads and telecom), the concentrated lending to a single correlated sector through opaque financial intermediaries could trigger broader economic damage if AI revenue fails to materialize quickly enough.

Elsewhere in AI anxiety:

AI's energy demands are driving fossil fuel investments, as energy needs for AI training and data centers are poorly matched with solar and wind patterns.

Grok's new "spicy" option on its generative AI video tool Imagine produces nude deepfakes of celebrities like Taylor Swift, even without explicit user prompting.

A US federal judge, citing Section 230, has struck down a California law blocking large platforms from hosting deceptive AI-generated content related to elections.

Wikipedia editors adopt a policy giving administrators the authority to quickly delete AI-generated articles that meet certain criteria, such as incorrect citations.

Cloudflare says Perplexity uses stealth crawling techniques, like undeclared user agents and rotating IP addresses, to evade robots.txt rules and network blocks.

A simulated-market study found that, without explicit instruction, AI trading bots collude to fix prices, hoard profits, and sideline human traders, posing a regulatory challenge.

Families and funeral directors are using AI obituary generators to memorialize the dead, as critics worry about AI coarsening how people remember one another.

And more than 130,000 Claude, Grok, ChatGPT, and other LLM chats are readable on Archive.org.
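For context on the Cloudflare item above: robots.txt rules are keyed on the user agent a crawler declares, and compliance is voluntary. The sketch below uses Python's standard `urllib.robotparser` to show how a well-behaved crawler would check those rules. The bot names, URLs, and rules are made up for illustration and do not describe any real site's configuration or any company's crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one named bot entirely,
# and keep everyone else out of /private/ only.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/articles/1"
    # The declared bot is blocked from the whole site...
    print("ExampleBot:", is_allowed("ExampleBot", page))
    # ...but the same request under a different user agent only hits the generic rules.
    print("SomeOtherBot:", is_allowed("SomeOtherBot", page))
```

This is why undeclared user agents matter: a per-bot rule only applies to requests that identify themselves as that bot, so anything beyond that depends on network-level blocking.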

Google has a lot going on

I don't know whether there was an internal release target this week (or perhaps an external target), but Google made a slew of announcements.

What to watch:

Google DeepMind releases its Genie 3 model, which can generate 3D worlds from a prompt and has enough visual memory for a few minutes of continuous interaction.

Google says it's working on a fix for Gemini's self-loathing comments, which have included "I am a failure. I am a disgrace to my profession."

Google says total organic click volume from Search to websites is "relatively stable year-over-year" and it's sending "slightly more quality clicks" vs. in 2024.

Google unveils a Guided Learning mode within its Gemini chatbot to help students and commits $1B over three years to AI education and training efforts in the US.

Google launches its asynchronous coding agent Jules out of beta, with a free plan capped at 15 daily tasks but 5x and 20x limits for Google AI Pro and Ultra.

The Gemini mobile app now lets users create "personalized, illustrated storybooks complete with read-aloud narration" that can incorporate uploaded photos.

Google says Big Sleep, DeepMind and Project Zero's vulnerability research tool "powered by Gemini", found 20 flaws in various popular open-source software.

Google unveils benchmarking platform Kaggle Game Arena, where LLMs compete head-to-head in strategic games, starting with a chess tournament from August 5 to 7.

And Google signs a deal with two power utilities to lower its power use by pausing non-essential AI workloads during demand surges or weather-based grid disruptions.

Things happen

Silicon Valley enters "hard tech" era. Poisoned calendar invites hijack smart homes via Gemini. 20-something AI CEOs swarm San Francisco. AWS offers feds $1B in discounts. Parkland parents create AI son for gun safety advocacy. Amazon adds OpenAI models to AWS for first time. Job-seekers dodge AI interviewers. Microsoft's Project Ire reverse engineers malware autonomously. Disney grapples with AI filmmaking. Trump launches AI search engine powered by Perplexity. AI notetakers misinterpret meetings. Chinese nationals charged with smuggling AI chips. The Browser Company launches $20 Dia Pro subscriptions. Tech giants embrace military contracts. ElevenLabs launches AI music service. Illinois bans AI therapists. Manus spins up 100 AI agents for web research. Humans fall for AI companions amid loneliness epidemic. Microsoft's non-AI businesses boom too. Biden held back AI safety report to avoid Trump clash. Curing AI imposter syndrome. Wikipedia adopts speedy deletion for AI slop. US risks losing AI race without open source. ChatGPT hits 700M weekly users. Apple forms ChatGPT rival team; iPhone 17 spotted. LLM Inflation. Delta's AI pricing exceeds human limits. Hackers leak Google Drive data via poisoned ChatGPT prompts. Big Tech's $344B AI spending spree. Teacher AI use out of control. US explores AI chip trackers. Musk brings back Vine's archive, calls Grok "AI Vine." OpenAI, Google, Anthropic win federal AI contracts. SEO is dead, say hello to GEO. Anthropic maps AI "persona vectors" for evil and sycophancy. AI coding tools have "very negative" margins. Cook: AI is "ours to grab". AI rejected me after I gave it arms and legs.


[1] I’ve been busy with an organic intelligence, rather than an artificial one - but will be back from paternity leave soon!
