Tutorial: How to streamline your writing process with Whisper and GPT-4

These Python scripts help me write 3x faster and go from loose ideas to first draft in minutes.

When I tell people that I publish an AI-focused Substack, they often ask, "Does that mean you use ChatGPT to write your articles?"

And I'm here to tell you that the answer is yes - but probably not in the way you think.

To be clear, I do not use ChatGPT (or any other LLM) to write posts that I pass off as my own. The point of my publication is to explore new ideas and teach them to others - having AI write my content would be like a fitness instructor who has robots do all of the exercises.

But I do use AI in a few different ways as part of my writing process. And one of those ways is to rapidly go from loose ideas to a first draft.

Here's how I do it:

Record a voice memo on my phone and transfer it to my laptop

Transcribe the audio using the Whisper API

Edit the transcription using the ChatGPT API

And in the process, deal with edge cases around context windows

It's a simple process, but that makes it simple to implement yourself! Let's dive into each step and the code snippets that I'm using behind the scenes.

Talking to myself for fun and profit

The first step in the process is to record a voice memo. Honestly, if you haven’t tried doing this, I highly recommend it. Finding short breaks during the day (commuting to work, walking the dog, etc.) to hammer out my thoughts has made writing essays far easier. Though for a post like this that’s full of code snippets, voice memos are somewhat less useful.

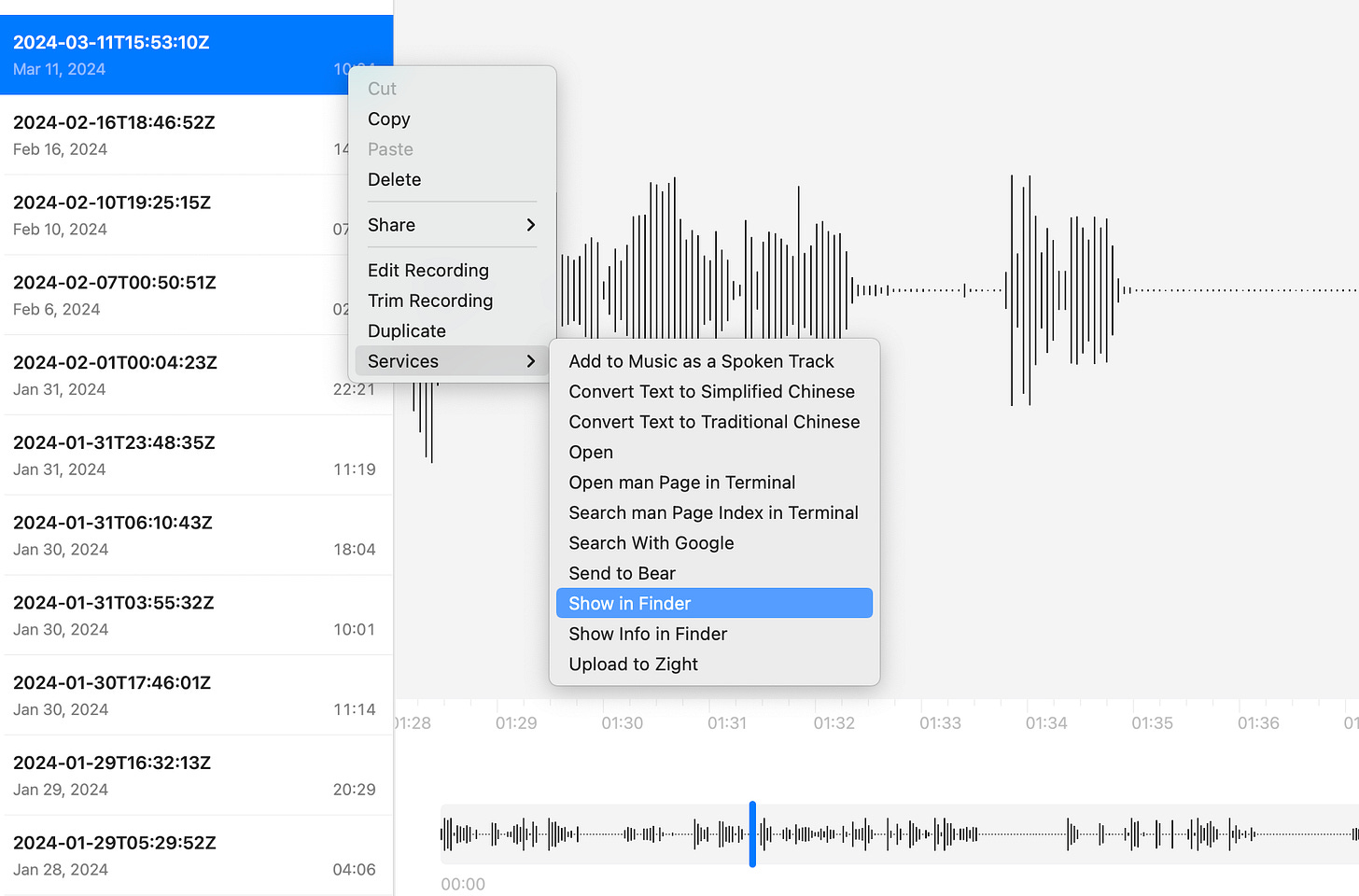

Since I've got multiple Apple devices, I can record an audio file on my iPhone and then transfer it to my MacBook Pro via iCloud. Once uploaded, I find the .m4a file and copy it to another directory (for easier editing).

Transcribing the audio file using Whisper

Before we get started, we're going to want to install the relevant Python libraries:

pip install openai tiktoken

And we'll want to make sure we have the OpenAI client set up correctly:

client = OpenAI(api_key="OPENAI_API_KEY")

But once that's done, it's actually dead simple to create a transcription from an audio file (thanks, OpenAI!). It's more-or-less a single line of code, where we're passing in the audio file data and specifying the model (which for now is limited to whisper-1).

def transcribe_audio(audio_filename):

with open(audio_filename, "rb") as audio_file:

transcript = (

client

.audio

.transcriptions

.create(file=audio_file, model="whisper-1")

)

return transcript.textWhisper is blazing fast - it can transcribe a half hour of audio in just a few seconds and costs only a few pennies - less if you're running the Whisper model locally. But there are a few downsides:

It may not always correctly identify slang or proper nouns; it often writes "Chat GBT" in my transcriptions.

It's not built to identify multiple speakers (aka speaker diarization), so it's best used for monologuing.

The output is a single uninterrupted block of text, which is difficult to parse and edit.

Most of these aren’t dealbreakers, but there are ways around them. whisperX, for example, adds speaker diarization and other features to the base Whisper model. In this case, I care most about the single block of text problem, which we can solve with ChatGPT.

Editing the transcription with GPT-4

The biggest issue with the raw Whisper output is that editing it is a nightmare - there are no paragraph breaks or logical sentence groupings. But this is a great use case for GPT-4! Unlike GPT-3.5, it's smart enough to process the input and break the sentences into meaningful paragraphs.

With the GPT-4 API, we can write a simple edit_text method. Once again, the API call is essentially one line, which I've broken up here for better readability:

def edit_text(text):

PROMPT = "Add paragraph breaks to the provided text without modifying any words."

response = client.chat.completions.create(

model="gpt-4",

temperature=0.3,

messages=[

{"role": "system", "content": PROMPT},

{"role": "user", "content": text}

]

)

edited_text = response.choices[0].message.content

return edited_textThere are a couple of hurdles when working with GPT-4 for edits. First, the prompt needs to be pretty explicit about not modifying words. While I also experimented with trying to get GPT-4 to remove filler words ("um", "like", "so"), I found that the model really wants to summarize things. As soon as it got "permission" to modify the text, it took some big liberties as it rewrote sentences. In my case, I kept the filler words to ensure I was getting the original content back out, but you could create a multi-step chain to do additional filler word removal.

But the bigger issue was context windows. In fact, this threatened to be a dealbreaker - the base GPT-4 model only had a context window of 8K tokens, which I could easily exceed with 10 minutes of uninterrupted rambling. Not only that, but the context window is measured across input and output tokens, meaning the transcript itself could only be about 4K tokens long - nowhere near enough to cover a normal brainstorming session.

Luckily, there are a few ways of solving our problem.

Dealing with context window limitations

The main issue is that we don't know how many tokens our transcription will be - so we don't know how much of that text we should send to GPT-4. Fortunately, OpenAI provides a library, tiktoken, which uses the same tokenizer as its API.

Using the tiktoken library, we can create a split_text function that takes our raw text, along with a model name and token limit, and breaks the text up into chunks. Each chunk will have fewer tokens than our token limit, meaning we can then send each chunk to GPT-4 separately and stay within the context window limits.

def split_text(text, model, token_limit):

encoder = tiktoken.encoding_for_model(model)

text_tokens = encoder.encode(text)

chunks = (len(text_tokens) // token_limit) + 1

length = (len(text_tokens) // chunks) + 1

split_text = []

for chunk in range(chunks):

split_tokens = text_tokens[

chunk * length:

min((chunk + 1) * length, len(text_tokens))

]

split_text.append(encoder.decode(split_tokens))

return split_textOf course, we can also upgrade the model to avoid cutting our transcript into tons of chunks. However, be careful: while GPT-4 Turbo appears to have far and away the biggest context window (128K tokens), that applies to the inputs - it's limited to 4K tokens of output! That's actually smaller than the base GPT-4 model, and about the same as GPT-3.5.

A better option is GPT-4 32K, (gpt-4-32k in the API) which splits 32K tokens across both inputs and outputs. It's more expensive than GPT-4 Turbo, but in my case I wasn't particularly worried about costs. Here's what an updated edit_text function looks like, taking into account our new model parameters and chunking approach:

def edit_text(text):

PROMPT = "Add paragraph breaks to the provided text without modifying any words."

model, token_limit = "gpt-4-32k", 16384

sections = split_text(text, model, token_limit)

edited_text = ""

for section in sections:

response = client.chat.completions.create(

model=model,

max_tokens=token_limit,

temperature=0.3,

messages=[

{"role": "system", "content": PROMPT},

{"role": "user", "content": section}

]

)

edited_text += response.choices[0].message.content

return edited_textAnd once we have the final text, we can write it to a file to reuse for editing.

Final thoughts

Incorporating AI into my workflow has been a big efficiency boost. By leveraging Whisper and ChatGPT, I can go for a walk and dictate a new post, saving me hours of manual transcription and editing.

If you’re not into running your own code, standalone products like superwhisper and AudioPen offer similar features for a monthly fee. But building my own lo-fi implementation has given me a deeper understanding of the Whisper and ChatGPT APIs, and has provided an incredibly cost-effective solution. The ability to process thousands of words for just a few pennies helps, especially considering the growing subscriptionization of AI services.

But besides the cost savings, using AI has made me a more productive and efficient content creator. Writing isn't my full-time job, but thanks to AI, I can research and publish more than I could otherwise. Of course, I also believe that these tools are meant to support and augment your creative process, not replace it.

How are you incorporating AI into your projects or creative pursuits? Share your tips and tricks in the comments!

Some weeks ago, I was actually toying with the idea of paying for AudioPen as I want to make taking voice notes a part of my routine. (It never became a natural go-to habit just yet.)

I was then pleasantly surprised to find out that the standard "Recorder" app on my Google Pixel 8 already does its own transcripts, which are pretty decent. It might not be quite at Whisper level, but I didn't notice any major mistakes.

So now I can potentially simply plug those transcripts into ChatGPT or another LLM and get them cleaned up, organized, and ready for drafting.

I use some similar systems. I've been pretty impressed with the native Samsung AI transcription on my phone.

Another good tool for this purpose is scribebuddy.co. it's paid but you can buy a chunk of minutes rather than pay yet another monthly fee. The quality of the transcriptions has been pretty good. I've played around with automating the entire process but it's still not streamlined just yet!