Three ways to chat with your documents

Or: how to build a ChatGPT for your business.

Does this sound like a conversation you've had with your CEO, or with yourself?

"How can we leverage generative AI for our business?"

"Let's make a ChatGPT for our users. They can ask questions about our knowledge base and get back informative answers."

"...How do we do that?"

In the age of ChatGPT, it feels like almost everyone is trying to figure out how to talk to their data. And adding a conversational UI on top of an existing data source can make a lot of sense. We're seeing it emerge in multiple areas, from customer service to medicine.

But how can you do this in practice?

Six months ago, there was a straightforward "best" way to do this. But with recent advancements in large language models, there are a few options with different tradeoffs. We'll review the pros and cons of three of them.

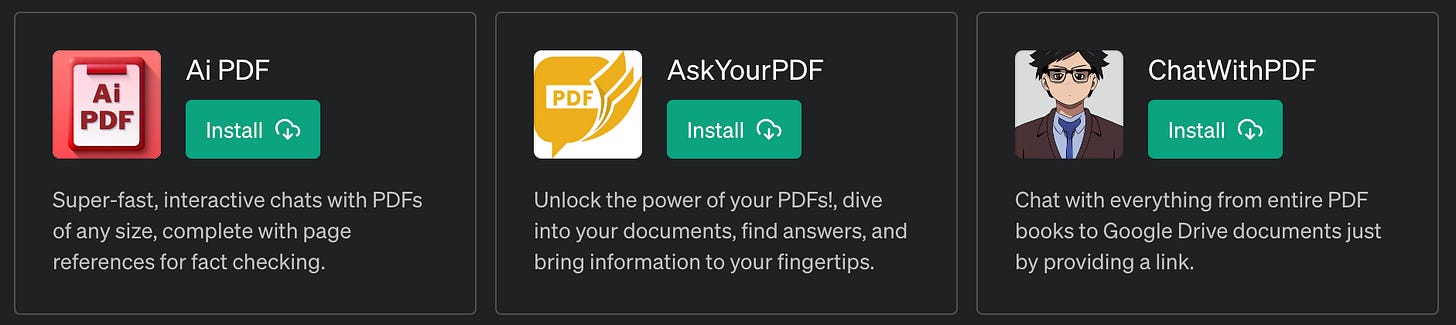

Note: this post is aimed at companies looking to build this into an existing product. If you're looking to try this out for yourself, you can use an app like ChatPDF or install a ChatGPT plugin.

Approach 1: Use ChatGPT or Claude

The simplest way is to use a chatbot like ChatGPT or Claude and provide the document alongside each question. This is quite easy to build, requiring little-to-no coding.

But this approach only works for small documents or datasets, because it's limited by the LLM's context window: its "working memory," so to speak. ChatGPT's context window is up to roughly 16,000 tokens, and Claude's is 100,000 tokens. For reference, one hundred thousand tokens is about the length of The Great Gatsby.
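To make the context-window limit concrete, here is a minimal Python sketch of Approach 1: paste the whole document into the prompt, then check whether it fits. It assumes the common rule of thumb of about four characters per English token (a real tokenizer, such as OpenAI's tiktoken library, gives exact counts), and `chatgpt-16k` and `claude-100k` are hypothetical labels for the window sizes quoted above, not real model IDs. The actual API call is left out.

```python
# Rule of thumb (assumption): roughly 4 characters of English text per token.
CHARS_PER_TOKEN = 4

# Hypothetical labels for the context window sizes quoted above, in tokens.
CONTEXT_WINDOWS = {"chatgpt-16k": 16_000, "claude-100k": 100_000}


def estimate_tokens(text: str) -> int:
    """Rough token count; a real tokenizer gives exact numbers."""
    return len(text) // CHARS_PER_TOKEN + 1


def build_prompt(document: str, question: str) -> str:
    """Approach 1: put the entire document in front of the question."""
    return (
        "Answer the question using only the document below. "
        "If the answer is not in the document, say you don't know.\n\n"
        f"Document:\n{document}\n\nQuestion: {question}"
    )


def fits_in_context(prompt: str, model: str, reserve_for_answer: int = 1_000) -> bool:
    """True if the prompt plus room for the model's reply fits in the window."""
    return estimate_tokens(prompt) + reserve_for_answer <= CONTEXT_WINDOWS[model]


# Usage: a made-up document of about 90,000 characters (~22,500 tokens).
doc = "Our refund window is 30 days. " * 3_000
prompt = build_prompt(doc, "How long is the refund window?")
print(fits_in_context(prompt, "chatgpt-16k"))  # False: too big for 16,000 tokens
print(fits_in_context(prompt, "claude-100k"))  # True
```

The "say you don't know" instruction is a common prompt-level mitigation for made-up answers; it can reduce them, but it doesn't eliminate them.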

The most obvious downside is that these models can hallucinate: if an answer is not in the provided text, the model may invent one instead. The other drawback is cost. Sending the whole document or dataset with every question consumes both time and money.
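The cost side is simple arithmetic: you pay for the whole document again on every question. The sketch below uses a hypothetical per-1,000-token input price; check your provider's current pricing for real numbers.

```python
def monthly_prompt_cost(doc_tokens: int, questions_per_day: int,
                        usd_per_1k_input_tokens: float, days: int = 30) -> float:
    """Input-token cost of resending the whole document with every question.

    Ignores the tokens in the question and in the model's answer,
    which add to the real bill.
    """
    tokens_per_month = doc_tokens * questions_per_day * days
    return tokens_per_month / 1_000 * usd_per_1k_input_tokens


# Usage: a 50,000-token document, 200 questions a day,
# at a hypothetical price of $0.01 per 1,000 input tokens.
cost = monthly_prompt_cost(50_000, 200, 0.01)
print(f"${cost:,.0f} per month")  # $3,000 per month
```

That is 300 million input tokens a month spent on re-reading the same document.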

To experiment with this approach, I'd suggest starting with OpenAI's Playground, then taking a look at their developer documentation.

Approach 2: Fine-tune a language model

Previously, the "best" way to build Q&A over your data was to fine-tune GPT-3. For reference, ChatGPT is built on GPT-3.5, and many now have access to GPT-4. Fine-tuning is a straightforward process once you have a set of training examples to use. And as open-source models have improved, you can fine-tune an open-source model and keep all your data private.

There are a couple of hurdles to this approach as well. The biggest one is the work involved in creating the training data: each training run needs many fine-tuning examples. And each document would need its own fine-tuned model, which makes the process rigid, since every time the content changes you have to train again.
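Here is what "creating the training data" can look like in practice: a minimal Python sketch that turns hand-written question/answer pairs into a JSONL file of `prompt`/`completion` records, the format used by OpenAI's legacy GPT-3 fine-tuning. The separator and stop-sequence values are assumed conventions in the style of that legacy guide, and the example pairs are made up. Newer chat models and open-source training toolkits expect different formats, so check the docs for whichever model you fine-tune.

```python
import json

# Made-up Q&A pairs, written by hand from one of your documents.
examples = [
    {"question": "How long is the refund window?", "answer": "30 days from delivery."},
    {"question": "Do you ship internationally?", "answer": "Yes, to most countries."},
]

# Assumed conventions: a fixed separator ends every prompt, and a fixed
# stop sequence ends every completion, so the model learns where to stop.
SEPARATOR = "\n\n###\n\n"
STOP = " END"


def to_jsonl(pairs):
    """Turn Q&A pairs into one JSON object per line (prompt/completion format)."""
    lines = []
    for pair in pairs:
        record = {
            "prompt": pair["question"] + SEPARATOR,
            "completion": " " + pair["answer"] + STOP,  # leading space: assumed convention
        }
        lines.append(json.dumps(record))  # json.dumps escapes the newlines
    return "\n".join(lines) + "\n"


print(to_jsonl(examples))
```

You would save the returned string as a file such as `train.jsonl` and upload it for training. Multiply this by every document and every question users might ask, and the effort adds up quickly.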

There's also the fact that GPT-4's output generally surpasses GPT-3's by a wide margin. By fine-tuning GPT-3 (or an open-source model), you're choosing not to use the latest and greatest, though that might not matter for your use case.

OpenAI has resources on fine-tuning GPT-3, and you can also find tutorials on fine-tuning open-source models.

Approach 3: Use embeddings

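The third option is something of a middle ground. It sidesteps the context window issue, since only the relevant parts of your data are sent to the model, while also avoiding a rigid fine-tuning process. Plus, because the model answers from retrieved text, it can significantly cut down on hallucinations.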

The key is an AI concept called embeddings. In a nutshell, embeddings are a way to turn a block of text into a list of numbers (a "vector"). That might not sound special, but embeddings map text with similar meanings to similar vectors. For example, the embedding vector of “canine companions say” will be more similar to the embedding vector of “woof” than to that of “meow.”
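"Similar" here is usually measured with cosine similarity, which scores how closely two vectors point in the same direction. The vectors below are made-up, three-dimensional toy values chosen to mirror the example above, not output from a real embeddings model (real ones have hundreds or thousands of dimensions).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional "embeddings" (made-up values, for illustration only).
canine_companions_say = [0.9, 0.1, 0.3]
woof = [0.8, 0.2, 0.35]
meow = [0.1, 0.9, 0.3]

print(round(cosine_similarity(canine_companions_say, woof), 2))  # 0.99
print(round(cosine_similarity(canine_companions_say, meow), 2))  # 0.3
```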

There are several use cases for embeddings, but for our purposes, we care about finding text from our documents that’s similar to a user’s question. With embeddings, we can do the following:

1. Split the document or data source into sections, convert each section into an embedding, and store the embeddings, along with the original text, in a database.
2. Take a user question and convert it to an embedding.
3. Search the database for the sections whose embeddings are most similar to the question's.
4. Pull out the original text of those sections. (Embeddings can't be converted back into text, which is why the text is stored alongside them.)
5. Provide those sections to ChatGPT, alongside the user's question, to generate an answer.
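The five steps above can be sketched in plain Python. This is a toy, not a production design: a bag-of-words word counter (the hypothetical `embed` function) stands in for a real embeddings model, an in-memory list (the hypothetical `InMemoryVectorStore`) stands in for a vector database like Pinecone, and the final call to ChatGPT is left out. `build_prompt` just assembles the text you would send.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=50):
    """Step 1a: split a document into sections of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy stand-in for an embeddings model: counts of lowercase words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Stand-in for a vector database. Each row keeps the vector AND the
    original text, so retrieval returns stored text rather than decoding a vector."""

    def __init__(self):
        self.rows = []  # list of (vector, text) pairs

    def add(self, text):
        # Step 1b: embed a section and store it alongside its text.
        self.rows.append((embed(text), text))

    def search(self, query_vector, k=2):
        # Step 3: rank stored sections by similarity to the question.
        ranked = sorted(self.rows, key=lambda row: cosine(query_vector, row[0]), reverse=True)
        # Step 4: return the original text of the top-k sections.
        return [text for _, text in ranked[:k]]


def build_prompt(question, store, k=2):
    """Steps 2 to 4, plus the prompt for step 5."""
    sections = store.search(embed(question), k)  # step 2 happens inside embed()
    excerpts = "\n---\n".join(sections)
    return (
        "Answer using only the excerpts below. "
        "If they don't contain the answer, say you don't know.\n\n"
        f"Excerpts:\n{excerpts}\n\nQuestion: {question}"
    )


# Usage: index a tiny made-up knowledge base, then build a prompt for one question.
knowledge_base = [
    "Refund policy: you can request a refund within 30 days of delivery.",
    "Shipping: we ship to most countries, and delivery takes 7 to 14 days.",
    "Support: our team answers email on weekdays.",
]
store = InMemoryVectorStore()
for document in knowledge_base:
    for section in chunk(document):
        store.add(section)

print(build_prompt("How do I request a refund?", store, k=1))
```

Swapping in a real embeddings model and vector database changes `embed` and the store, but the flow stays the same. The chunk size and `k` (how many sections to retrieve) are tuning knobs: larger values give the model more context but use more of its context window.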

By combining embeddings with ChatGPT, you get ChatGPT's conversational abilities while grounding its answers in the most relevant parts of your own data.

If you want to build this yourself, you'll need an embeddings model, a vector database like Pinecone, and code to glue everything together. Or you could build on top of a project like LlamaIndex (formerly GPT Index), which handles much of the heavy lifting for you.

I've used ChatPDF a bit. It works! But I think its usability still needs some polish. It's no GPT-4, that's for sure.