Lessons from 139 YC AI startups (S23)

The evolution of the AI landscape, and YC's Copilot era.

YC's Demo Day was this week, and with it comes another deluge of AI companies. A record-breaking 139 startups were in some way related to AI or ML - up from 112 in the last batch.

Here are 5 of my biggest takeaways.

AI is (still) eating the world.

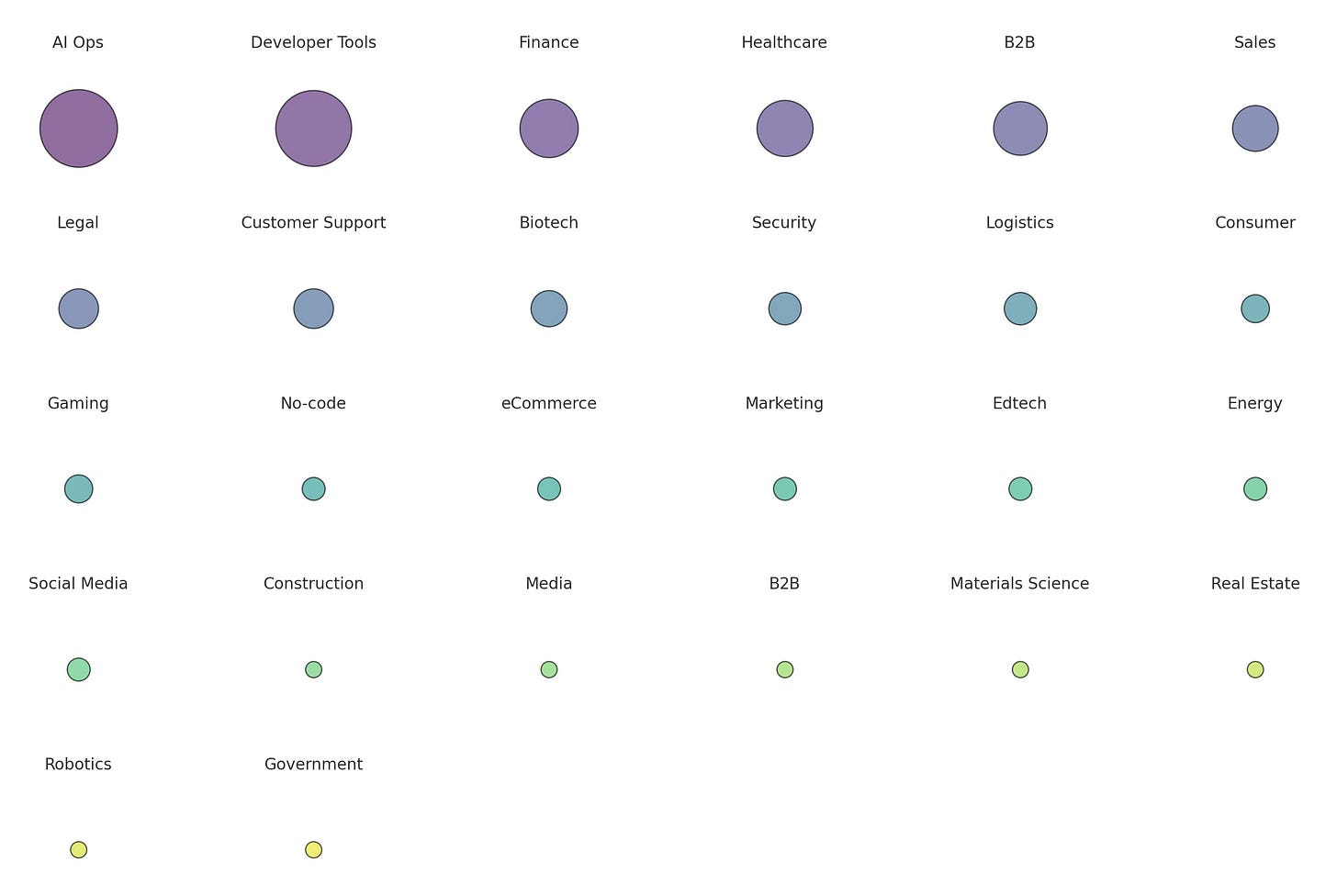

It's remarkable how diverse the industries are - over two dozen verticals were represented, from materials science to social media to security. However, the top four categories were:

AI Ops: Tooling and platforms to help companies deploy working AI models. We'll discuss more below, but AI Ops has become a huge category, primarily focused on LLMs and taming them for production use cases.

Developer Tools: Apps, plugins, and SDKs making it easier to write code. There were plenty of examples of integrating third-party data, auto-generating code/tests, and working with agents/chatbots to build and debug code.

Healthcare + Biotech: It seems like healthcare has a lot of room for automation, with companies working on note-taking, billing, training, and prescribing. And on the biotech side, there are some seriously cool companies building autonomous surgery robots and at-home cancer detection.

Finance + Payments: Startups targeting banks, fintechs, and compliance departments. This was a wide range of companies, from automated collections to AI due diligence to "Copilot for bankers."

Those four areas covered over half of the startups. The first two make sense: YC has always filtered for technical founders, and many are using AI to do what they know - improve the software developer workflow. But it's interesting to see healthcare and finance not far behind. Previously, I wrote:

Large enterprises, healthcare, and government are not going to send sensitive data to OpenAI. This leaves a gap for startups to build on-premise, compliant [LLMs] for these verticals.

And we're now seeing exactly that - LLMs focused on healthcare and finance and AI Ops companies targeting on-prem use cases. It also helps that one of the major selling points of generative AI right now is cost-cutting - an enticing use case for healthcare and finance.

Copilots are king.

In the last batch, a lot of startups positioned themselves as "ChatGPT for X," with a consumer focus. It seems the current trend, though, is "Copilot for X" - B2B AI assistants to help you do everything from KYC checks to corporate event planning to chip design to negotiate contracts.

Nearly two dozen companies were working on some sort of artificial companion for businesses - and a couple for consumers. It's more evidence for the argument that AI will not outright replace workers - instead, existing workers will collaborate with AI to be more productive. And as AI becomes more mainstream, this trend of making specialized tools for specific industries or tasks will only grow.

That being said - a Bing-style AI that lives in a sidebar and is only accessible via chat probably isn't the most useful form factor for AI. But until OpenAI, Microsoft, and Google change their approach (or until another company steps up), we'll probably see many more Copilots.

AI Ops is becoming a key sector.

"AI Ops" has been a term for only a few years. "LLM Ops" has existed for barely a year. And yet, so many companies are focused on training, fine-tuning, deploying, hosting, and post-processing LLMs it's quickly becoming a critical piece of the AI space. It's a vast industry that's sprung up seemingly overnight, and it was pretty interesting to see some of the problems being solved at the bleeding edge. For example:

Adding context to language models with as few as ten samples.

Pausing and moving training runs in real-time.

Managing training data ownership and permissions.

Faster vector databases.

Fine-tuning models with synthetic data.

But as much hype enthusiasm and opportunity as there might be, the size of the AI Ops space also shows how much work is needed to really productionalize LLMs and other models. There are still many open questions about reliability, privacy, observability, usability, and safety when it comes to using LLMs in the wild.

Who owns the model? Does it matter?

Nine months ago, anyone building an LLM company was doing one of three things:

Training their own model from scratch.

Fine-tuning a version of GPT-3.

Building a wrapper around ChatGPT.

Thanks to Meta, the open-source community, and the legions of competitors trying to catch up to OpenAI, there are now dozens of ways to integrate LLMs. However, I found it interesting how few B2B companies mentioned whether or not they trained their own model. If I had to guess, I'd say many are using ChatGPT or a fine-tuned version of Llama 2.

But it raises an interesting question - if the AI provides value, does it matter if it's "just" ChatGPT behind the scenes? And once ChatGPT becomes fine-tuneable, when (if ever) will startups decide to ditch OpenAI and use their own model instead?

"AI" isn't a silver bullet.

At the end of the day, perhaps the biggest lesson is that "AI" isn't a magical cure-all - you still need to build a defensible company. At the beginning of the post-ChatGPT hype wave, it seemed like you just had to say "we're adding AI" to raise your next round or boost your stock price.

But competition is extremely fierce. Even within this batch, there were multiple companies with nearly identical pitches, including:

Solving customer support tickets.

Negotiating sales contracts.

Writing drafts of legal documents.

Building no-code LLM workflows.

On-prem LLM deployment.

Automating trust and safety moderation.

As it turns out, AI can be a competitive advantage, but it can't make up for a bad business. The most interesting (and likely valuable) companies are the ones that take boring industries and find non-obvious use cases for AI. In those cases, the key is having a team that can effectively distribute a product to users, with or without AI.

Where we’re headed

I'll be honest - 139 companies is a lot. In reviewing them all, there were points where it just felt completely overwhelming. But after taking a step back, seeing them all together paints an incredibly vivid picture of the current AI landscape: one that is diverse, rapidly evolving, and increasingly integrated into professional and personal tasks.

These startups aren't just building AI for the sake of technology or academic research, but are trying to address real-world problems. Technology is always a double-edged sword - and some of the startups felt a little too dystopian for my taste - but I'm still hopeful about AI's ability to improve productivity and the human experience.